This article is part of Jepsen, a series on network partitions. We’re going to learn about distributed consensus, discuss the CAP theorem’s implications, and demonstrate how different databases behave under partition.

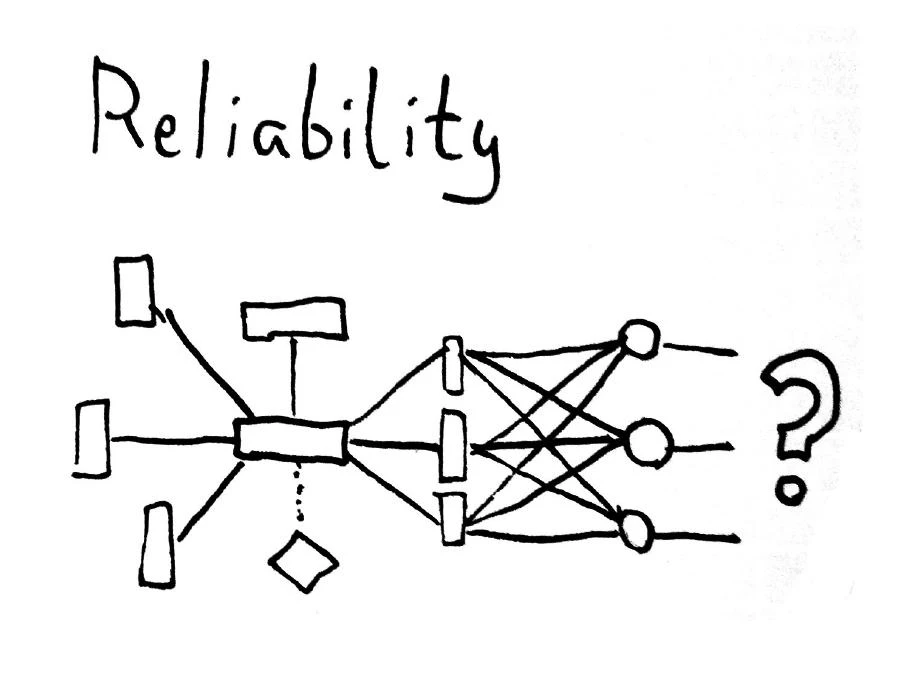

Modern software systems are composed of dozens of components which communicate over an asynchronous, unreliable network. Understanding the reliability of a distributed system’s dynamics requires careful analysis of the network itself. Like most hard problems in computer science, this one comes down to shared state. A set of nodes separated by the network must exchange information: “Did I like that post?” “Was my write successful?” “Will you thumbnail my image?” “How much is in my account?”

At the end of one of these requests, you might guarantee that the requested operation…

- will be visible to everyone from now on

- will be visible to your connection now, and others later

- may not yet be visible, but is causally connected to some future state of the system

- is visible now, but might not be later

- may or may not be visible: ERRNO_YOLO

These are some examples of the complex interplay between consistency and durability in distributed systems. For instance, if you’re writing CRDTs to one of two geographically replicated Riak clusters with W=2 and DW=1, you can guarantee that write…

- is causally connected to some future state of the system

- will survive the total failure of one node

- will survive a power failure (assuming fsync works) of all nodes

- will survive the destruction of an entire datacenter, given a few minutes to replicate

If you’re writing to ZooKeeper, you might have a stronger set of guarantees: the write is visible now to all participants, for instance, and will survive the total failure of any minority of nodes (up to ⌈n/2⌉ - 1 of n). If you write to Postgres, depending on your transaction’s consistency level, you might be able to guarantee that the write will be visible to everyone, just to yourself, or “eventually”.
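To make the quorum arithmetic concrete, here is a minimal Python sketch. It assumes a majority-quorum system, which is how a ZooKeeper ensemble stays available; the function names are made up for illustration and are not part of any ZooKeeper API.

```python
def majority(n):
    """Smallest number of nodes that forms a strict majority of n."""
    return n // 2 + 1


def tolerated_failures(n):
    """Nodes that can fail while a majority of the n nodes is still alive."""
    return n - majority(n)


# Hypothetical cluster sizes, chosen only for illustration.
for size in (3, 4, 5):
    print(size, "nodes: quorum of", majority(size),
          "tolerates", tolerated_failures(size), "failures")
```

Note that going from three nodes to four raises the quorum size without tolerating any additional failures, which is one reason odd cluster sizes are common.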

These guarantees are particularly tricky to understand when the network is unreliable.

Partitions

Formal proofs of distributed systems often assume that the network is asynchronous, which means the network may arbitrarily duplicate, drop, delay, or reorder messages between nodes. This is a weak hypothesis: some physical networks can do better than this, but in practice IP networks will encounter all of these failure modes, so the theoretical limitations of the asynchronous network apply to real-world systems as well.

In practice, the TCP state machine allows nodes to reconstruct “reliable” ordered delivery of messages between nodes. TCP sockets guarantee that our messages will arrive without drops, duplication, or reordering. However, there can still be arbitrary delays–which would ordinarily cause the distributed system to lock indefinitely. Since computers have finite memory and latency bounds, we introduce timeouts, which close the connection when expected messages fail to arrive within a given time frame. Calls to read() on sockets will simply block, then fail.
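The block-then-fail behaviour is easy to see in a small Python sketch. It uses a local socket pair as a stand-in for two nodes; the helper name `read_with_timeout` and the 0.2 second timeout are assumptions chosen for the example.

```python
import socket


def read_with_timeout(sock, timeout_s):
    """Return received bytes, or None if nothing arrives within timeout_s."""
    sock.settimeout(timeout_s)
    try:
        return sock.recv(1024)
    except socket.timeout:
        # The peer may be slow, partitioned, or dead: we cannot tell which.
        return None


# A connected pair of local sockets stands in for two nodes.
a, b = socket.socketpair()
a.sendall(b"ack")
first = read_with_timeout(b, 0.2)   # data is already waiting
second = read_with_timeout(b, 0.2)  # peer is silent: blocks, then gives up
a.close()
b.close()
```

After the second call, the reader knows only that no message arrived in time, not why.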

Detecting network failures is hard. Since our only knowledge of the other nodes passes through the network, delays are indistinguishable from failure. This is the fundamental problem of the network partition: latency high enough to be considered a failure. When partitions arise, we have no way to determine what happened on the other nodes: are they alive? Dead? Did they receive our message? Did they try to respond? Literally no one knows. When the network finally heals, we’ll have to re-establish the connection and try to work out what happened–perhaps recovering from an inconsistent state.

Many systems handle partitions by entering a special degraded mode of operation. The CAP theorem tells us that we can either have consistency (technically, linearizability for a read-write register), or availability (all nodes can continue to handle requests), but not both. What’s more, few databases come close to CAP’s theoretical limitations; many simply drop data.

In this series, I’m going to demonstrate how some real distributed systems behave when the network fails. We’ll start by setting up a cluster and a simple application. In each subsequent post, we’ll explore that application written for a particular database, and how that system behaves under partition.

Setting up a cluster

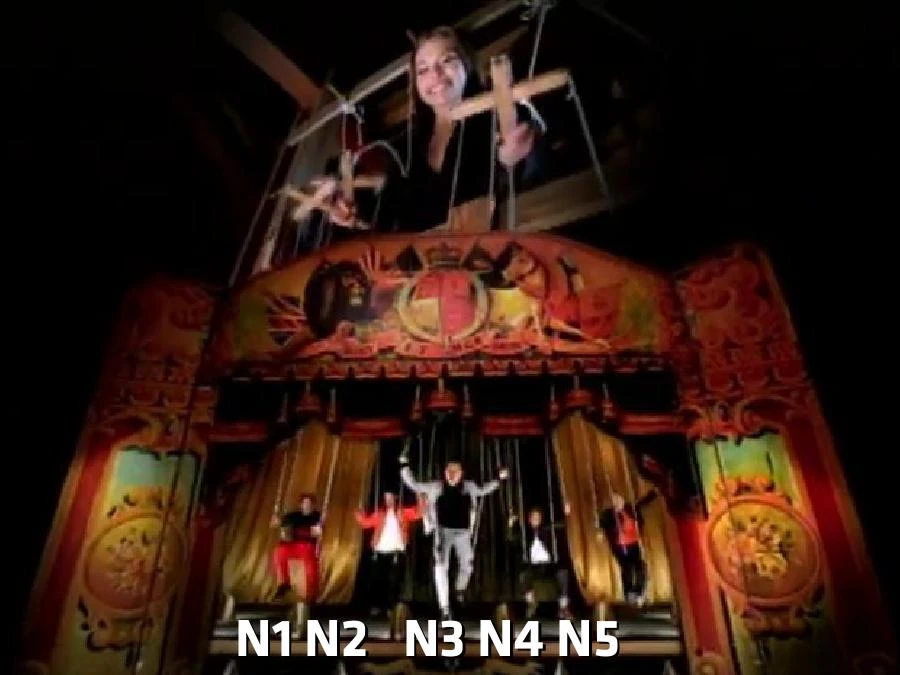

You can create partitions at home! For these demonstrations, I’m going to be running a five node cluster of Ubuntu 12.10 machines, virtualized using LXC–but you can use real computers, virtual private servers, EC2, etc. I’ve named the nodes n1, n2, n3, n4, and n5: it’s probably easiest to add these entries to /etc/hosts on your computer and on each of the nodes themselves.

We’re going to need some configuration for the cluster, and client applications to test their behavior. You can clone http://github.com/aphyr/jepsen to follow along.

To run commands across the cluster, I’m using Salticid (http://github.com/aphyr/salticid). I’ve set my ~/.salticidrc to point to configuration in the Jepsen repo:

load ENV['HOME'] + '/jepsen/salticid/*.rb'

If you take a look at this file, you’ll see that it defines a group called :jepsen, with hosts n1 … n5. The user and password for each node are both ‘ubuntu’–you’ll probably want to change this if you’re running your nodes on the public internet.

Try salticid -s salticid to see all the groups, hosts, and roles defined by the current configuration:

    $ salticid -s salticid
    Groups
      jepsen
    Hosts:
      n1
      n2
      n3
      n4
      n5
    Roles
      base
      riak
      mongo
      redis
      postgres
      jepsen
      net
    Top-level tasks

First off, let’s set up these nodes with some common software–compilers, network tools, etc.

salticid base.setup

The base role defines some basic operating system functions. base.reboot will reboot the cluster, and base.shutdown will power it off.

The jepsen role defines tasks for simulating network failures. To cause a partition, run salticid jepsen.partition. That command causes nodes n1 and n2 to drop IP traffic from n3, n4, and n5–essentially by running

iptables -A INPUT -s n3 -j DROP

iptables -A INPUT -s n4 -j DROP

iptables -A INPUT -s n5 -j DROP

That’s it, really. To check the current network status, run jepsen.status. jepsen.heal will reset the iptables chains to their defaults, resolving the partition.
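Although the DROP rules only filter packets arriving at n1 and n2, they are enough to cut the cluster in two, because any request/response exchange needs packets to flow in both directions. The toy Python model below illustrates this; it is not Jepsen's code, and the `drops`, `delivers`, and `can_converse` names are hypothetical.

```python
# Each node maps to the set of source hosts whose inbound packets it drops,
# mirroring the three iptables rules applied on n1 and n2.
drops = {
    "n1": {"n3", "n4", "n5"},
    "n2": {"n3", "n4", "n5"},
}


def delivers(src, dst):
    """True if a packet sent by src is accepted by dst."""
    return src not in drops.get(dst, set())


def can_converse(a, b):
    """A request/response exchange needs delivery in both directions."""
    return delivers(a, b) and delivers(b, a)


print(can_converse("n1", "n2"))  # same side of the partition
print(can_converse("n1", "n3"))  # opposite sides
print(can_converse("n3", "n5"))  # same side of the partition
```

Packets from n1 still reach n3 in this model, but n3's replies never make it back, so from either side the other looks unreachable.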

To simulate slow networks, or networks which drop packets, we can use tc to adjust the ethernet interface. Jepsen assumes the inter-node interface is eth0. salticid jepsen.slow will add latency to the network, making it easier to reproduce bugs which rely on a particular message being dropped. salticid jepsen.flaky will probabilistically drop messages. Adjusting the inter-node latency and lossiness simulates the behavior of real-world networks under congestion, and helps expose timing dependencies in distributed algorithms–like database replication.

A simple distributed system

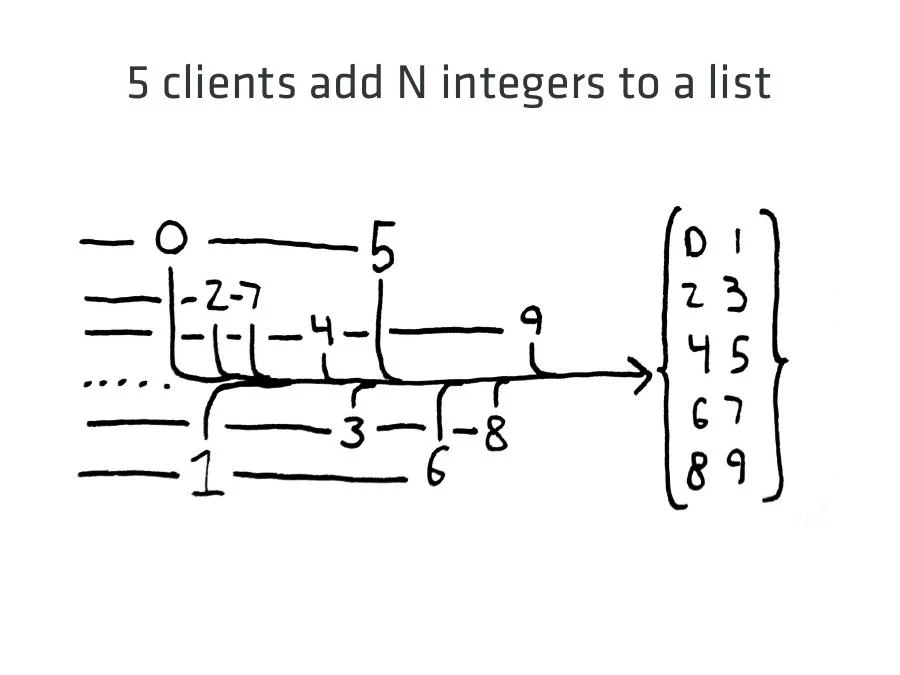

In order to test a distributed system, we need a workload–a set of clients which make requests and record their results for analysis. For these posts, we’re going to work with a simple application which writes several numbers to a list in a database. Each client app will independently write some integers to the DB. With five clients, client 0 writes 0, 5, 10, 15, …; client 1 writes 1, 6, 11, and so on.
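The interleaving can be sketched in a couple of lines of Python. This is only an illustration of the numbering scheme, not the Clojure implementation in the Jepsen repo, and `client_writes` is a made-up name.

```python
def client_writes(client_id, n_clients, n_writes):
    """Integers written by one client: client_id, client_id + n_clients, ..."""
    return [client_id + k * n_clients for k in range(n_writes)]


for client in range(5):
    print(client, client_writes(client, 5, 4))
```

Because each client works through a disjoint sequence, every integer is attempted by exactly one client, which makes it easy to attribute a lost write afterwards.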

For each write we record whether the database acknowledged the write successfully or whether there was an error. At the end of the run, we ask the database for the full set. If acknowledged writes are missing, or unacknowledged writes are present, we know that the system was inconsistent in some way: that the client application and the database disagreed about the state of the system.
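That final comparison boils down to two set differences. Here is a minimal Python sketch, assuming we have collected the acknowledged writes and the final read as plain collections of integers; the `check` function and the sample values are invented for illustration.

```python
def check(acknowledged, final):
    """Compare what clients were told succeeded with what the database holds."""
    acknowledged, final = set(acknowledged), set(final)
    return {
        # Acknowledged by the database, but missing from the final read.
        "lost": sorted(acknowledged - final),
        # Present in the final read, but never acknowledged to a client.
        "unexpected": sorted(final - acknowledged),
    }


# Made-up results from a hypothetical run.
report = check(acknowledged=[0, 1, 2, 5], final=[0, 1, 5, 6])
print(report)
```

An empty report in both categories means the clients and the database agree about which writes happened.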

In this series of blog posts, we’re going to run this app against several distributed databases, and cause partitions during its run. In each case, we’ll see how the system responds to the uncertainty of dropped messages.

I’ve written several implementations of this workload in Clojure. jepsen/src/jepsen/set_app.clj defines the application. (defprotocol SetApp ...) lists the functions an app has to implement, and (run n apps) sets up the apps and runs them in parallel, collects results, and shows any inconsistencies. Particular implementations live in src/jepsen/riak.clj, pg.clj, redis.clj, and so forth.

You’ll need a JVM and Leiningen 2 to run this code. Once you’ve installed lein, and added it to your path, we’re ready to go!

Next up on Jepsen, we take a look at how Postgres’s transaction protocol handles network failures.

‘If you write to Postgres, depending on your transaction’s consistency level, you might be able to guarantee that the write will be visible to everyone, just to yourself, or “eventually”.’

Doesn’t this depend on your readers' transaction isolation levels?