Previously in Jepsen, we discussed Redis. In this post, we’ll see MongoDB drop a phenomenal amount of data. See also: followup analyses of 2.6.7 and 3.4.0-rc3.

MongoDB is a document-oriented database with a similar distribution design to Redis. In a replica set, there exists a single writable primary node which accepts writes, and asynchronously replicates those writes as an oplog to N secondaries. However, there are a few key differences.

First, Mongo builds in its leader election and replicated state machine. There’s no separate system which tries to observe a replica set in order to make decisions about what it should do. The replica set decides among itself which node should be primary, when to step down, how to replicate, etc. This is operationally simpler and eliminates whole classes of topology problems.

Second, Mongo allows you to ask that the primary confirm successful replication of a write by its disk log, or by secondary nodes. At the cost of latency, we can get stronger guarantees about whether or not a write was successful.

What happens when a primary becomes inaccessible?

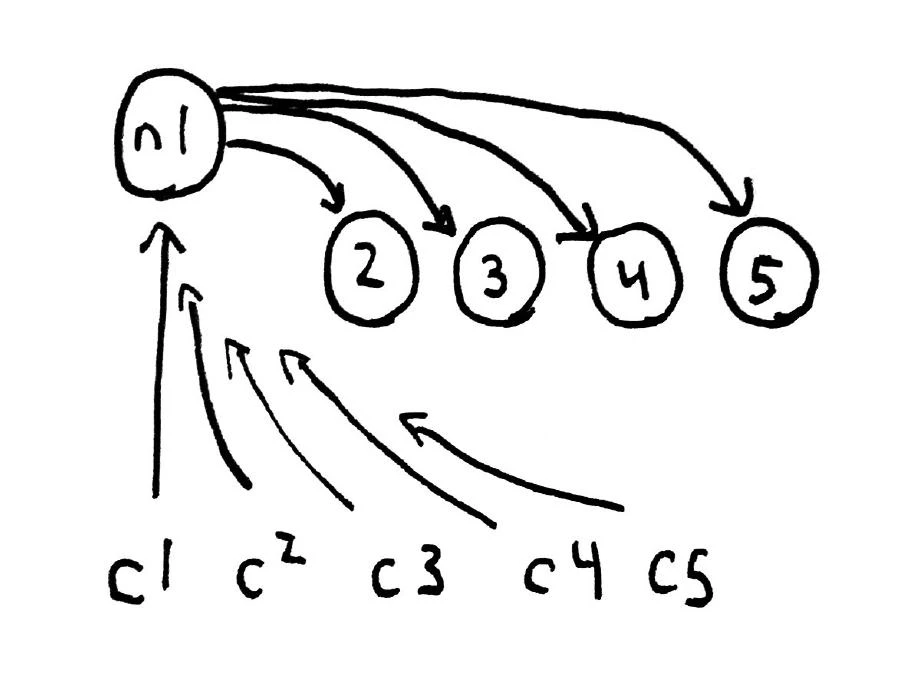

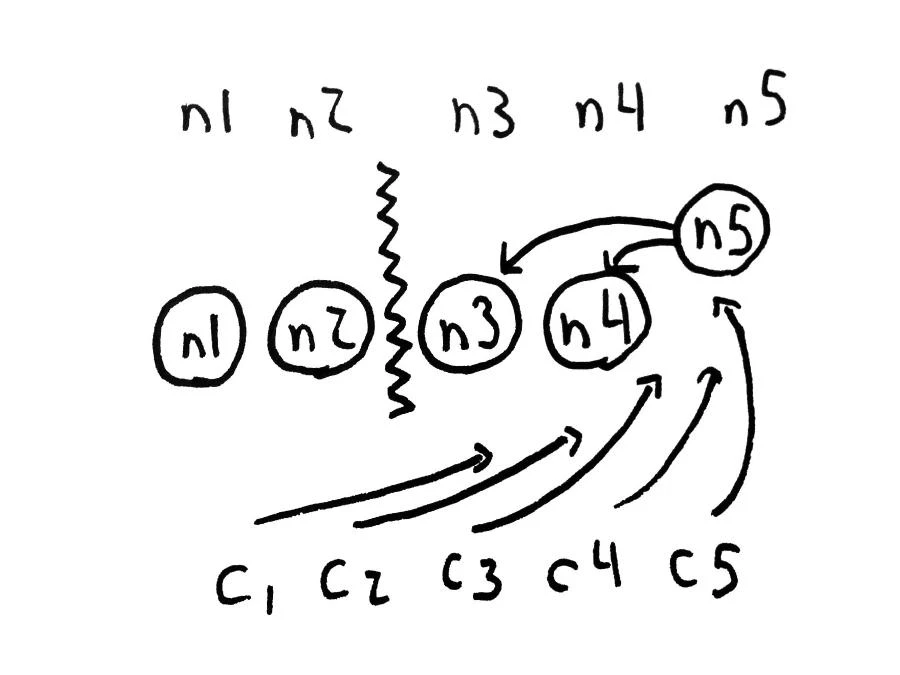

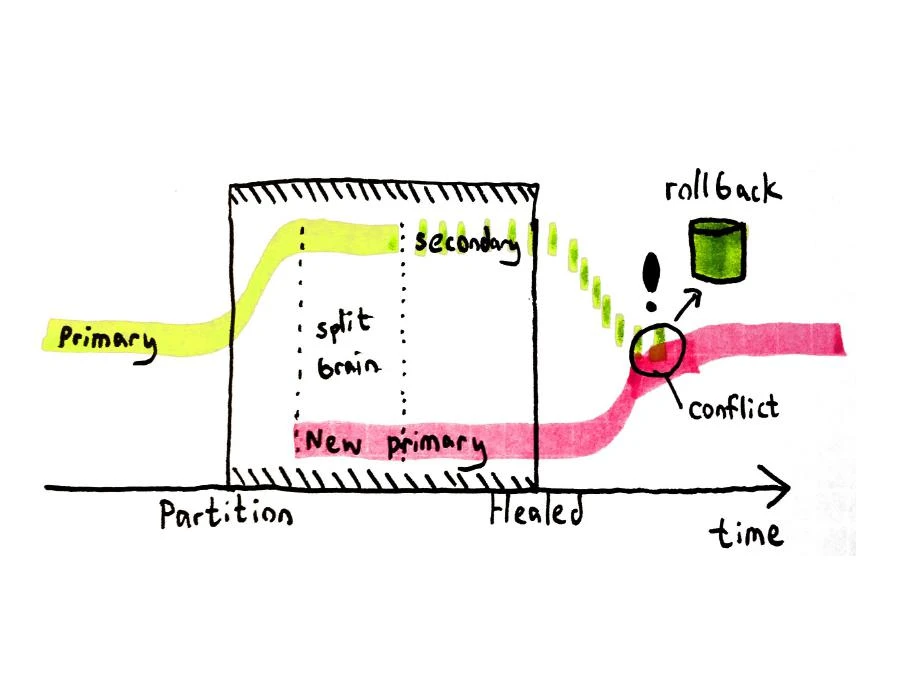

The remaining secondaries will gradually detect the failed connection and attempt to come to a consensus about what to do. If they have a majority (and remember, there can be only one majority in a cluster, so this suggests we’re heading towards a CP system), they’ll select the node with the highest optime (a monotonic clock maintained by each node) and promote it to be a new primary. Simultaneously, the minority nodes will detect that they no longer have a quorum, and demote the primary to a secondary so it can’t accept writes.

If our primary is on n1, and we cut off n1 and n2 from the rest of the cluster, we expect either n3, n4, or n5 to become the new primary. Because this architecture demotes the original primary on n1, we won’t find ourselves in the same split-brain problem we saw with Redis.

Consistency

So is MongoDB CP? There’s a popular notion that MongoDB is a CP system, including exchanges like this, where all kinds of nuanced technical assertions about strong consistency are thrown around. At the same time, Mongo’s documentation for replica sets explains carefully that Mongo may “revert operations”:

In some failover situations primaries will have accepted write operations that have not replicated to the secondaries after a failover occurs. This case is rare and typically occurs as a result of a network partition with replication lag. When this member (the former primary) rejoins the replica set and attempts to continue replication as a secondary the former primary must revert these operations or “roll back” these operations to maintain database consistency across the replica set.

“Revert” certainly doesn’t sound like linearizability to me, but that bit about “maintain[ing] database consistency” doesn’t sound so bad. What actually happens? Let’s find out!

For this example, we’ll be adding integers to a list in a MongoDB document by using the update command in a CaS loop–just like you’d use with any transactionally isolated database. Yes, we could use $addInSet, but I’m using this app as an example of atomic updates in general, and they have different oplog dynamics.

Unacknowledged

Up until recently, clients for MongoDB didn’t bother to check whether or not their writes succeeded, by default: they just sent them and assumed everything went fine. This goes about as well as you’d expect.

lein run mongo-unsafe -n 6000

salticid jepsen.partition

For a while, writes continue to complete against n1. Then we see errors as the replica set fails over, like

3186 No replica set members available in [ { address:'n3/10.10.3.101:27017', ok:true, ping:0.8954104, isMaster:false, isSecondary:true, setName:rs0, maxBsonObjectSize:16777216, },{ address:'n4/10.10.3.95:27017', ok:true, ping:0.681164, isMaster:false, isSecondary:true, setName:rs0, maxBsonObjectSize:16777216, },{ address:'n5/10.10.3.32:27017', ok:true, ping:0.6231328, isMaster:false, isSecondary:true, setName:rs0, maxBsonObjectSize:16777216, },{ address:'n2/10.10.3.52:27017', ok:true, ping:0.51316977, isMaster:false, isSecondary:true, setName:rs0, maxBsonObjectSize:16777216, },{ address:'n1/10.10.3.242:27017', ok:true, ping:0.37008655, isMaster:false, isSecondary:true, setName:rs0, maxBsonObjectSize:16777216, } ] for { "mode" : "primary"}

During this time, the majority nodes (n3, n4, n5) are still secondaries, but they’ve agreed that the old primary is inaccessible. They compare optimes and race to elect a leader:

$ salticid mongo.rs_stat

22:09:08 Starting...

22:09:08 MongoDB shell version: 2.4.1

22:09:08 connecting to: test

22:09:08 n1:27017 (not reachable/healthy) 1368940104/56

22:09:08 n2:27017 (not reachable/healthy) 1368940103/458

22:09:08 n3:27017 SECONDARY 1368940104/89

22:09:08 n4:27017 SECONDARY 1368940104/89

22:09:08 n5:27017 SECONDARY 1368940104/102

22:09:08 true

22:09:08 Finished

22:09:23 n1:27017 (not reachable/healthy) 1368941926/66

22:09:23 n2:27017 (not reachable/healthy) 1368941961/70

22:09:23 n3:27017 SECONDARY 1368941962/9

22:09:23 n4:27017 SECONDARY 1368941961/45

22:09:23 n5:27017 PRIMARY 1368941963/11

N5 wins the race, and proceeds to accept writes. If we heal the partition with salticid jepsen.heal, and wait a few seconds, the nodes will detect the fully connected cluster and the new primary will step down, to allow n1 to resume its place. Now that the cluster has stabilized, we hit enter to check how many of our writes survived:

Hit enter when ready to collect results.

Writes completed in 93.608 seconds

6000 total

5700 acknowledged

3319 survivors

2381 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

469 474 479 484 489 494 ... 3166 3168 3171 3173 3178 3183

0.95 ack rate

0.4177193 loss rate

0.0 unacknowledged but successful rate

42% write loss. Well, to some extent, this shouldn’t be surprising, because we weren’t checking to see whether the server was successful in applying our writes. Those 300 errors only came about when we tried to write to a secondary. But we never actually crashed a node, and we didn’t see any signs of a split-brain condition with two simultaneous primaries–so why did Mongo drop data?

Remember those writes that completed on n1 just after the partition started? Those writes are still on n1, but never made it to n5. N5 proceeded without them. Now n1 and n5 are comparing notes, and n1 realizes that n5’s optime is higher. N1 figures out the last point where the two agreed on the oplog, and rolls back to that point.

22:09:33 Sun May 19 05:09:33.032 [rsHealthPoll] replSet member n5:27017 is now in state PRIMARY

22:09:33 Sun May 19 05:09:33.207 [initandlisten] connection accepted from 10.10.3.95:37718 #6154 (23 connections now open)

22:09:33 Sun May 19 05:09:33.417 [rsBackgroundSync] replSet syncing to: n5:27017

22:09:33 Sun May 19 05:09:33.438 [rsBackgroundSync] replSet our last op time fetched: May 19 05:08:37:2

22:09:33 Sun May 19 05:09:33.438 [rsBackgroundSync] replset source's GTE: May 19 05:09:26:1

22:09:33 Sun May 19 05:09:33.438 [rsBackgroundSync] replSet rollback 0

22:09:33 Sun May 19 05:09:33.438 [rsBackgroundSync] replSet ROLLBACK

22:09:33 Sun May 19 05:09:33.439 [rsBackgroundSync] replSet rollback 1

22:09:33 Sun May 19 05:09:33.439 [rsBackgroundSync] replSet rollback 2 FindCommonPoint

22:09:33 Sun May 19 05:09:33.439 [rsBackgroundSync] replSet info rollback our last optime: May 19 05:08:37:2

22:09:33 Sun May 19 05:09:33.439 [rsBackgroundSync] replSet info rollback their last optime: May 19 05:09:33:32

22:09:33 Sun May 19 05:09:33.439 [rsBackgroundSync] replSet info rollback diff in end of log times: -56 seconds

22:09:35 Sun May 19 05:09:33.621 [initandlisten] connection accepted from 10.10.3.32:59066 #6155 (24 connections now open)

22:09:35 Sun May 19 05:09:35.221 [rsBackgroundSync] replSet rollback found matching events at May 19 05:08:24:66

22:09:35 Sun May 19 05:09:35.221 [rsBackgroundSync] replSet rollback findcommonpoint scanned : 3798

22:09:35 Sun May 19 05:09:35.221 [rsBackgroundSync] replSet replSet rollback 3 fixup

22:09:35 Sun May 19 05:09:35.222 [rsBackgroundSync] replSet rollback 3.5

22:09:35 Sun May 19 05:09:35.222 [rsBackgroundSync] replSet rollback 4 n:1

22:09:35 Sun May 19 05:09:35.222 [rsBackgroundSync] replSet minvalid=May 19 05:09:35 51985e8f:19

22:09:35 Sun May 19 05:09:35.222 [rsBackgroundSync] replSet rollback 4.6

22:09:35 Sun May 19 05:09:35.223 [rsBackgroundSync] replSet rollback 4.7

22:09:35 Sun May 19 05:09:35.223 [rsBackgroundSync] replSet rollback 5 d:0 u:1

22:09:35 Sun May 19 05:09:35.224 [rsBackgroundSync] replSet rollback 6

22:09:35 Sun May 19 05:09:35.236 [rsBackgroundSync] replSet rollback 7

22:09:35 Sun May 19 05:09:35.238 [rsBackgroundSync] replSet rollback done

22:09:35 Sun May 19 05:09:35.238 [rsBackgroundSync] replSet RECOVERING

During a rollback, all the writes the old primary accepted after the common point in the oplog are removed from the database and written to a BSON file in Mongo’s rollbacks directory. If you’re a sysadmin, you could go look at the rollback files to try and reconstruct the writes that the database dropped.

Well, theoretically. In my tests, it only does this in 1 out of 5 runs or so. Mostly, it just throws those writes away entirely: no rollback files, no nothing. I don’t really know why.

This leads to an important discovery: it doesn’t matter whether or not there were two primaries at the same time. We can still get conflicting writes if the old primary’s state is causally unconnected from the new primary. A primary/secondary system, by itself, is not sufficient. We have to actually track causality on the writes themselves in order to be CP. Otherwise, newly elected primaries could diverge from the old one.

Safe

Aha! But that was with the old “unsafe” write concern! We should use the Safe write concern!

lein run mongo-safe -n 6000

...

6000 total

5900 acknowledged

3692 survivors

2208 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

458 463 468 473 478 483 ... 3075 3080 3085 3090 3095 3100

0.98333335 ack rate

0.3742373 loss rate

0.0 unacknowledged but successful rate

Replicas-safe

WriteConcern.SAFE only verifies that the write was accepted by the primary. We need to make sure that the replicas have received our write before considering it a success.

lein run mongo-replicas-safe -n 6000

...

6000 total

5695 acknowledged

3768 survivors

1927 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

712 717 722 727 732 737 ... 2794 2799 2804 2809 2814 2819

0.94916666 ack rate

0.338367 loss rate

0.0 unacknowledged but successful rate

Mongo still rolled back our writes. Why? Because REPLICAS_SAFE only checks to see if the write took place against two replicas. Our cluster has five nodes, so it’s possible for writes to exist only on n1 and n2. A new primary can be elected without having seen our write. We need to wait until our write has been acknowledged by a majority of nodes.

Majority

lein run mongo -n 6000

Using WriteConcern.MAJORITY, we notice an improvement! When we cause the partition, writes pause immediately. The clients are blocked, waiting for the primary to confirm acknowledgement on nodes which will never respond. Eventually they time out. This is a hallmark of a CP system: we shouldn’t be able to make progress without talking to a majority of nodes.

Writes completed in 157.425 seconds

6000 total

5700 acknowledged

5701 survivors

2 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

(596 598)

3 unacknowledged writes found! ヽ(´ー`)ノ

(562 653 3818)

0.95 ack rate

1.754386E-4 loss rate

5.2631577E-4 unacknowledged but successful rate

So 3 writes which supposedly failed actually succeeded. That’s not so bad. On the other hand, Mongo still dropped two “successful” writes. Writes which were supposedly acknowledged by a majority of nodes.

I’ve been talking with 10gen, and they think this is a bug. When the network partitions, the server just checks off the “OK” field for the client’s WriteConcern request, and sends it back. The client sees the “OK” message and… sensibly presumes the write was OK. This should be fixed in master, but is still present in 2.4.3, the most recent release.

Even if this bug is fixed, Mongo still isn’t consistent. Those three writes which “failed” but showed up in the result set? Those are writes which were replicated to a majority node just prior to the partition, but never had the chance to acknowledge. Single writes are not atomic without a proper consensus protocol: those failed writes could materialize never, now, or some time in the future; potentially overwriting valid data.

Strategies for working with Mongo

On the one hand, Mongo advocates usually tell me “but network partitions are exceedingly rare in practice.” Then I talk to Mongo users who report their cluster fails over on a weekly basis. One thing to keep in mind is that heavy load–like seasonal writes, recovering from a crash, or performing a rollback–can slow a node down to the point where other nodes declare it dead. This is a partition. I’ve seen my test cluster perform dozens of rollbacks as nodes go unavailable attempting to elect a new primary. You should probably instrument your cluster to watch for these events in production.

As we’ve discussed before, one option is simply to accept data loss. Not all applications need consistency.

At the same time, you should watch those rollback files. Sometimes they don’t appear even though they’re supposed to, and not all data types will actually be rolled back. Conflicts in capped collections, for example, appear to simply discard all data in the collection past the conflict point by design.

People use capped collections for distributed queues. Think about that for a minute.

Moreover, a rollback file doesn’t give you enough information to actually reconstruct the correct state of the system–at least in general. It’s just a snapshot of “some state” the database had to discard. Because there’s no well-defined ordering for these writes, you’ll have to decide what that means for your particular data structures. If you can structure your documents as CRDTs and write a merge function, you’ll be able to safely merge. If there’s no conflicting copy of the document in the database, and you never delete those kinds of documents, you can restore it automatically. Immutable records can always be recovered, too.

Finally, you can drastically reduce the probability of write loss by using WriteConcern.MAJORITY. This is gonna impose a big performance hit. That’s another hallmark of more-available CP systems.

To recap: MongoDB is neither AP nor CP. The defaults can cause significant loss of acknowledged writes. The strongest consistency offered has bugs which cause false acknowledgements, and even if they’re fixed, doesn’t prevent false failures.

In the next post, we’ll talk about a database which emphasizes availability and partition tolerance: Riak.

“So 3 writes which supposedly failed actually succeeded. That’s not so bad.”

Actually, this can happen in any kind of cluster. In fact, it can happen even in single server failure. It simply means that there is a short moment where data is committed (to disk, to a cluster… any definition of committed) but acknowledgement of this has not yet been sent back to client, at which point the server (or client) crashes. So the commit has happened properly, you just didn’t hear about it.

Admittedly, with MongoDB w=majority this is probably more likely to happen than in some other systems, ie the “moment” is longer.