In the last Jepsen post, we learned about NuoDB. Now it’s time to switch gears and discuss Kafka. Up next: Cassandra.

Kafka is a messaging system which provides an immutable, linearizable, sharded log of messages. Throughput and storage capacity scale linearly with nodes, and thanks to some impressive engineering tricks, Kafka can push astonishingly high volume through each node, often saturating disk, network, or both. Consumers use Zookeeper to coordinate their reads over the message log, providing efficient at-least-once delivery and some other nice properties, like replayability.

In the upcoming 0.8 release, Kafka is introducing a new feature: replication. Replication enhances the durability and availability of Kafka by duplicating each shard’s data across multiple nodes. In this post, we’ll explore how Kafka’s proposed replication system works, and see a new type of failure.

Here’s a slide from Jun Rao’s overview of the replication architecture. In the context of the CAP theorem, Kafka claims to provide both serializability and availability by sacrificing partition tolerance. Kafka can do this because LinkedIn’s brokers run in a datacenter, where partitions are rare.

Note that the claimed behavior isn’t impossible: Kafka could be a CP system, providing “bytewise identical replicas” and remaining available whenever, say, a majority of nodes are connected. It just can’t be fully available if a partition occurs. On the other hand, we saw that NuoDB, in purporting to refute the CAP theorem, actually sacrificed availability. What happens to Kafka during a network partition?

Design

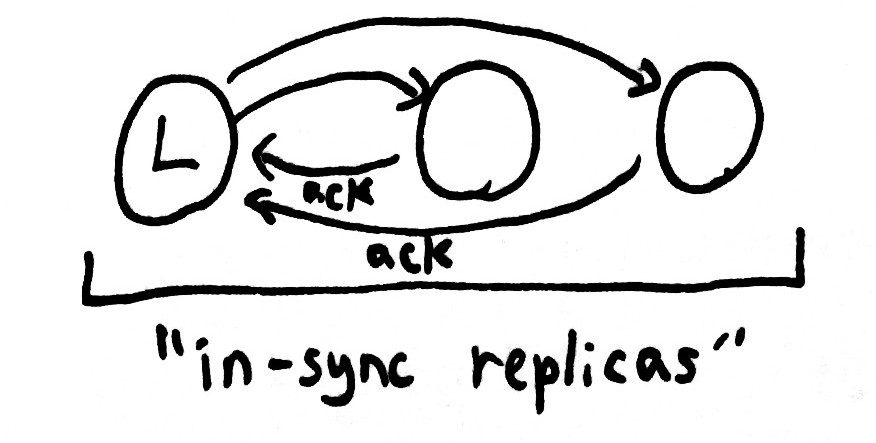

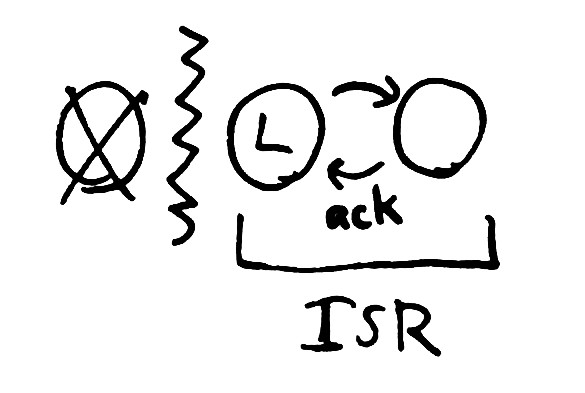

Kafka’s replication design uses leaders, elected via Zookeeper. Each shard has a single leader, which maintains a set of in-sync replicas (the ISR): all the nodes which are up to date with the leader’s log and actively acknowledging new writes. Every write goes through the leader and is propagated to every node in the ISR. Once all nodes in the ISR have acknowledged the request, the leader considers it committed and can acknowledge it to the client.

When a node fails, the leader detects that writes to it have timed out and removes that node from the ISR in Zookeeper. Subsequent writes only have to be acknowledged by the healthy nodes still in the ISR, so we can tolerate a few failing or inaccessible nodes safely.
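To make the commit rule and the ISR shrinking concrete, here is a minimal single-process sketch in Python. The `Leader` class, its `write` method, and the `unresponsive` parameter are hypothetical names for illustration only. This is a toy model under stated assumptions, not Kafka's implementation: "timed out" is simulated by passing in the set of followers that fail to respond.

```python
class Leader:
    """Toy model of one shard's leader and its in-sync replica set (ISR)."""

    def __init__(self, name, followers):
        self.name = name
        self.log = []                               # the leader's own log
        self.replicas = {f: [] for f in followers}  # each follower's log
        self.isr = {name, *followers}               # the leader is always in its ISR

    def write(self, value, unresponsive=()):
        """Replicate `value` to the ISR; return True if the write is committed.

        `unresponsive` stands in for followers whose acknowledgements time out.
        """
        self.log.append(value)
        followers = self.isr - {self.name}
        timed_out = followers & set(unresponsive)
        for follower in followers - timed_out:
            self.replicas[follower].append(value)
        if timed_out:
            # Kafka would record the smaller ISR in Zookeeper; here we simply
            # drop the slow followers and report the write as failed.
            self.isr -= timed_out
            return False
        # Every remaining ISR member holds the entry, so it counts as committed.
        return True


leader = Leader("n1", ["n2", "n3"])
assert leader.write(1)                                 # on n1, n2, and n3
assert not leader.write(2, unresponsive={"n2", "n3"})  # timeout shrinks the ISR
assert leader.isr == {"n1"}
assert leader.write(3)                                 # "committed" on n1 alone
```

The last write is acknowledged even though the followers' logs still contain only the first entry. That single-node ISR is the state the rest of this post is concerned with.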

So far, so good; this is about what you’d expect from a synchronous replication design. But then there’s this claim from the replication blog posts and wiki: “with f nodes, Kafka can tolerate f-1 failures”.

This is notable because most CP systems only claim tolerance to (n-1)/2 failures, rounded down; i.e. a majority of nodes must be connected and healthy in order to continue. LinkedIn says that, in their operational experience, majority quorums are not reliable enough, and that tolerating the loss of all but one node is an important aspect of the design.
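The gap between the two claims is a little arithmetic. The sketch below assumes a majority quorum of `n // 2 + 1` nodes and takes the "f nodes tolerate f-1 failures" claim at face value. The function names are illustrative only.

```python
def majority_tolerance(n):
    """Failures a majority-quorum system of n nodes can survive."""
    quorum = n // 2 + 1
    return n - quorum


def all_but_one_tolerance(n):
    """Failures tolerated under the 'f nodes tolerate f-1 failures' claim."""
    return n - 1


for n in (3, 5, 7):
    print(n, majority_tolerance(n), all_but_one_tolerance(n))
# 3 1 2
# 5 2 4
# 7 3 6
```

The all-but-one figure is only reachable if the system keeps accepting writes that a single surviving node has seen.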

Kafka attains this goal by allowing the ISR to shrink to just one node: the leader itself. In this state, the leader is acknowledging writes which have only been persisted locally. What happens if the leader then loses its Zookeeper claim?

The system cannot safely continue–but the show must go on. In this case, Kafka holds a new election and promotes any remaining node–which could be arbitrarily far behind the original leader. That node begins accepting requests and replicating them to the new ISR.

When the original leader comes back online, we have a conflict. The old leader’s log is identical to the new leader’s up to some point, after which they diverge. Two possibilities come to mind. We could preserve both sets of writes, perhaps appending the old leader’s writes to the new leader’s log, but this would violate the linear ordering property Kafka aims to preserve. Alternatively, we could drop the old leader’s conflicting writes altogether. This means destroying committed data.
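Both reconciliation options can be sketched on toy logs. In this Python sketch, logs are plain lists and the helper names (`common_prefix_len`, `reconcile_append`, `reconcile_truncate`) are hypothetical. It illustrates the tradeoff described above; it does not show how Kafka actually repairs a diverged replica.

```python
def common_prefix_len(a, b):
    """Number of leading entries two logs agree on."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def reconcile_append(old, new):
    """Option 1: keep the old leader's divergent suffix by appending it.

    Nothing is discarded, but entries no longer sit at the offsets at which
    they were acknowledged, so the log's single linear order is broken.
    """
    k = common_prefix_len(old, new)
    return new + old[k:]


def reconcile_truncate(old, new):
    """Option 2: adopt the new leader's log and report what gets discarded."""
    k = common_prefix_len(old, new)
    return list(new), old[k:]


old = ["w1", "w2", "w3"]  # w2 and w3 were acknowledged by the isolated old leader
new = ["w1", "w4"]        # the new leader only saw w1, then accepted w4
merged = reconcile_append(old, new)           # w2 moves from offset 1 to offset 2
kept, dropped = reconcile_truncate(old, new)  # dropped holds w2 and w3
```

Neither option is safe. Appending reorders acknowledged entries, and truncating discards them.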

In order to see this failure mode, two things have to happen:

- The ISR must shrink such that some node (the new leader) is no longer in the ISR.

- All nodes in the ISR must lose their Zookeeper connection.

For instance, a lossy NIC which drops some packets but not others might isolate a leader from its Kafka followers, but break the Zookeeper connection slightly later. Or the leader could be partitioned from the other Kafka nodes by a network failure, and then crash, lose power, or be restarted by an administrator. Or there could be correlated failures across multiple nodes, though this is less likely.

In short, two well-timed failures (or, depending on how you look at it, one complex failure) on a single node can cause the loss of arbitrary writes in the proposed replication system.

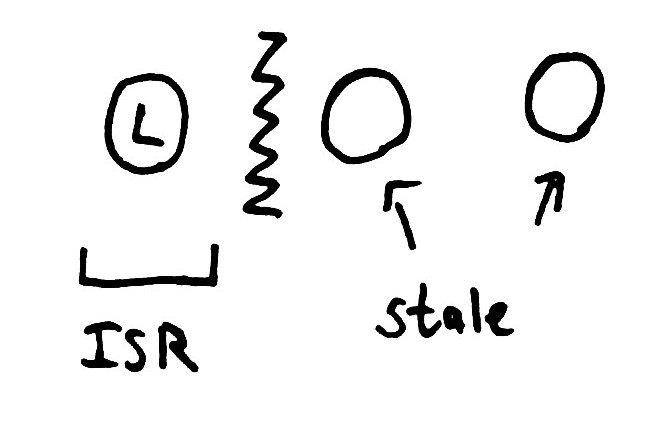

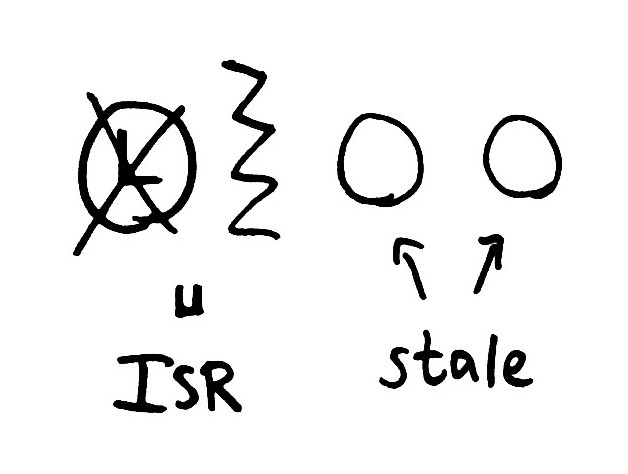

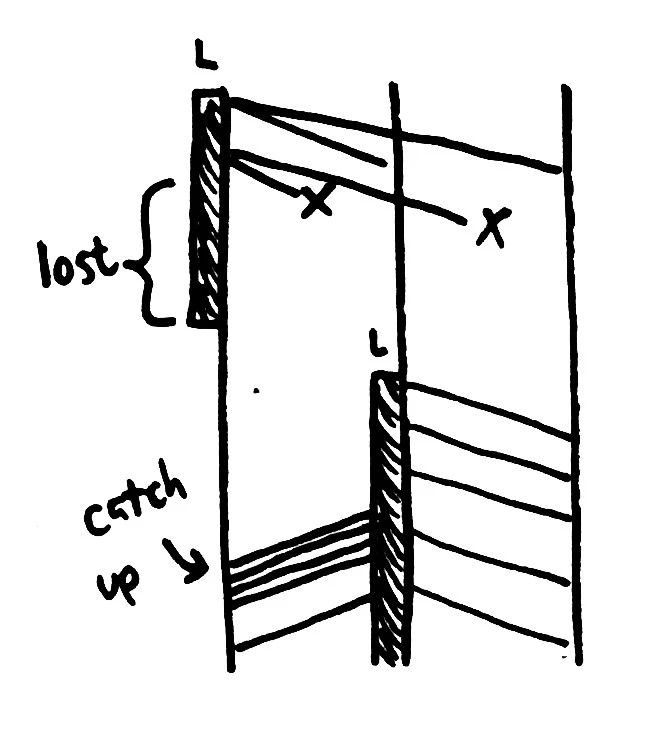

I want to rephrase this, because it’s a bit tricky to understand. In the causality diagram above, the three vertical lines represent three distinct nodes, and time flows downwards. Initially, the leader (L) can replicate requests to its followers in the ISR. Then a partition occurs, and writes time out. The leader detects the failure and removes nodes 2 and 3 from the ISR, then acknowledges some log entries written only to itself.

When the leader loses its Zookeeper connection, the middle node becomes the new leader. What data does it have? We can trace its line upwards in time to see that it only knows about the very first write made. All other writes on the original leader are causally disconnected from the new leader. This is the reason data is lost: the causal invariant between leaders is violated by electing a node from outside the ISR once no ISR member is available.

I suspected this problem existed from reading the JIRA ticket, but after talking it through with Jay Kreps I wasn’t convinced I understood the system correctly. Time for an experiment!

Results

First, I should mention that Kafka has some parameters that control write consistency. The default behaves like MongoDB: writes are not replicated prior to acknowledgement, which allows for higher throughput at the cost of safety. In this test, we’ll be running in synchronous mode:

```clojure
(producer/producer
  {"metadata.broker.list"     (str (:host opts) ":9092")
   "request.required.acks"    "-1" ; all in-sync brokers
   "producer.type"            "sync"
   "message.send.max.retries" "1"
   "connect.timeout.ms"       "1000"
   "retry.backoff.ms"         "1000"
   "serializer.class"         "kafka.serializer.DefaultEncoder"
   "partitioner.class"        "kafka.producer.DefaultPartitioner"})
```
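The `request.required.acks` setting decides which brokers must hold a message before the producer sees success. The Python sketch below assumes the Kafka 0.8 meanings of 0 (don't wait), 1 (leader has it) and -1 (all in-sync replicas have it). The function name is hypothetical, and other values are deliberately left unmodeled.

```python
def brokers_holding_write_at_ack(acks, leader, isr):
    """Brokers that must hold a message before the producer sees success.

    Models only the 0, 1 and -1 settings of request.required.acks.
    """
    if acks == 0:   # producer does not wait for any broker
        return set()
    if acks == 1:   # leader has written the message to its local log
        return {leader}
    if acks == -1:  # every member of the current ISR has the message
        return set(isr)
    raise ValueError(f"acks={acks} is not modeled here")


full = brokers_holding_write_at_ack(-1, "n1", {"n1", "n2", "n3"})
shrunk = brokers_holding_write_at_ack(-1, "n1", {"n1"})
assert shrunk == brokers_holding_write_at_ack(1, "n1", {"n1"})
```

The final assertion captures the problem. Once the ISR has shrunk to the leader alone, "all in-sync brokers" guarantees no more durability than a leader-only acknowledgement.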

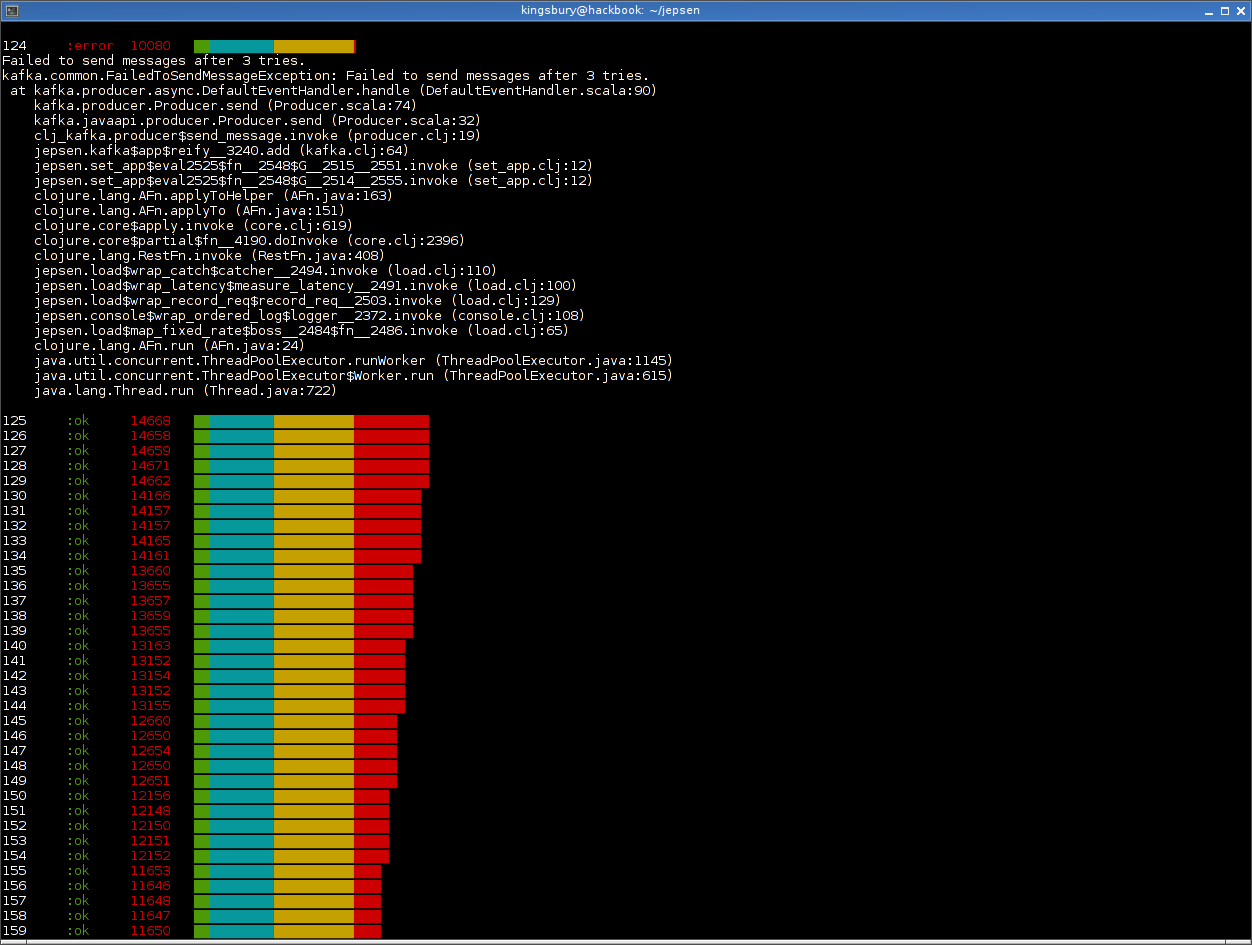

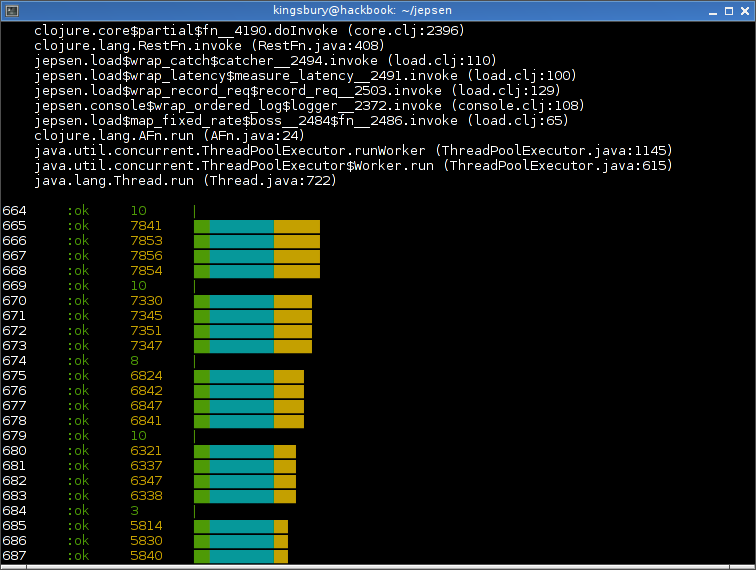

With that out of the way, our writes should be fully acknowledged by the ISR once the client returns from a write operation successfully. We’ll enqueue a series of integers into the Kafka cluster, then use iptables to isolate a leader from the other Kafka nodes. Latencies spike initially, while the leader waits for the missing nodes to respond.

A few requests may fail, but the ISR shrinks in a few seconds and writes begin to succeed again.

We’ll allow that leader to acknowledge writes independently, for a time. While these writes look fine, they’re actually only durable on a single node–and could be lost if a leader election occurs.

Then we totally partition the leader. ZK detects the leader’s disconnection, and the remaining nodes promote a new leader, causing data loss. Again, a brief latency spike:

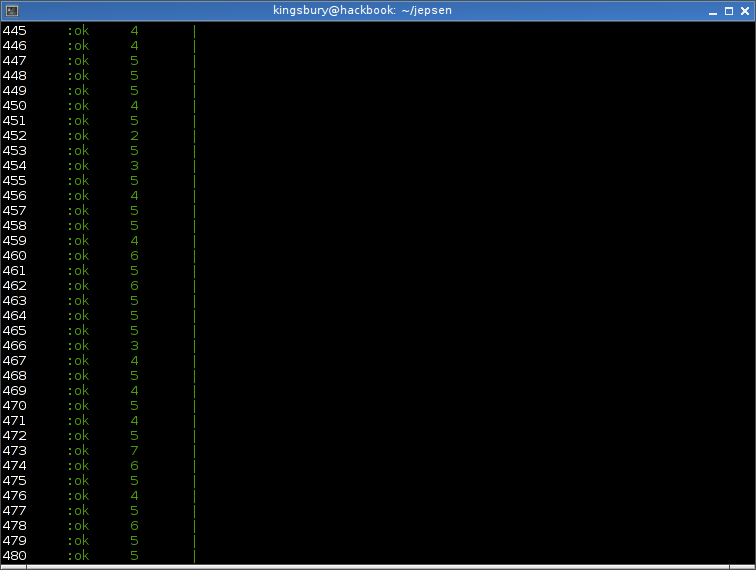

At the end of the run, Kafka typically acknowledges 98–100% of writes. However, half of those writes (all those made during the partition) are lost.

```
Writes completed in 100.023 seconds

1000 total
987 acknowledged
468 survivors
520 acknowledged writes lost! (╯°□°)╯︵ ┻━┻
130 131 132 133 134 135 ... 644 645 646 647 648 649
1 unacknowledged writes found! ヽ(´ー`)ノ
(126)
0.987 ack rate
0.52684903 loss rate
0.0010131713 unacknowledged but successful rate
```
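Here is a small Python sketch of how these summary rates can be derived from sets of written integers. The function name `summarize` and the toy data are hypothetical. The choice of acknowledged writes as the denominator is an inference from the printed numbers, not from the test's source.

```python
def summarize(attempted, acknowledged, survivors):
    """Jepsen-style summary rates from sets of written integers."""
    lost = acknowledged - survivors           # acked, but missing at the end
    unacked_found = survivors - acknowledged  # not acked, but present anyway
    return {
        "ack rate": len(acknowledged) / len(attempted),
        "loss rate": len(lost) / len(acknowledged),
        "unacknowledged but successful rate": len(unacked_found) / len(acknowledged),
    }


# Toy data: 10 writes, 9 acknowledged, 4 acked writes survive plus 1 unacked one.
rates = summarize(set(range(10)), set(range(9)), {0, 1, 2, 3, 9})
```

Plugging in the run's counts reproduces the output above: 987 acknowledged writes, 520 of them lost, and 1 unacknowledged survivor. 520/987 and 1/987 match the printed loss and unacknowledged-but-successful rates. The 468 survivors are the 467 acknowledged writes that remained plus write 126.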

Discussion

Kafka’s replication claimed to be CA, but in the presence of a partition, it threw away an arbitrarily large volume of committed writes. It claimed tolerance to f-1 failures, but a single node could cause catastrophe. How could we improve the algorithm?

All redundant systems have a breaking point. If you lose all N nodes in a system which writes to N nodes synchronously, it’ll lose data. If you lose 1 node in a system which writes to 1 node synchronously, that’ll lose data too. There’s a tradeoff to be made between how many nodes are required for a write, and the number of faults which cause data loss. That’s why many systems offer per-request settings for durability. But what choice is optimal, in general? If we wanted to preserve the all-nodes-in-the-ISR model, could we constrain the ISR in a way which is most highly available?

It turns out there is a maximally available number. From Peleg and Wool’s overview paper on quorum consensus:

> It is shown that in a complete network the optimal availability quorum system is the majority (Maj) coterie if p < 1/2.

In particular, given a uniform element failure probability p smaller than 1/2 (which realistically describes most homogeneous clusters), the worst quorum system is the Single coterie (one failure causes unavailability), and the best is the simple Majority (provided the cohort size is small). Because Kafka keeps only a small number of replicas (on the order of 1-10), Majority quorums are provably optimal in their availability characteristics.

You can reason about this from the extreme cases. If we allow the ISR to shrink to one node, a single additional failure, with probability p, can cause data loss. If we require the ISR to include all n nodes, any node failure makes the system unavailable for writes, which happens with probability 1 - (1-p)^n, larger than p. In between, a write quorum is lost only when several nodes fail. If failures are independent, the probability that two specific nodes both fail is p^2, much smaller than p. This is why bounding the ISR size in the middle gives the lowest probability of failure.
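We can put numbers on these extreme cases with a simplified model. Each of n nodes fails independently with probability p, and a rule "fails" when its write quorum can no longer be formed, whether that shows up as lost data or as blocked writes. The strategy labels and function names in this Python sketch are hypothetical. It compares three threshold rules and is only an illustration of the Peleg and Wool result, not a proof of it.

```python
from math import comb


def p_at_least_failed(n, k, p):
    """Probability that at least k of n nodes are down, each independently with prob. p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def p_no_quorum(strategy, n, p):
    """Probability that a write quorum cannot be formed under a given rule."""
    if strategy == "single":    # one designated node must be up (an ISR of one)
        return p
    if strategy == "majority":  # n // 2 + 1 nodes must be up, so n - n // 2 failures break it
        return p_at_least_failed(n, n - n // 2, p)
    if strategy == "all":       # every node must be up (an ISR of all n)
        return p_at_least_failed(n, 1, p)
    raise ValueError(f"unknown strategy: {strategy}")


for strategy in ("single", "majority", "all"):
    print(strategy, p_no_quorum(strategy, 5, 0.1))
```

For n = 5 and p = 0.1, this gives roughly 0.1 for a single node, 0.0086 for a majority, and 0.41 for all nodes. The middle option beats both extremes by an order of magnitude.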

I made two recommendations to the Kafka team:

- Ensure that the ISR never goes below N/2 nodes. This reduces the probability of a single node failure causing the loss of committed writes.

- In the event that the ISR becomes empty, block and sound an alarm instead of silently dropping data. It’s OK to make this configurable, but as an administrator, you probably want to be aware when a datastore is about to violate one of its constraints, and make the decision yourself. It might be better to wait until an old leader can be recovered. Or perhaps the administrator would like a dump of the to-be-dropped writes, which could be merged back into the new state of the cluster.
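Here is a minimal Python sketch of what these two recommendations might look like as guard checks. The exception classes `IsrTooSmall` and `NoInSyncCandidate`, and the functions `check_write` and `elect`, are hypothetical names invented for illustration. They are not part of Kafka's API, and the sketch does not describe how the Kafka team chose to implement anything.

```python
class IsrTooSmall(Exception):
    """Hypothetical error: refuse the write rather than shrink the ISR further."""


class NoInSyncCandidate(Exception):
    """Hypothetical alarm: no live ISR member can take over as leader."""


def check_write(isr, n):
    """Recommendation 1: only accept writes while the ISR holds at least n/2 nodes."""
    if len(isr) < n / 2:
        raise IsrTooSmall(f"ISR {sorted(isr)} is below {n}/2 replicas")


def elect(isr, live):
    """Recommendation 2: promote only a live ISR member; otherwise block and alert."""
    candidates = sorted(set(isr) & set(live))
    if not candidates:
        raise NoInSyncCandidate("no in-sync replica is reachable; operator decision needed")
    # Under the all-ISR commit rule, any ISR member holds every committed write.
    return candidates[0]


check_write({"n1", "n2"}, 3)          # 2 >= 1.5, so the write is accepted
try:
    elect({"n1"}, live={"n2", "n3"})  # only n1 was in sync, and it is unreachable
except NoInSyncCandidate as alarm:
    print("blocked:", alarm)
```

In the scenario from the Results section, this model would refuse to promote the out-of-sync node. It would stop and wait for an operator instead of discarding the old leader's writes.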

Finally, remember that this is pre-release software; we’re discussing a candidate design, not a finished product. Jay Kreps and I discussed the possibility of a “stronger safety” mode which does bound the ISR and halts when it becomes empty–if that mode makes it into the next release, and strong safety is important for your use case, check that it is enabled.

Remember, Jun Rao, Jay Kreps, Neha Narkhede, and the rest of the Kafka team are seasoned distributed systems experts–they’re much better at this sort of thing than I am. They’re also contending with nontrivial performance and fault-tolerance constraints at LinkedIn–and those constraints shape the design space of Kafka in ways I can’t fully understand. I trust that they’ve thought about this problem extensively, and will make the right tradeoffs for their (and hopefully everyone’s) use case. Kafka is still a phenomenal persistent messaging system, and I expect it will only get better.

The next post in the Jepsen series explores Cassandra, an AP datastore based on the Dynamo model.
