In a recent blog post, antirez detailed a new operation in Redis: WAIT. WAIT is proposed as an enhancement to Redis’ replication protocol to reduce the window of data loss in replicated Redis systems; clients can block awaiting acknowledgement of a write to a given number of nodes (or time out if the given threshold is not met). The theory here is that positive acknowledgement of a write to a majority of nodes guarantees that write will be visible in all future states of the system.
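To make the "majority" arithmetic concrete, here is a minimal sketch of how many replica acknowledgements a client would ask WAIT for. The function names `majority` and `wait-replicas` are mine, not part of Redis or of the proposal, and the sketch assumes the primary counts as one copy of the write.

```clojure
(defn majority
  "Smallest number of nodes that forms a strict majority of an n-node cluster."
  [n]
  (inc (quot n 2)))

(defn wait-replicas
  "Number of replica acks to request from WAIT so that the primary plus
  those replicas form a majority. Assumes the primary holds one copy."
  [n]
  (dec (majority n)))

(wait-replicas 3) ; => 1
(wait-replicas 5) ; => 2
```

This agrees with the figures used in the discussion: WAIT 1 for three nodes, WAIT 2 for five.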

As I explained earlier, any asynchronously replicated system with primary-secondary failover allows data loss. Optional synchronous replication, antirez proposes, should make it possible for Redis to provide strong consistency for those operations.

WAIT means that if you run three nodes A, B, C where every node contains a Sentinel instance and a Redis instance, and you “WAIT 1” after every operation to reach the majority of slaves, you get a consistent system.

WAIT can be also used, by improving the failover procedure, in order to have a strong consistent system (no writes to the older master from the point the failure detection is positive, to the end of the failover when the configuration is updated, or alternative, disconnect the majority of slaves you can reach during the failure detection so that every write will fail during this time).

Antirez later qualified these claims:

I understand this not the “C” consistency of “CAP” but, before: the partition with clients and the (old) master partitioned away would receive writes that gets lost. after: under certain system models the system is consistent, like if you assume that crashed instances never start again.

Of course, the existence of synchronous replication does not prove that the system is linearizable; only some types of failover preserve the ordering of writes.

As I showed in Call me maybe: Redis, Redis Sentinel will enter split-brain during network partitions, causing significant windows of data loss. Exactly how much data loss depends on the sentinel configuration and the failure topology. Antirez finally suggested that if we replace Redis Sentinel with a strongly consistent coordination service for failover, Redis WAIT could provide full linearizability.

The failover proposal

In a five-node cluster, assume every write is followed by WAIT 2 to ensure that a majority of nodes have received the write. In the event of a failure, a strong external coordinator goes through the following election process:

- Totally partition the old primary P1.

- Of all reachable nodes, identify the node with the highest replication offset. Let that node be P2.

- Promote P2.

- Inform all reachable nodes that they are to follow P2.

- Have all reachable clients switch to the new primary.
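The steps above can be sketched as a pure function over a map of node states. This is only a model, assuming each node is described by a hypothetical `{:offset n, :reachable? bool}` map; it talks to no real Redis instance, and the final step (redirecting clients) is left out.

```clojure
(defn elect
  "Pure model of the proposed election. `nodes` maps a node name to
  {:offset n, :reachable? bool}. Returns the new primary and a
  follower->primary map, or nil if no candidate is reachable."
  [nodes old-primary]
  (let [candidates (->> (dissoc nodes old-primary)              ; step 1: exclude P1
                        (filter (fn [[_ n]] (:reachable? n)))   ; only reachable nodes
                        (into {}))]
    (when (seq candidates)
      (let [p2 (key (apply max-key (comp :offset val) candidates))] ; step 2
        {:primary   p2                                              ; step 3
         :followers (zipmap (remove #{p2} (keys candidates))        ; step 4
                            (repeat p2))}))))

(elect {:n1 {:offset 100 :reachable? false}
        :n2 {:offset 90  :reachable? true}
        :n3 {:offset 100 :reachable? true}
        :n4 {:offset 95  :reachable? true}
        :n5 {:offset 80  :reachable? true}}
       :n1)
;; => {:primary :n3, :followers {:n2 :n3, :n4 :n3, :n5 :n3}}
```

Note that nothing in this model stops the old primary from continuing to accept writes, and the only input to the decision is the replication offset.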

There are several serious problems with this design. I hinted at these issues in the mailing list with limited success. Kelly Sommers pointed out repeatedly that this design has the same issues as Cassandra’s CL.ALL. Replication alone does not ensure linearizability; we have to be able to roll back operations which should not have happened in the first place. If those failed operations can make it into our consistent timeline in an unsafe way, perhaps corrupting our successful operations, we can lose data.

… surprisingly I think that transactional rollbacks are totally irrelevant.

Ultimately I was hoping that antirez and other contributors might realize why their proposal for a custom replication protocol was unsafe nine months ago, and abandon it in favor of an established algorithm with a formal model and a peer-reviewed proof, but that hasn’t happened yet. Redis continues to accrete homegrown consensus and replication algorithms without even a cursory nod to formal analysis.

OK, fine. Let’s talk about the failover coordinator.

The coordinator

Redis Sentinel is not linearizable; nor are its proposed improvements. Whatever failover system you’re planning to use here is going to need something stronger. In fact, we can’t even guarantee safety using a strong coordination service like ZooKeeper to serialize the failover operations, because ZooKeeper cannot guarantee the mutual exclusion of two services in the presence of message delays and clock skews. Let’s paper over that issue by introducing large delays and carefully ordering our timeouts.

It gets worse. Even if we did have a perfect mutex around the coordinator, two coordinators could issue messages to the same Redis nodes which arrive out of order. TCP does not guarantee ordering between two distinct TCP streams, which means we might see coordinator A initiate a failover process then time out halfway, followed by coordinator B, which begins the failover process, only to be interrupted on some nodes by messages en route through the network from coordinator A. Don’t believe me? TCP message delays have been reported in excess of ninety seconds. That one took out GitHub.

It gets even worse. If the original primary P1 is isolated from the coordinator, the coordinator will not be able to force P1 to step down. Indeed, P1 could remain a primary for the entire duration of a failover, accepting writes, making state changes, and attempting to replicate those changes to other nodes. This is dangerous because we cannot atomically guarantee that the new majority of nodes will reject those writes.

- A client writes to P1, which replicates to secondaries S2, S3, S4, and S5.

- The coordinator attempts to elect a new primary, and sees S2, S3, S4, and S5.

- Without loss of generality, assume S2 has the highest replication offset. The coordinator promotes S2 to P2.

- P1 receives acks from S3, S4, and S5, and, having reached a majority, returns success to the client.

- The coordinator reparents S3, S4, and S5 to P2, destroying the write.
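Here is a toy model of that interleaving. It assumes a node is just a map with a `:log` vector and that reparenting behaves like a full resync, where the follower discards its log and copies the new primary's. Both are simplifications of mine, not a description of Redis internals.

```clojure
(def initial
  ;; Five nodes that all start with the same single entry.
  (zipmap [:p1 :s2 :s3 :s4 :s5] (repeat {:log [:a]})))

(defn replicate-to
  "Appends write w to the log of each target node."
  [cluster w targets]
  (reduce (fn [c node] (update-in c [node :log] conj w)) cluster targets))

(defn reparent
  "Models a full resync: each follower replaces its log with the new primary's."
  [cluster primary followers]
  (let [log (get-in cluster [primary :log])]
    (reduce (fn [c node] (assoc-in c [node :log] log)) cluster followers)))

(defn holders
  "Set of nodes whose log contains write w."
  [cluster w]
  (set (for [[node {:keys [log]}] cluster
             :when (some #{w} log)]
         node)))

(def acked
  (-> initial
      (replicate-to :w [:p1])            ; client writes :w to P1
      ;; the coordinator promotes S2 here; S2 never receives :w
      (replicate-to :w [:s3 :s4 :s5])))  ; S3-S5 apply :w and ack to P1

(def acks (dec (count (holders acked :w)))) ; 3 replica acks, so WAIT 2 succeeds

(def healed (reparent acked :s2 [:s3 :s4 :s5]))

(holders healed :w) ; => #{:p1}
```

The client was told the write succeeded, yet after reparenting it survives only on the isolated old primary.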

You might try to solve this by forcing S2–S5 into a read-only, non-replicating mode before attempting to promote a new primary, but that gets into a whole other morass of issues around multiple state transitions and partial failures. Suffice it to say: it’s difficult to solve this by simply pausing nodes first. Maybe impossible? I’m not sure.

Typically, replication protocols solve this problem by guaranteeing that writes from P1 cannot be accepted after S2–S5 acknowledge to the coordinator that they will participate in a new cohort. This often takes the form of a ballot (Paxos), epoch (ZAB, Viewstamped Replication), or term (Raft). Redis has no such construct, and antirez seems to eschew it as unnecessary:

In this model, it is possible to reach linearizability? I believe, yes, because we removed all the hard part, for which the strong protocols like Raft use epochs.
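For contrast, here is roughly what an epoch buys you. This is a deliberately tiny sketch, not Raft, Paxos, ZAB, or anything Redis implements: the names `join-cohort` and `apply-write` are hypothetical, and a replica is modeled as a map with an `:epoch` and a `:log`.

```clojure
(defn join-cohort
  "The replica promises the coordinator that it will take part in `epoch`.
  From now on it refuses anything stamped with an older epoch."
  [replica epoch]
  (update replica :epoch max epoch))

(defn apply-write
  "Applies a replicated write stamped with the sender's epoch.
  Returns [replica' acked?]; stale writes are rejected and not acked."
  [replica {:keys [epoch value]}]
  (if (< epoch (:epoch replica))
    [replica false]
    [(-> replica
         (assoc :epoch epoch)
         (update :log conj value))
     true]))

(def demo
  (let [s3          {:epoch 1 :log []}
        s3          (join-cohort s3 2)                     ; promise to the coordinator
        [s3 ack-p1] (apply-write s3 {:epoch 1 :value :w})  ; late write from old primary
        [s3 ack-p2] (apply-write s3 {:epoch 2 :value :x})] ; write from new primary
    [ack-p1 ack-p2 (:log s3)]))

demo ; => [false true [:x]]
```

Once a replica has joined the new cohort, the old primary can no longer collect its acknowledgement, so it cannot assemble a majority for a write the new cohort will never see.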

This brings us to a different, but related series of problems.

The servers

By using the offset in the replication log as the determining factor in which nodes are promotable, the proposed failover design opens the door for significant data loss.

Imagine the following sequence:

- The primary P1, with log offset O1, becomes isolated from S3, S4, and S5.

- Clients writing to P1 see their operations using WAIT 2 fail.

- S3 is promoted to P3, with offset O1=O3. Clients writing to P3 see their writes succeed, replicated to S4 and S5.

- More operations occur on P1 than on P3. O1 becomes greater than O3.

- The partition heals; the coordinator can see both P1 and P3.

- The coordinator sees that O1 is higher than O3, and chooses P1 as the new primary.

- P3 is demoted, and all its acknowledged writes are destroyed.
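The sequence above boils down to one comparison. In this sketch the offsets and operation counts are made-up numbers, and `heal` is a hypothetical helper that applies the highest-offset rule to the two diverged primaries.

```clojure
(defn heal
  "Applies the highest-offset rule after the partition heals. `sides` maps
  each primary to {:offset n, :acked k}, where k counts the operations
  acknowledged to clients since the split."
  [sides]
  (let [winner (key (apply max-key (comp :offset val) sides))]
    {:primary    winner
     :acked-lost (->> (dissoc sides winner) vals (map :acked) (reduce +))}))

;; Hypothetical numbers: both sides were at offset 100 when they diverged.
(heal {:p1 {:offset 150 :acked 0}     ; 50 ops applied locally, none passed WAIT 2
       :p3 {:offset 130 :acked 30}})  ; 30 ops acknowledged by S4 and S5
;; => {:primary :p1, :acked-lost 30}
```

The offset measures how much a node has written, not how much of it was acknowledged, so the side full of failed writes wins.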

Don’t believe me? Here, let’s try it. Here’s a function which implements (more or less) the proposed coordinator algorithm. Note that we’re not demoting the original primary because it may not be reachable.

    (defn elect!
      "Forces an election among the given nodes. Picks the node with the highest
      replication offset, promotes it, and re-parents the secondaries."
      [nodes]
      (let [highest (highest-node nodes)]
        (log "Promoting" highest)
        (with-node highest
          (redis/slaveof "no" "one"))
        (doseq [node (remove #{highest} nodes)]
          (log "Reparenting" node "to" highest)
          (with-node node
            (redis/slaveof highest 6379)))))

And in the test, we’ll use WAIT to ensure that only writes which are successfully replicated to 2 or more replicas are considered successful:

    (add [app element]
      (try
        (redis/with-conn pool spec
          (redis/sadd key element))

        ; Block for 2 secondaries (3 total) to ack.
        (let [acks (redis/with-conn pool spec
                     (taoensso.carmine.protocol/send-request! "WAIT" 2 1000))]
          (if (< acks 2)
            (do
              (log "not enough copies: " acks)
              error)
            ok))
        (catch Exception e
          ; Writes sent to a read-only secondary are logged as failures.
          (if (->> e .getMessage (re-find #"^READONLY"))
            error
            (throw e)))))

I’m gonna punt on informing clients which node is the current primary; we’ll just issue set-add requests to each node independently. Jepsen only cares about whether successful writes are lost, so we’ll let those writes fail and log ’em as unsuccessful.

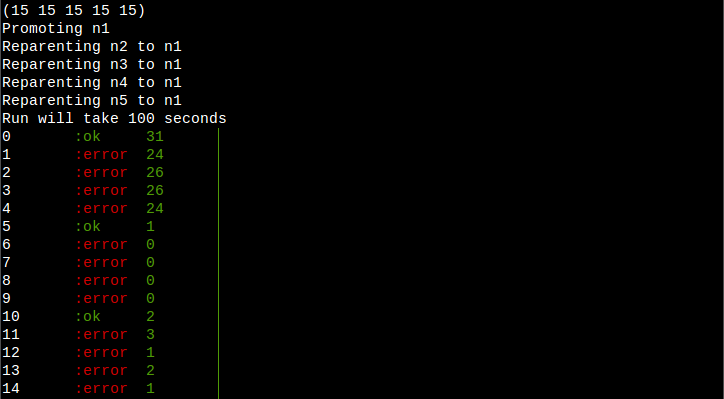

Initially, the offset for all 5 nodes is 15. Writes complete successfully on P1 and fail on S2–S5.

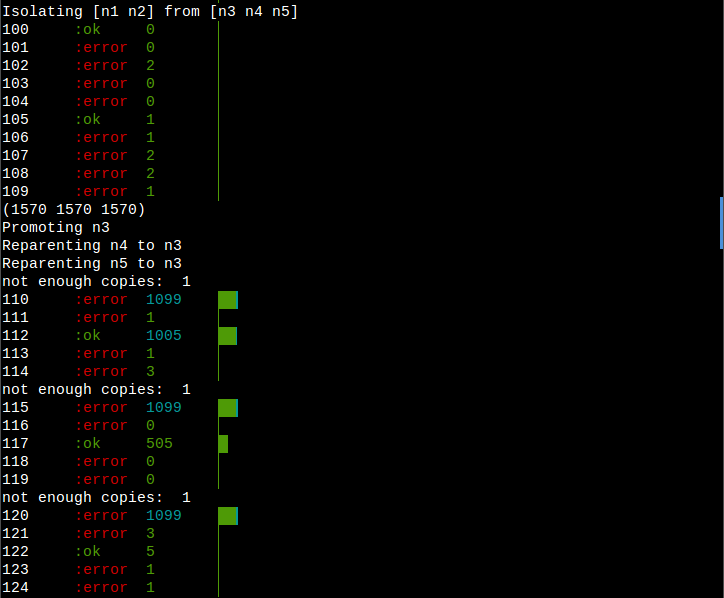

We cut off P1 and S2 from S3, S4, and S5. S3, S4, and S5 all have equal offsets (1570), so we promote S3 to P3. As soon as the partition takes effect, writes to P1 begin to fail–we see not enough copies: 1, and an :error status for write 110, 115, and so on. Latencies on P1 jump to 1 second, since that’s how long we’re blocking for using WAIT.

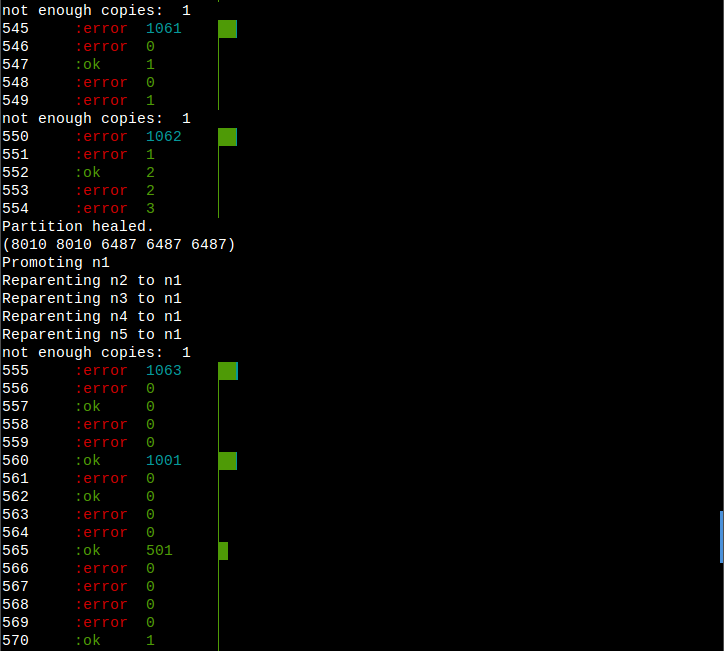

Writes complete successfully on P3, since it can see a majority of nodes: itself, S4, and S5. We heal the partition and initiate a second election. Since P1’s offset (8010) is higher than P3’s (6487), we preserve P1 as a primary and demote all other nodes to follow it. All P3’s writes accepted during the partition are silently destroyed.

Note that there’s actually a window here where writes can successfully take place on either P1 or P3 in a mixed sequence, depending on the order in which the secondaries are reparented. Both 560 and 562 complete successfully, even though 562 was written to S3, which was demoted at that point in time. Some weird opportunity for timing anomalies there.

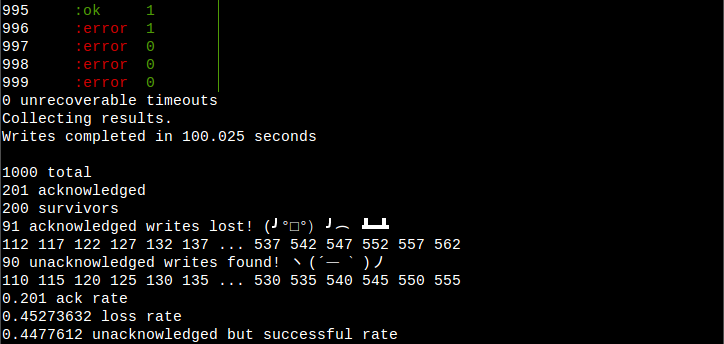

These results are catastrophic. In a partition which lasted for roughly 45% of the test, 45% of acknowledged writes were thrown away. To add insult to injury, Redis preserved all the failed writes in place of the successful ones.
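For reference, the loss figure is just set arithmetic over what clients were told versus what the set contains at the end. The sketch below is not Jepsen's actual checker: `loss-report` is a hypothetical helper, the sample sets are toy data, and it assumes at least one acknowledged write.

```clojure
(require '[clojure.set :as set])

(defn loss-report
  "Compares the writes clients saw acknowledged with the final set contents.
  Assumes `acked` is non-empty."
  [acked final]
  (let [lost (set/difference acked final)]
    {:lost       lost                                ; acknowledged but missing
     :lost-ratio (/ (count lost) (count acked))
     :unexpected (set/difference final acked)}))     ; unacknowledged but present

(loss-report #{1 2 3 4} #{1 2 9})
;; => {:lost #{3 4}, :lost-ratio 1/2, :unexpected #{9}}
```

The `:unexpected` entry corresponds to the insult-to-injury case: writes reported as failed that nonetheless ended up in the final state.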

Additional issues

Two bugs amplify this problem. Note that this is the unstable branch, so this isn’t a huge deal right now:

First, Redis secondaries return -1 for their offset when they detect the primary is down. Returning a special status code makes sense… but not if you’re using the offset to determine which nodes become the primary. This could cause the highest nodes to appear the lowest, and vice versa. If a fresh node has offset 0, and all other nodes return offset -1, this could cause a cluster to erase all data ever written to it.

Second, Redis resets the replication offset to zero every time a node is promoted. Again, a reasonable choice in isolation, but it actually maximizes the chances that this particular failure mode will occur. The current design is biased towards data loss.
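Both bugs are easy to see once the election is reduced to "take the maximum offset". In this sketch `pick-primary` and `promote` are hypothetical stand-ins for the coordinator and for Redis's promotion behavior, and the offsets are illustrative.

```clojure
(defn pick-primary
  "Chooses the node reporting the highest replication offset."
  [offsets]
  (key (apply max-key val offsets)))

;; Bug 1: secondaries that have lost their primary report -1,
;; so an empty node at offset 0 outranks every node holding real data.
(pick-primary {:s2 -1 :s3 -1 :s4 -1 :fresh 0})
;; => :fresh

(defn promote
  "Models promotion resetting the node's replication offset to zero."
  [offsets node]
  (assoc offsets node 0))

;; Bug 2: the promoted node restarts from zero, so the isolated old primary
;; keeps the higher offset even if it accepts nothing new.
(pick-primary (-> {:p1 1570 :s3 1570}
                  (promote :s3)
                  (update :s3 + 100)))  ; 100 acknowledged ops on the new primary
;; => :p1
```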

Even if these bugs were corrected, the problem could still occur. All that’s required is for more operations to happen on P1 than P3 after the two diverge.

Going forward

Distributed systems design is really hard, but engineers continue to assume otherwise:

However I think that distributed systems are not super hard, like kernel programming is not super hard, like C system programming is not super hard. Everything new or that you don’t do in a daily basis seems super hard, but it is actually different concepts that are definitely things everybody here in this list can master.

For sure a few months of exposure will not make you able to provide work like Raft or Paxos, but the basics can be used in order to try to design practical systems, that can be improved over time.

I assert just the opposite: we need formal theory, written proofs, computer verification, and experimental demonstration that our systems make the tradeoffs we think they make. Throughout the Redis criticism thread and discussion on Twitter, I see engineers assuming that they understand the tradeoffs despite the presence of gaping holes in the system’s safety net.

This behavior endangers users.

These list threads and blog posts are the sources that users come to, years later, to understand the safety properties of our systems. They’ll read our conjectures and idle thoughts and tease out some gestalt, and use that to build their systems on top of ours. They’ll miss subtle differences in phrasing and they won’t read every reply. Most won’t do any reading at all; they’re not even aware that these problems could exist.

Engineers routinely characterize Redis’s reliability as “rock solid”.

This is part of why I engage in these discussions so vocally. As systems engineers, we continually struggle to erase the assumption of safety before that assumption causes data loss or downtime. We need to clearly document system behaviors so that users can make the right choices.

We must understand our systems in order to explain them–and distributed systems are hard to understand. That’s why it’s so important that we rely on formal models, on proofs, instead of inventing our own consensus protocols–because much of the hard work of understanding has been done already. We can build on that work. Implementing a peer-reviewed paper is vastly simpler than trying to design and verify an algorithm from scratch–or worse, evolving one piecemeal, composed of systems which encode subtly different assumptions about their responsibilities to the world. Those designs lead to small gaps which, viewed from the right angle, become big enough to drive a truck through.

I wholeheartedly encourage antirez, myself, and every other distributed systems engineer: keep writing code, building features, solving problems–but please, please, use existing algorithms, or learn how to write a proof.

How long was it before people stopped writing their own encryption protocols? How many blog posts had to be written to convince application programmers that peer-reviewed encryption schemes are better than whatever cockamamie algorithm they could come up with? I salute your efforts to educate and raise awareness, but alas, I fear you have embarked on a decade-long journey. Then again, if your efforts reach even one other person, I think you can consider it a success.