For more on Timelike and routing simulation, check out part 2 of this article: everything fails all the time. There’s also more discussion on Reddit.

RapGenius is upset about Heroku’s routing infrastructure. RapGenius, like many web sites, uses Rails, and Rails is notoriously difficult to operate in a multithreaded environment. Heroku operates at large scale, and made engineering tradeoffs which gave rise to high latencies–latencies with adverse effects on customers. I’d like to explore why Heroku’s Bamboo architecture behaves this way, and help readers reason about their own network infrastructure.

To start off with, here’s a Rails server. Since we’re going to be discussing complex chains of network software, I’ll write it down as an s-expression:

(server :rails)

Let’s pretend that server has some constant request-parsing overhead–perhaps 20 milliseconds–and an exponentially-distributed processing time with a mean of 100 milliseconds.

(delay-fixed 20

(delay-exponential 100

(server :rails)))

Heroku runs a Rails application in a virtual machine called a Dyno, on EC2. Since the Rails server can only do one thing at a time, the dyno keeps a queue of HTTP requests, and applies them sequentially to the rails application. We’ll talk to the dyno over a 2-millisecond-long network cable.

(defn dyno []

(cable 2

(queue-exclusive

(delay-fixed 20

(delay-exponential 100

(server :rails))))))

This node can process an infinite queue of requests at the average rate of 1 every 124 milliseconds (2 + 20 + 100 + 2). But some requests take longer than others. What happens if your request lands behind a different, longer request? How long do you, the user, have to wait?

Introducing Timelike

Surprise! This way of describing network systems is also executable code. Welcome to Timelike.

(cable 2 ...) returns a function which accepts a request, sleeps for 2 milliseconds, then passes the request to a child function–in this case, a queuing function returned by queue-exclusive. Then cable sleeps for 2 more milliseconds to simulate the return trip, and returns the response from queue-exclusive. The request (and response) are just a list of events, each one timestamped. The return value of each function, or “node”, is the entire history of a request as it passes through the pipeline.

Network node composition is function composition–and since they’re functions, we can run them.

(let [responses (future*

; In a new thread, generate poisson-distributed

; requests. We want 10,000 total, spaced roughly

; 150 milliseconds apart. Apply them to a single

; dyno.

(load-poisson 10000 150 req (dyno)))]

(prn (first @responses))

(pstats @responses))

Timelike doesn’t actually sleep for 150 milliseconds between requests. The openjdk and oracle schedulers are unreliable as it stands–and we don’t actually need to wait that long to compute the value of this function. We just virtualize time for every thread in the network (in this case, a thread per request). All operations complete “immediately” according to the virtual clock, and the clock only advances when threads explicitly sleep. We can still exploit parallelism whenever two threads wake up at the same time, and advance the clock whenever there’s no more work to be done at a given time. The scheduler will even detect deadlocks and allow the clock to advance when active threads are blocked waiting to acquire a mutex held by a thread which won’t release it until the future… though that’s a little slow. ;-)

The upside of all this ridiculous lisp is that you can simulate concurrent systems where the results are independent of wall-clock time, which makes it easier to compare parallel systems at different scales. You can simulate one machine or a network of thousands, and the dynamics are the same.

Here’s an example request, and some response statistics. We discard the first and last parts of the request logs to avoid measuring the warm-up or cool-down period of the dyno queue.

[{:time 0} {:node :rails, :time 66}]

Total reqs: 10000

Selected reqs: 5000

Successful frac: 1.0

Request rate: 6.6635394 reqs/s

Response rate: 6.653865 reqs/s

Latency distribution:

Min: 22.0

Median: 387.0

95th %: 1728.0

99th %: 2894.1100000000024

Max: 3706.0

Since the request and response rates are close, we know the dyno was stable during this time–it wasn’t overloaded or draining its queue. But look at that latency distribution! Our median request took 3 times the mean, and some requests blocked for multiple seconds. Requests which stack up behind each other have to wait, even if they could complete quickly. We need a way to handle more than one request at a time.

How do you do that with a singlethreaded Rails? You run more server processes at once. In Heroku, you add more dynos. Each runs in parallel, so with n dynos you can (optimally) process n requests at a time.

(defn dynos

"A pool of n dynos"

[n]

(pool n (dyno)))

There’s those funny macros again.

Now you have a new problem: how do you get requests to the right dynos? Remember, whatever routing system we design needs to be distributed–multiple load balancers have to coordinate about the environment.

Random routing

Random load balancers are simple. When you get a new request, you pick a random dyno and send the request over there. In the infinite limit this is fine; a uniformly even distribution will distribute an infinite number of requests evenly across the cluster. But our systems aren’t infinite. A random LB will sometimes send two, or even a hundred requests to the same dyno even when its neighbors go unused. That dyno’s queue will back up, and everyone in that queue has to wait for all the requests ahead of them.

(lb-random

(dynos 250))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 1.0

Request rate: 1039.7172 reqs/s

Response rate: 1012.6787 reqs/s

Latency distribution:

Min: 22.0

Median: 162.0

95th %: 631.0

99th %: 970.0

Max: 1995.0

A cool thing about random LBs is that they require little coordinated state. You don’t have to agree with your peers about where to route a request. They also compose freely: a layer of random load balancers over another layer of random load balancers has exactly the same characteristics as a single random load balancer, assuming perfect concurrency. On the other hand, leaving nodes unused while piling up requests on a struggling dyno is silly. We can do better.

Round-Robin routing

Round-robin load balancers write down all their backends in a circular list (also termed a “ring”). The first request goes to the first backend in the ring; the second request to the second backend, and so forth, around and around. This has the advantage of evenly distributing requests, and it’s relatively simple to manage the state involved: you only need to know a single number, telling you which element in the list to point to.

(lb-rr

(dynos 250))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 1.0

Request rate: 1043.9939 reqs/s

Response rate: 1029.6116 reqs/s

Latency distribution:

Min: 22.0

Median: 105.0

95th %: 375.0

99th %: 560.0

Max: 1173.0

We halved our 95th percentile latencies, and cut median request time by roughly a third. RR balancers have a drawback though. Most real-world requests–like the one in our model–take a variable amount of time. When that variability is large enough (relative to pool saturation), round robin balancers can put two long-running requests on the same dyno. Queues back up again.

Least-connections routing

A min-conn LB algorithm keeps track of the number of connections which it has opened on each particular backend. When a new connection arrives, you find the backend with the least number of current connections. For singlethreaded servers, this also corresponds to the server with the shortest queue (in terms of request count, not time).

(lb-min-conn

(dynos 250))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 1.0

Request rate: 1049.7806 reqs/s

Response rate: 1041.1244 reqs/s

Latency distribution:

Min: 22.0

Median: 92.0

95th %: 322.0

99th %: 483.0

Max: 974.0

Our 95th percentile latency has gone from 600 ms, to 375 ms, to 322ms. This algorithm is significantly more efficient over our simulated dynos than random or round-robin balancing–though it’s still not optimal. An optimal algorithms would predict the future and figure out how long the request will take before allocating it–so it could avoid stacking two long-running requests in the same queue.

Least-conns also means keeping track of lots of state: a number for every dyno, at least. All that state has to be shared between the load balancers in a given cluster, which can be expensive. On the other hand, we could afford up to a 200-millisecond delay on each connection, and still be more efficient than a random balancer. That’s a fair bit of headroom.

Meanwhile, in the real world

Heroku can’t use round-robin or min-conns load balancers for their whole infrastructure–it’s just too big a problem to coordinate. Moreover, some of the load balancers are far apart from each other so they can’t communicate quickly or reliably. Instead, Heroku uses several independent least-conns load balancers for their Bamboo stack. This has a drawback: with two least-conns routers, you can load the same dyno with requests from both routers at once–which increases the queue depth variability.

Let’s hook up a random router to a set of min-conns routers, all backed by the same pool of 250 dynos. We’ll separate the random routing layer from the min-conns layer by a 5-millisecond-long network cable.

(defn bamboo-test

[n]

(test-node (str "Bamboo with " n " routers")

(let [dynos (dynos pool-size)]

(lb-random

(pool n

(cable 5

(lb-min-conn

dynos)))))))

(deftest ^:bamboo bamboo-2

(bamboo-test 2))

(deftest ^:bamboo bamboo-4

(bamboo-test 4))

(deftest ^:bamboo bamboo-8

(bamboo-test 8))

(deftest ^:bamboo bamboo-16

(bamboo-test 16))

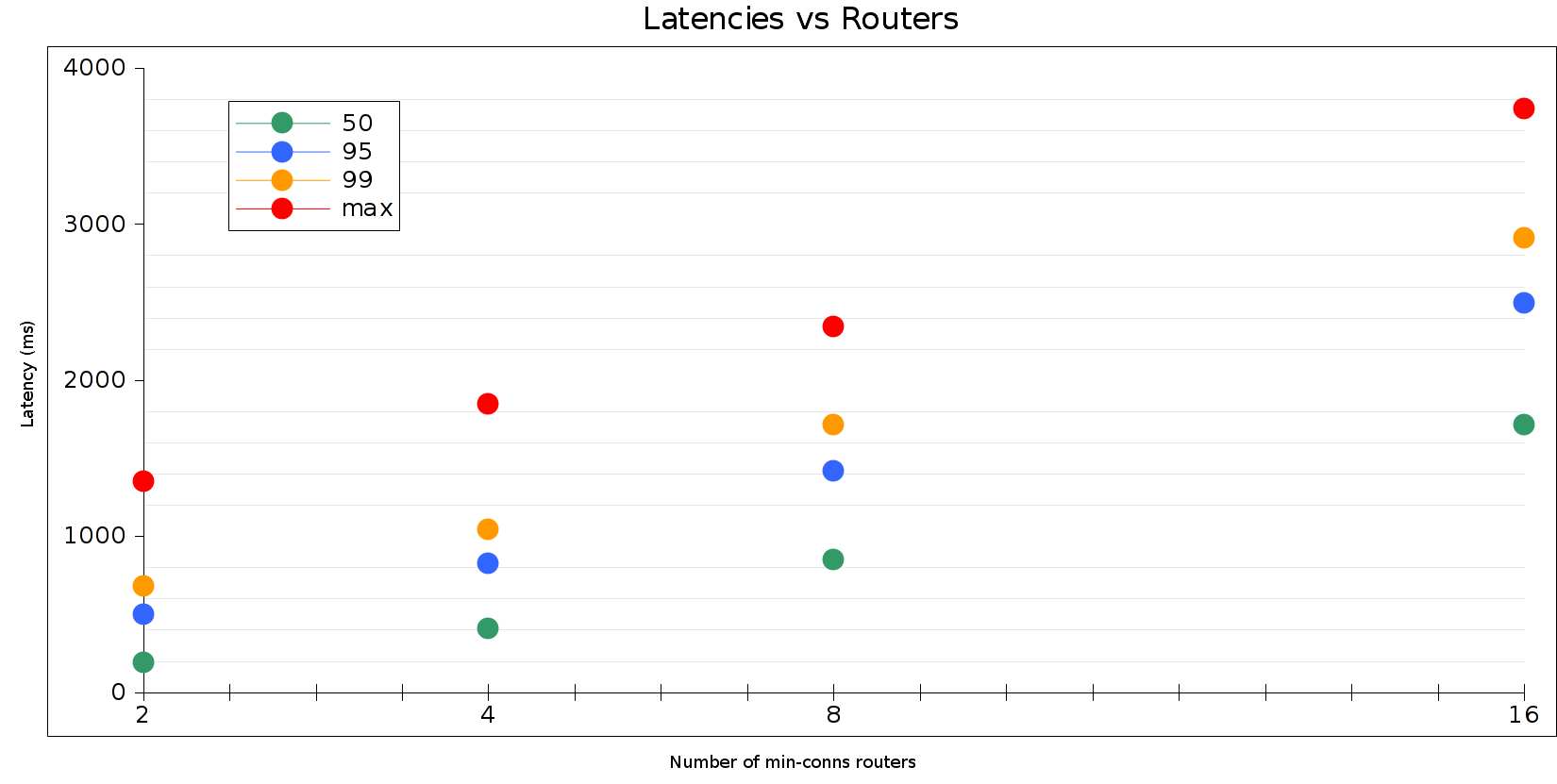

This plot sums up, in a nutshell, why RapGenius saw terrible response times. Latencies in this model–especially those killer 95th and 99th percentile times–rise linearly with additional least-conns routers (asymptotically bounded by the performance of a random router). As Heroku’s Bamboo cluster grew, so did the variability of dyno queue depths.

This is not the only routing topology available. In part 2, I explore some other options for distributed load balancing. If you want to experiment with Timelike for yourself, check out the github project.

Wow. What an extraordinary and straightforward explanation of a hugely complex topic.