In the previous post, I described an approximation of Heroku’s Bamboo routing stack, based on their blog posts. Hacker News, as usual, is outraged that the difficulty of building fast, reliable distributed systems could prevent Heroku from building a magically optimal architecture. Coda Hale quips:

Really enjoying @RapGenius’s latest mix tape, “I Have No Idea How Distributed Systems Work”.

Coda understands the implications of the CAP theorem. This job is too big for one computer–any routing system we design must be distributed. Distribution increases the probability of a failure, both in nodes and in the network itself. These failures are usually partial, and often take the form of degradation rather than the system failing as a whole. Two nodes may be unable to communicate with each other, though a client can see both. Nodes can lie to each other. Time can flow backwards.

CAP tells us that under these constraints, we can pick two of three properties (and I’m going to butcher them in an attempt to be concise):

- Consistency: nodes agree on the system’s state.

- Availability: the system accepts requests.

- Partition tolerance: the system runs even when the network delays or drops some messages.

In the real world, partitions are common, and failing to operate during a partition is essentially a failure of availability. We must choose CP or AP, or some probabilistic blend of the two.

There’s a different way to talk about the properties of a distributed system–and I think Peter Bailis explains it well. Liveness means that at every point, there exists a sequence of operations that allows the “right thing” to happen–e.g. “threads are never deadlocked” or “you never get stuck in an infinite loop”. Safety means the system fails to do anything bad. Together, safety and liveness ensure the system does good things on time.

With this in mind, what kind of constraints apply to HTTP request routing?

- The system must be partition tolerant.

- The system must be available–as much as possible, anyway. Serving web pages slower is preferable to not serving them at all. In the language of CAP, our system must be AP.

- But we can’t wait too long, because requests which take more than a minute to complete are essentially useless. We have a liveness constraint.

- Requests must complete correctly, or not at all. We can’t route an HTTP POST to multiple servers at once, or drop pieces of requests on the floor. We have a safety constraint.

It’s impossible to do this perfectly. If all of our data centers are nuked, there’s no way we can remain available. If the network lies to us, it can be impractical to guarantee correct responses. And we can let latencies rise to accommodate failure: the liveness constraint is flexible.

Finally, we’re real engineers. We’re going to make mistakes. We have limited time and money, limited ability to think, and must work with existing systems which were never designed for the task at hand. Complex algorithms are extraordinarily difficult to prove–let alone predict–at scale, or under the weird failure modes of distributed systems. This means it’s often better to choose a dumb but predictable algorithm over an optimal but complex one.

What I want to make clear is that Heroku is full of smart engineers–and if they’re anything like the engineers I know, they’re trying their hardest to adapt to a rapidly changing problem, fighting fires and designing new systems at the same time. Their problems don’t look anything like yours or mine. Their engineering decisions are driven by complex and shifting internal constraints which we can’t really analyze or predict. When I talk about “improved routing models” or “possible alternatives”, please understand that those models may be too complex, incompatible, or unpredictable to build in a given environment.

Dealing with unreliability

Returning to our Bamboo stack simulation, I’d like to start by introducing failure dynamics.

Real nodes fail. We’ll make our dynos unreliable with the faulty function, which simulates a component which stays online for an exponentially-distributed time before crashing, then returns error responses instead of allowing requests to pass through. After another exponentially-distributed outage time, it recovers, and the process continues. You can interpret this as a physical piece of hardware, or a virtual machine, or a hot-spare scenario where another node spins up to take the downed one’s place, etc. This is a fail-fast model–the node returns failure immediately instead of swallowing messages indefinitely. Since the simulations we’re running are short-lived, I’m going to choose relatively short failure times so we can see what happens under changing dynamics.

(defn faulty-dyno []

(cable 2

; Mean time before failure of 20 seconds, and

; mean time before resolution of one second.

(faulty 20000 1000

(queue-exclusive

(delay-fixed 20

(delay-exponential 100

(server :rails))))))

Again, we’re using a pool of 250 dynos and a poisson-distributed load function. Let’s compare an even load balancer with a pool of perfect dynos vs a pool of faulty ones:

(test-node "Reliable min-conn -> pool of faulty dynos."

(lb-min-conn

(pool pool-size

(faulty-dyno)))))

. Ideal dynos 95% available dynos

Total reqs: 100000 100000

Selected reqs: 50000 50000

Successful frac: 1.0 0.62632

Request rate: 678.2972 reqs/s 679.6156 reqs/s

Response rate: 673.90894 reqs/s 676.74567 reqs/s

Latency distribution:

Min: 24.0 4.0

Median: 93.0 46.5

95th %: 323.0 272.0

99th %: 488.0 438.0

Max: 1044.0 914.0

Well that was unexpected. Even though our pool is 95% available, over a third of all requests fail. Because our faulty nodes fail immediately, they have smaller queues on average–and the min-conns load balancer routes more requests to them. Real load balancers like HAProxy keep track of which nodes fail and avoid routing requests to them. Haproxy uses active health checks, but for simplicity I’ll introduce a passive scheme: when a request fails, don’t decrement that host’s connection counter immediately. Instead, wait for a while–say 1 second, the mean time to resolution for a given dyno. We can still return the error response immediately, so this doesn’t stop the load balancer from failing fast, but it will reduce the probability of assigning requests to broken nodes.

(lb-min-conn :lb {:error-hold-time 1000}

(pool pool-size

(faulty-dyno)))))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 0.98846

Request rate: 678.72076 reqs/s

Response rate: 671.3302 reqs/s

Latency distribution:

Min: 4.0

Median: 92.0

95th %: 323.0

99th %: 486.0

Max: 1157.0

Throughput is slightly lower than the ideal, perfect pool of dynos, but we’ve achieved 98% reliability over a pool of nodes which is only 95% available, and done it without any significant impact on latencies. This system is more than the sum of its parts.

This system has an upper bound on its reliability: some requests must fail in order to determine which dynos are available. Can we do better? Let’s wrap the load balancer with a system that retries requests on error, up to three requests total:

(test-node "Retry -> min-conn -> faulty pool"

(retry 3

(lb-min-conn :lb {:error-hold-time 1000}

(pool pool-size

(faulty-dyno))))))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 0.99996

Request rate: 676.8098 reqs/s

Response rate: 670.16046 reqs/s

Latency distribution:

Min: 12.0

Median: 94.0

95th %: 320.0

99th %: 484.0

Max: 944.0

The combination of retries, least-conns balancing, and diverting requests away from failing nodes allows us to achieve 99.996% availability with minimal latency impact. This is a great building block to work with. Now let’s find a way to compose it into a large-scale distributed system.

Multilayer routing

Minimum-connections and round-robin load balancers require coordinated state. If the machines which comprise our load balancer are faulty, we might try to distribute the load balancer itself in a highly available fashion. That would require state coordination with low latency bounds–and the CAP theorem tells us this is impossible to do. We’d need to make probabilistic tradeoffs under partitions, like allowing multiple requests to flow to the same backend.

What if we punt on AP min-conns load balancers? What if we make them single machines, or CP clusters? As soon as the load balancer encountered a problem, it would become completely unavailable.

(defn faulty-lb

[pool]

(faulty 20000 1000

(retry 3

(lb-min-conn :lb {:error-hold-time 1000}

pool))))

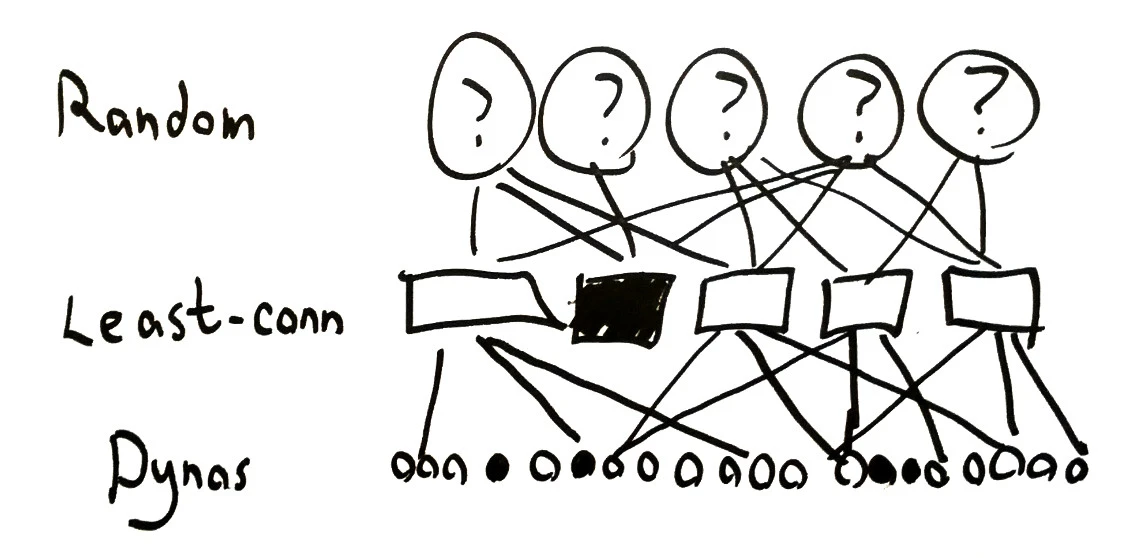

Let’s model the Bamboo architecture again: a stateless, random routing layer on top, which allocates requests to a pool of 10 faulty min-conns load balancers, all of which route over a single pool of faulty dynos:

(test-node "Random -> 10 faulty lbs -> One pool"

(let [dynos (dynos pool-size)]

(lb-random

(pool 10

(cable 5

(faulty-lb

dynos)))))))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 0.9473

Request rate: 671.94366 reqs/s

Response rate: 657.87744 reqs/s

Latency distribution:

Min: 10.0

Median: 947.0

95th %: 1620.0

99th %: 1916.0

Max: 3056.0

Notice that our availability dropped to 95% in the two-layer distributed model. This is a consequence of state isolation: because the individual least-conns routers don’t share any state, they can’t communicate about which nodes are down. That increases the probability that we’ll allocate requests to broken dynos. A load-balancer which performed active state-checks wouldn’t have this problem; but we can work around it by adding a second layer of retries on top of the stateless random routing layer:

(let [dynos (pool pool-size (faulty-dyno))]

(retry 3

(lb-random

(pool 10

(cable 5

(faulty-lb

dynos))))))))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 0.99952

Request rate: 686.97363 reqs/s

Response rate: 668.2616 reqs/s

Latency distribution:

Min: 30.0

Median: 982.0

95th %: 1639.0

99th %: 1952.010000000002

Max: 2878.0

This doesn’t help our latency problem, but it does provide three nines availability! Not bad for a stateless routing layer on top of a 95% available pool. However, we can do better.

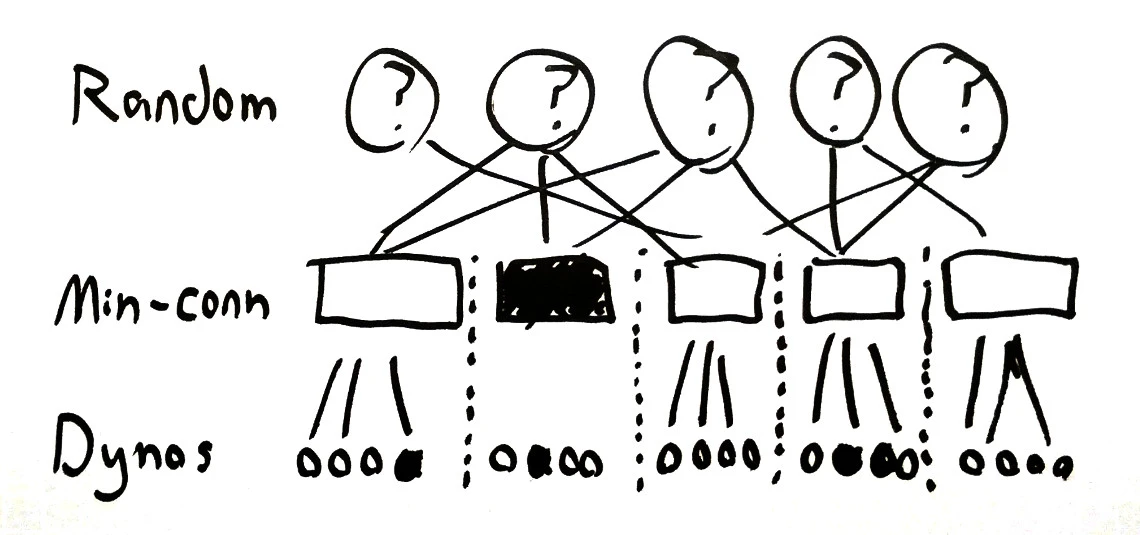

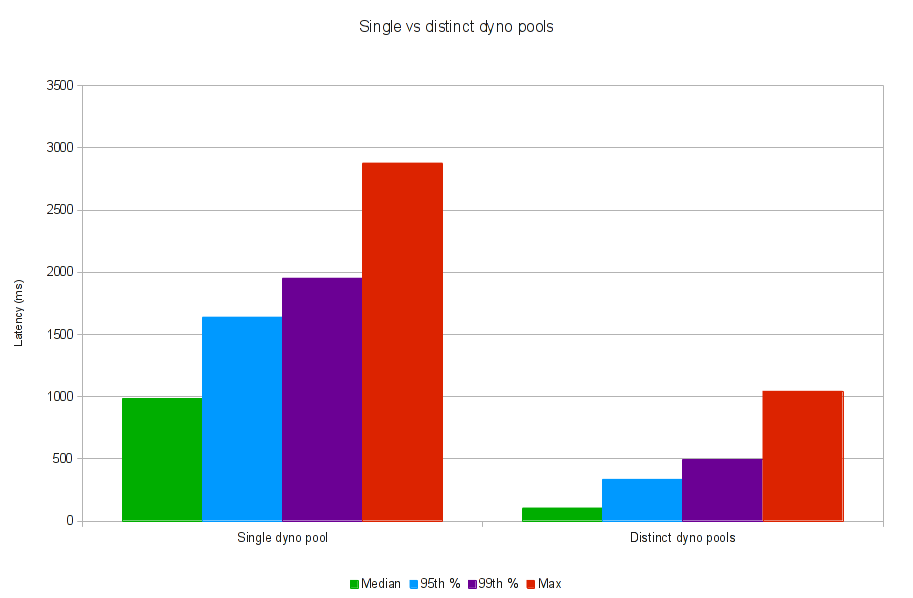

Isolating the least-conns routers from each other is essential to preserve liveness and availability. On the other hand, it means that they can’t share state about how to efficiently allocate requests over the same dynos–so they’ll encounter more failures, and queue multiple requests on the same dyno independently. One way to resolve this problem is to ensure that each least-conns router has a complete picture of its backends’ state. We isolate the dynos from one another:

This has real tradeoffs! For one, an imbalance in the random routing topology means that some min-conns routers will have more load than their neighbors–and they can’t re-route requests to dynos outside their pool. And since our min-conns routers are CP systems in this architecture, when they fail, an entire block of dynos is unroutable. We have to strike a balance between more dynos per block (efficient least-conns routing) and more min-conn blocks (reduced impact of a router failure).

Let’s try 10 blocks of 25 dynos each:

(test-node "Retry -> Random -> 10 faulty lbs -> 10 pools"

(retry 3

(lb-random

(pool 10

(cable 5

(faulty-lb

(pool (/ pool-size 10)

(faulty-dyno)))))))))

Total reqs: 100000

Selected reqs: 50000

Successful frac: 0.99952

Request rate: 681.8213 reqs/s

Response rate: 677.8099 reqs/s

Latency distribution:

Min: 30.0

Median: 104.0

95th %: 335.0

99th %: 491.0

Max: 1043.0

Whoah! We’re still 99.9% available, even with a stateless random routing layer on top of 10 95% available routers. Throughput is slightly down, but our median latency is nine times lower than the homogenous dyno pool.

I think system composition is important in distributed design. Every one of these components is complex. It helps to approach each task as an isolated system, and enforce easy-to-understand guarantees about that component’s behavior. Then you can compose different systems together to make something bigger and more useful. In these articles, we composed an efficient (but nonscalable) CP system with an inefficient (but scalable) AP system to provide a hybrid of the two.

If you have awareness of your network topology and are designing for singlethreaded, queuing backends, this kind of routing system makes sense. However, it’s only going to be efficient if you can situate your dynos close to their least-conns load balancer. One obvious design is to put one load balancer in each rack, and hook it directly to the rack’s switch. If blocks are going to fail as a group, you want to keep those blocks within the smallest network area possible. If you’re working in EC2, you may not have clear network boundaries to take advantage of, and correlated failures across blocks could be a real problem.

This architecture also doesn’t make sense for concurrent servers–and that’s a growing fraction of Heroku’s hosted applications. I’ve also ignored the problem of dynamic pools, where dynos are spinning up and exiting pools constantly. Sadly I’m out of time to work on this project, but perhaps a reader will chime in a model for for distributed routing over concurrent servers–maybe with a nonlinear load model for server latencies?

Thanks for exploring networks with me!

The strict definition of liveness is: for any partial execution, there exists a sequence of events/steps which will achieve the desired outcome. That does not imply something completes in a finite amount of time – that’s a safety property, because there is a specific point in time at which it can be violated. Liveness properties are violated when there is a state in which the system can never achieve the desired outcome.

Also, temporality is hard.