I needed a tool to evaluate internal and network benchmarks of Riemann, to ask questions like:

- Is parser function A or B more efficient?

- How many threads should I allocate to the worker threadpool?

- How did commit 2556 impact the latency distribution?

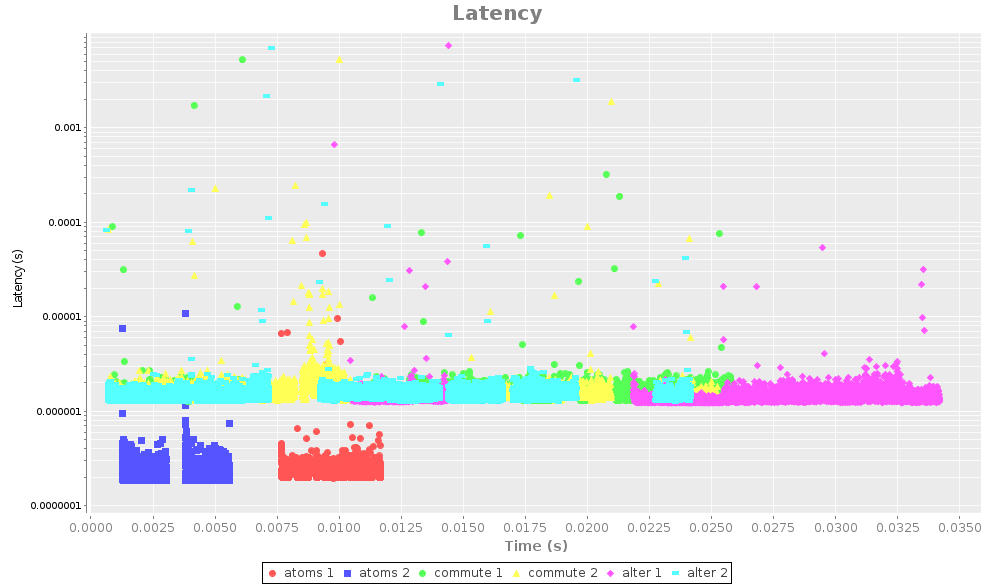

In dealing with “realtime” systems it’s often a lot more important to understand the latency distribution than a single throughput figure, and for GC reasons you often want to see how latency changes over time. Basho Bench does this well, but it’s in Erlang, which rules out microbenchmarking of Riemann functions (e.g. at the REPL). So I’ve hacked together this little thing I’m calling Schadenfreude (from German; “happiness at the misfortune of others”). Sums up how I feel about benchmarks in general.
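To make the "distribution over time" idea concrete, here's a minimal sketch of summarizing latencies by percentile within fixed time windows. It is not Schadenfreude's code: the function names and the `{:time ... :latency ...}` sample shape are assumptions made up for illustration.

```clojure
;; Hypothetical helpers, not part of Schadenfreude.

(defn percentile
  "Nearest-rank percentile of a sorted, non-empty vector.
  p is an integer percentage from 1 to 100."
  [sorted-xs p]
  {:pre [(seq sorted-xs) (<= 1 p 100)]}
  (let [n    (count sorted-xs)
        ;; Integer ceiling of p*n/100, which avoids floating-point rounding.
        rank (quot (+ (* p n) 99) 100)]
    (nth sorted-xs (dec rank))))

(defn latency-summary
  "Summarizes a collection of latencies as a few points on the distribution."
  [latencies]
  (let [xs (vec (sort latencies))]
    {:median (percentile xs 50)
     :p95    (percentile xs 95)
     :p99    (percentile xs 99)
     :max    (peek xs)}))

(defn windowed-summaries
  "Groups samples (maps with :time and :latency, an assumed shape) into
  windows of the given width and summarizes each window. Returns a
  sequence of [window-start summary] pairs ordered by time."
  [window samples]
  (->> samples
       (group-by #(quot (:time %) window))
       (into (sorted-map))
       (map (fn [[w ss]]
              [(* w window) (latency-summary (map :latency ss))]))))
```

A GC pause shows up here as a spike in `:p99` or `:max` for one window, which a single throughput number would average away.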

    ; A run is a benchmark specification. :f is the function we're going to
    ; measure--in this case, counting using
    ;
    ; 1. an atomic reference
    ; 2. unordered (commute) transactions
    ; 3. ordered (alter) transactions.
    ;
    ; :before and :after are callbacks to set up and tear down for the test run.
    (let [runs [(let [a (atom 0)]
                  {:name "atoms"
                   :before #(reset! a 0)
                   :f #(swap! a inc)})
                (let [r (ref 0)]
                  {:name "commute"
                   :before #(dosync (ref-set r 0))
                   :f #(dosync (commute r inc))})
                (let [r (ref 0)]
                  {:name "alter"
                   :before #(dosync (ref-set r 0))
                   :f #(dosync (alter r inc))})]

          ; For these benchmarks, we'll prime the JVM by doing the test twice and
          ; discarding the first one's results. We'll run each benchmark 10K times
          runs (map #(merge % {:prime true
                               :n 10000}) runs)

          ; And we'll try each one with 1 and 2 threads
          runs (mapcat (fn [run]
                         (map (fn [threads]
                                (merge run {:threads threads
                                            :name (str (:name run) " " threads)}))
                              [1 2]))
                       runs)

          ; Actually run the function and collect data
          runs (map record runs)

          ; And plot the results together
          plot (latency-plot runs)]

      ; For this one we'll use a log plot.
      (.setRangeAxis
        (.getPlot plot)
        (org.jfree.chart.axis.LogarithmicAxis. "Latency (s)"))

      (view plot))
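For a sense of what consuming a run map might involve, here's a rough sketch of a runner that honors `:before`, `:f`, `:after`, `:n`, `:threads` and `:prime`. It's a guess at the shape, not the actual `record` function: `run-once`, `record-sketch` and the `:latencies` key are hypothetical names.

```clojure
;; Hypothetical runner, not Schadenfreude's `record`.

(defn run-once
  "Calls :before, times n calls of :f split evenly across :threads
  threads, then calls :after. Returns a vector of latencies in seconds.
  If n is not divisible by threads, the remainder is dropped."
  [{:keys [before f after n threads] :or {n 1 threads 1}}]
  (when before (before))
  (let [per-thread (quot n threads)
        worker     (fn []
                     ;; vec forces every call to happen inside this thread.
                     (vec (repeatedly per-thread
                                      #(let [t0 (System/nanoTime)]
                                         (f)
                                         (/ (- (System/nanoTime) t0) 1e9)))))
        ;; doall starts every future before we block on any of them.
        futures    (doall (repeatedly threads #(future (worker))))
        latencies  (into [] (mapcat deref) futures)]
    (when after (after))
    latencies))

(defn record-sketch
  "Runs the benchmark, first doing one discarded pass when :prime is set.
  Returns the run map with the measured latencies under :latencies."
  [run]
  (when (:prime run)
    (run-once run))
  (assoc run :latencies (run-once run)))
```

Something like `(record-sketch {:f #(swap! a inc) :n 10000 :threads 2 :prime true})` would then hand back 10,000 latencies ready for plotting or summarizing.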

When I have something usable outside a REPL I’ll publish it to Clojars and GitHub. Right now I think the time alignment looks pretty dodgy, so I’d like to normalize it correctly and figure out what exactly “throughput” means. Oh, and the actual timing code is completely naive: no OS cache drop, no forced GC/finalizers, etc. I’m gonna look into tapping Criterium’s code for that.
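As a small step up from fully naive timing, here's a hand-rolled sketch that warms up and requests a GC before measuring. It is not Criterium's implementation, the names are hypothetical, and it leaves out finalizers and OS cache handling entirely.

```clojure
;; Hypothetical helpers; a sketch, not Criterium's approach.

(defn force-gc!
  "Asks the JVM to collect garbage a few times. System/gc is only a
  hint, so this lowers the odds of a collection landing mid-measurement
  but does not rule one out."
  []
  (dotimes [_ 3]
    (System/gc)))

(defn time-call
  "Runs f once and returns the elapsed wall-clock time in seconds."
  [f]
  (let [t0 (System/nanoTime)]
    (f)
    (/ (- (System/nanoTime) t0) 1e9)))

(defn timed
  "Makes `warmup` untimed calls so the JIT can compile f, requests a GC,
  then returns a vector of n latencies in seconds."
  [f n warmup]
  (dotimes [_ warmup] (f))
  (force-gc!)
  (vec (repeatedly n #(time-call f))))
```

For example, `(timed #(swap! a inc) 10000 10000)` would return 10,000 latencies for an atom increment after an equally long warmup.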
