I’ve been putting more work into riemann-java-client recently, since it’s definitely the bottleneck in performance testing Riemann itself. The existing RiemannTcpClient and RiemannRetryingTcpClient were threadsafe, but almost fully mutexed; using one essentially serialized all threads behind the client itself. For write-heavy workloads, I wanted to do better.

There are two logical optimizations I can make, in addition to choosing careful data structures, mucking with socket options, etc. The first is to bundle multiple events into a single Message, which the API supports. However, your code may not be structured in a way to efficiently bundle events, so where higher latencies are OK, the client can maintain a buffer of outbound events and flush it regularly.
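As a rough illustration of the buffering idea, here is a minimal sketch of a size-bounded batcher. `EventBatcher` and its `sender` callback are hypothetical names, not part of riemann-java-client; the callback stands in for whatever wraps a list of events in a single Message and writes it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical helper (not part of riemann-java-client): buffers events and hands them off in batches. */
final class EventBatcher<E> {
    private final int maxBatchSize;
    private final Consumer<List<E>> sender; // assumed to wrap the batch in one Message and send it
    private List<E> buffer = new ArrayList<>();

    EventBatcher(int maxBatchSize, Consumer<List<E>> sender) {
        if (maxBatchSize < 1) throw new IllegalArgumentException("maxBatchSize must be >= 1");
        this.maxBatchSize = maxBatchSize;
        this.sender = sender;
    }

    /** Adds an event and sends the whole buffer once it reaches maxBatchSize. */
    void add(E event) {
        List<E> full = null;
        synchronized (this) {
            buffer.add(event);
            if (buffer.size() >= maxBatchSize) full = swap();
        }
        // Send outside the lock so a slow network write does not block other producers.
        if (full != null) sender.accept(full);
    }

    /** Sends whatever is buffered; calling this from a timer bounds the added latency. */
    void flush() {
        List<E> batch;
        synchronized (this) {
            if (buffer.isEmpty()) return;
            batch = swap();
        }
        sender.accept(batch);
    }

    /** Replaces the buffer with an empty one and returns the old contents. Caller holds the lock. */
    private List<E> swap() {
        List<E> batch = buffer;
        buffer = new ArrayList<>();
        return batch;
    }
}
```

Calling `flush()` from something like a `ScheduledExecutorService` every few milliseconds gives the "flush it regularly" behavior; the flush interval is then the upper bound on the extra latency any single event pays.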

The second optimization is to take advantage of request pipelining. Riemann’s protocol is simple and synchronous: you send a Message over a TCP connection, and receive exactly one message in response. The existing clients, however, forced each caller to wait out a full round trip: the message had to cross the network, be processed by Riemann, and have its acknowledgement come back before the next request could go out. We can do better by pipelining requests: sending new requests before the previous responses have arrived, and matching up received messages with their corresponding requests later.

ThreadedClient does exactly that. All threads enqueue Messages into a lockfree queue, and receive Promise objects to be fulfilled when their response is available. The standard synchronous API is still available, and allows N threads to pipeline their requests together. Meanwhile, a writer thread sucks messages out of the write queue and sends them to Riemann, enqueuing written messages onto an in-flight queue. A reader thread pulls responses out of the socket and matches them to enqueued messages. Bounded queues provide backpressure, which limits the number of requests that can be in-flight at any time. This allows for reasonable bounds on event loss in the event of failure.

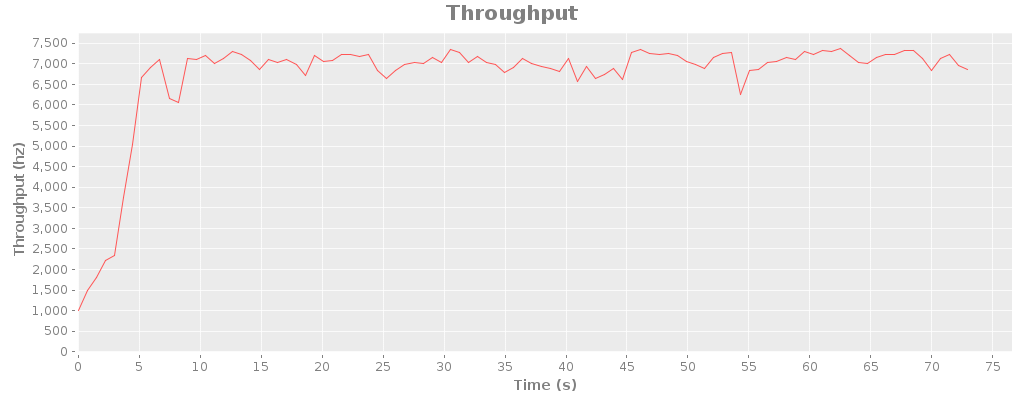
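To make the moving parts concrete, here is a minimal sketch of the same shape: a write queue, an in-flight queue, a writer thread, and a reader thread. It is not the riemann-java-client code. `Transport` and `PipelinedClient` are hypothetical names, `CompletableFuture` stands in for the Promise objects, and both queues are plain `ArrayBlockingQueue`s rather than a lockfree queue, to keep the example short. It assumes responses arrive in the same order the requests were written.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

/** Hypothetical stand-in for the TCP connection; responses are assumed to arrive in request order. */
interface Transport<Q, R> {
    void write(Q request) throws Exception;
    R read() throws Exception; // blocks until the next response arrives
}

/** Illustrative only; not the riemann-java-client implementation. */
final class PipelinedClient<Q, R> {
    private static final class Pending<Q, R> {
        final Q request;
        final CompletableFuture<R> promise = new CompletableFuture<>();
        Pending(Q request) { this.request = request; }
    }

    private final Transport<Q, R> transport;
    private final BlockingQueue<Pending<Q, R>> writeQueue;
    private final BlockingQueue<Pending<Q, R>> inFlight;
    private final Thread writer = new Thread(this::writeLoop, "pipeline-writer");
    private final Thread reader = new Thread(this::readLoop, "pipeline-reader");
    private volatile Throwable failure;

    PipelinedClient(Transport<Q, R> transport, int capacity) {
        this.transport = transport;
        this.writeQueue = new ArrayBlockingQueue<>(capacity);
        this.inFlight = new ArrayBlockingQueue<>(capacity);
        writer.setDaemon(true);
        reader.setDaemon(true);
        writer.start();
        reader.start();
    }

    /** Enqueues a request and returns a promise; blocks only while the write queue is full. */
    CompletableFuture<R> sendAsync(Q request) {
        Pending<Q, R> p = new Pending<>(request);
        try {
            // Retry with a timeout so callers notice a failed client instead of blocking forever.
            while (failure == null && !writeQueue.offer(p, 100, TimeUnit.MILLISECONDS)) { }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            p.promise.completeExceptionally(e);
        }
        if (failure != null) p.promise.completeExceptionally(failure);
        return p.promise;
    }

    /** Synchronous API: many threads calling this still share one pipeline. */
    R send(Q request) { return sendAsync(request).join(); }

    private void writeLoop() {
        Pending<Q, R> p = null;
        try {
            while (failure == null) {
                p = writeQueue.take();
                transport.write(p.request);
                inFlight.put(p); // blocks when too many requests are unacknowledged
                p = null;
            }
        } catch (Exception e) {
            shutdown(e);
            if (p != null) p.promise.completeExceptionally(failure);
        }
    }

    private void readLoop() {
        Pending<Q, R> p = null;
        try {
            while (failure == null) {
                p = inFlight.take();                  // oldest unacknowledged request
                p.promise.complete(transport.read()); // the next response belongs to it
                p = null;
            }
        } catch (Exception e) {
            shutdown(e);
            if (p != null) p.promise.completeExceptionally(failure);
        }
    }

    /** Crude error handling: the first failure stops both threads and fails every queued request. */
    private synchronized void shutdown(Throwable cause) {
        if (failure == null) failure = cause;
        writer.interrupt();
        reader.interrupt();
        Pending<Q, R> p;
        while ((p = writeQueue.poll()) != null) p.promise.completeExceptionally(failure);
        while ((p = inFlight.poll()) != null) p.promise.completeExceptionally(failure);
    }
}
```

The `capacity` argument is what bounds the damage on failure: at most about one queue's worth of requests can be written but unacknowledged at once, plus one queue's worth waiting to be written.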

Here’s what the naive client (which waits for each request’s full round trip) looks like on loopback:

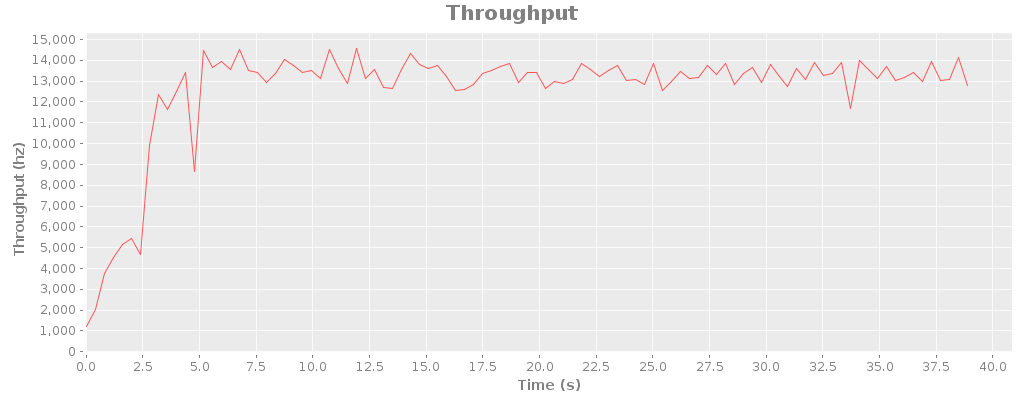

And here’s the same test with a RiemannThreadedClient:

I’ve done no tuning or optimization to this algorithm, and error handling is rough at best. It should perform best across real-world networks where latency is nontrivial. Even on loopback, though, I’m seeing roughly double the throughput at the cost of roughly double the per-event latency.
