Previously, on Jepsen, we demonstrated stale and dirty reads in MongoDB. In this post, we return to Elasticsearch, which loses data when the network fails, nodes pause, or processes crash.

Nine months ago, in June 2014, we saw Elasticsearch lose both updates and inserted documents during transitive, nontransitive, and even single-node network partitions. Since then, folks continue to refer to the post, often asking whether the problems it discussed are still issues in Elasticsearch. The response from Elastic employees is often something like this:

In this Jepsen post, I’d like to follow up on Elastic’s reaction to the first post, how they’ve addressed the bugs we discussed, and what data-loss scenarios remain. Most of these behaviors are now documented, so this is mainly a courtesy for users who requested a followup post. Cheers!

Reactions

The initial response from Elasticsearch was somewhat muted. Elasticsearch’s documentation remained essentially unchanged for months, and like Datastax with the Cassandra post, the company made no mention of the new findings in their mailing list or blog.

However, Elastic continued to make progress internally. Around September they released a terrific page detailing all known data-loss and consistency problems in Elasticsearch, with links to relevant tickets, simple explanations of the cause for each bug, and descriptions of the possible consequences. This documentation is exactly the kind of resource you need when evaluating a new datastore, and I’d like to encourage every database vendor to publish a similar overview. I would like to see Elasticsearch reference known failure modes in the documentation for particular features–for instance, Optimistic Concurrency Control could mention that version checks are not guaranteed to actually work: your update could be lost or misapplied–but the addition of a failure-modes page is a dramatic improvement and deserves congratulation!

Moreover, the resiliency page provides official confirmation that Elastic is using Jepsen internally to replicate this work and help improve their safety guarantees, in addition to expanding their integrated tests for failure scenarios.

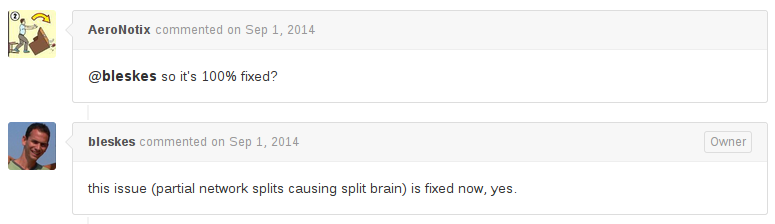

Elastic has previously expressed that they’d prefer to design their own consensus system (ZenDisco) instead of re-using an existing one. All the issues we discussed in the last Elasticsearch post essentially stem from that decision–and I was hoping that they might choose to abandon ZenDisco in favor of a proven algorithm. That hasn’t happened yet, but Elastic did land a massive series of patches and improvements to ZenDisco, and closed the intersecting-partitions split-brain ticket on September first, 2014. The fixes appeared definitive:

And as folks referenced the previous Jepsen post on Twitter, I noticed a common response from Elasticsearch users: because the ticket is closed, we can safely assume that Elasticsearch no longer loses data in network partitions. Elasticsearch’s resiliency page is very clear that this is not the case: ES currently fails their Jepsen tests and is still known to lose documents during a network partition.

So, is the intersecting-network-partitions-causing-split-brain bug fixed? Or are these problems still extant, and if so, how bad are they? I’ve updated the Jepsen tests for Elasticsearch to test the same scenarios on version 1.5.0.

Intersecting partitions

In this test, we insert a stream of documents, each containing a distinct integer, into a five-node Elasticsearch cluster. Meanwhile, we cut the network in half, but leave one node visible to both components. I’ve called this a “bridge”, or “nontransitive” partition, and the ticket refers to it as “partial” or “intersecting”–same topology in all cases. In version 1.1.0, this test caused Elasticsearch to elect a stable primary node on both sides of the partition (with the node in the middle considering itself a part of both clusters) for the entire duration of the partition. When the network healed, one primary would overwrite the other, losing about half of the documents written during the partition.

With the improvements to ZenDisco, the window for split-brain is significantly shorter. After about 90 seconds, Elasticsearch correctly elects a single primary, instead of letting two run concurrently. The new tests only show the loss of a few acknowledged documents, usually in a window of a few seconds around the start of a partition. In this case, 22 documents were successfully inserted, then discarded by Elasticsearch.

{:valid? false,

:lost

"#{795 801 809 813..815 822..827 830 832..833 837 839..840 843..846}",

:recovered

"#{46 49..50 251 255 271..272 289 292 308..309 329 345 362 365 382..383 435 445 447..448 453..455 459 464 478..479 493 499 517 519 526 529 540 546..547 582 586 590 605 610 618 621 623 626 628 631 636 641 643 646 651 653 656 658 661 663 668 671 673 676 678 681 683 686 691 693 696 698 703 708 710 714..715 718 720 729 748 753}",

:ok

"#{0..46 49..51 54..67 70..85 88..104 106 108..123 126..141 143 145..159 161..162 164..178 180 182..196 199..214 216..217 219..233 236..325 327..345 347..420 422 424..435 437 441..442 445 447..448 450 452..455 459 461 463..471 473 476..480 483..494 498..502 506..510 512 516..520 523 526 529..530 532 536..542 545..549 552..560 566 568..570 572 575..576 578..579 582..586 590..595 600 604..605 610 612 618 621 623 626 628 631 636 641 643 646 651 653 656 658 661 663 668 671 673 676 678 681 683 686 691 693 696 698 703 708 710 714..715 718 720 729 748 753}",

:recovered-frac 80/897,

:unexpected-frac 0,

:unexpected "#{}",

:lost-frac 22/897,

:ok-frac 553/897}
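The result maps in this post can be read as set arithmetic over three inputs: the integers a client tried to insert, the inserts Elasticsearch acknowledged, and the integers found by a final read. The sketch below is an assumption about how such a set-style check classifies elements, inferred from the fields in the maps; the function and argument names are hypothetical.

```python
def classify(attempted, acknowledged, final_read):
    """Classify inserted elements the way the result maps appear to.

    Assumption: :ok is everything read back that we tried to add, so it
    overlaps with :recovered, as it does in the maps in this post.
    """
    attempted = set(attempted)
    acknowledged = set(acknowledged)
    final_read = set(final_read)

    ok = final_read & attempted          # read back, and we tried to add it
    lost = acknowledged - final_read     # acknowledged, then missing
    recovered = ok - acknowledged        # outcome unknown, yet present
    unexpected = final_read - attempted  # present, but never attempted
    return {
        "valid?": not lost and not unexpected,
        "ok": ok,
        "lost": lost,
        "recovered": recovered,
        "unexpected": unexpected,
    }


# Toy history: 3 was acknowledged but vanished; 4 timed out but survived.
result = classify(attempted=range(6),
                  acknowledged={0, 1, 2, 3},
                  final_read={0, 1, 2, 4})
print(sorted(result["lost"]), sorted(result["recovered"]))
```

Under this reading, a single element in the lost set is enough to make the whole run invalid, which is why every map in this post reports `:valid? false`.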

The root ticket here is probably 7572: A network partition can cause in flight documents to be lost, which was opened only a couple days after Mr. Leskes closed the intersecting-partitions ticket as solved. The window for split-brain is significantly shorter with the new patches, but the behavior of losing documents during this type of partition still exists despite the ticket being closed.

Expect delays

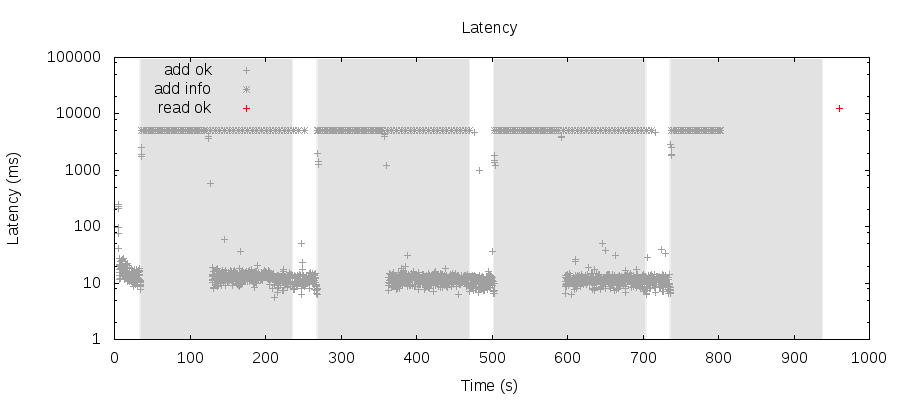

I touched on this in the previous post, but I’d like to restate: electing a new primary in Elasticsearch continues to be an expensive process. Because of that 90-second hardcoded timeout, no matter how low you set the failure detection timeouts and broadcast intervals, Elasticsearch will go globally unavailable for writes for a minute and a half when a network partition isolates a primary.

Shaded regions indicate when a network partition has isolated a primary node. + indicates a successful insert, and * shows a crashed update which may or may not have succeeded. Jepsen imposes a hard five-second timeout on all inserts, which forms the upper bound to the graph. Note that every operation times out for 90 seconds after a partition begins.

Because each of the five clients in this test is talking to a distinct node in the cluster, we can see partial availability in the steady state–clients talking to the isolated primary continue to time out, and clients talking to the majority component resume operations once that component elects a new primary.
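The distinction between a successful insert and a crashed one that "may or may not have succeeded" comes down to how the client treats a timeout. Here is a minimal sketch, assuming a hypothetical `insert` callable and a hypothetical `Rejected` exception for writes the server definitely refused; it is not Jepsen's client code.

```python
from concurrent.futures import ThreadPoolExecutor


class Rejected(Exception):
    """Hypothetical: the server definitely refused the write."""


def attempt_insert(insert, doc, timeout_s=5.0):
    """Run one insert under a hard timeout and report what we know.

    Returns "ok" if acknowledged, "fail" if definitely refused, and
    "info" if the outcome is unknown (timeout, dropped connection, ...).
    """
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(insert, doc)
    try:
        future.result(timeout=timeout_s)
        return "ok"
    except Rejected:
        return "fail"
    except Exception:
        # The write may still have been applied; we cannot tell.
        return "info"
    finally:
        pool.shutdown(wait=False)  # do not block on a stuck request
```

Only "ok" results count as acknowledged. An "info" result that later shows up in the final read is what the result maps call recovered.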

Isolated primaries

One of the new bugs I discovered in Elasticsearch during the last round of Jepsen was that not only would it lose data during nontransitive partitions, but even partitions which cut the network into two completely connected isolated components–or even just isolating a single node–would induce the loss of dozens of documents.

That bug is unaffected by the changes to ZenDisco in 1.5.0. In this particular run, isolating only primary nodes from the rest of the cluster could cause almost a quarter of acknowledged documents to be lost.

{:valid? false,

:lost

"#{471..473 546..548 551..565 567..568 570..584 586 588..602 604 606..621 623 625..639 641..642 644..658 660 662..676 678 680..694 696 698..712 714..715 717..731 733 735..750 752 754..768 770 772..777}",

:recovered

"#{49..50 58 79..82 84..87 89..92 94..97 99..102 104..107 109..112 114..123 128 149 170 195 217 241 264 298 332..333 335 337..338 340 342..345 347..350 352..353 355..356 358..361 363..366 368..371 373..375 378..381 383..386 388..391 810 812 817 820 825 830 834..835 837 839..840 842 844..845 847 849..850 852 854..855 857 859..860 862 864..867 869..872 874..877 879 881 913 932}",

:ok

"#{0..53 58 79..82 84..87 89..92 94..97 99..102 104..107 109..112 114..299 301 332..333 335 337..338 340 342..345 347..350 352..353 355..356 358..361 363..366 368..371 373..376 378..381 383..386 388..391 393..405 407..428 430..450 452..470 810 812 817 820 825 830 834..835 837 839..840 842 844..845 847 849..850 852 854..855 857 859..860 862 864..867 869..872 874..877 879..882 884..895 897..902 904..919 921..939 941..946}",

:recovered-frac 134/947,

:unexpected-frac 0,

:unexpected "#{}",

:lost-frac 209/947,

:ok-frac 490/947}
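The sets in these maps are printed in a compressed form, where `a..b` stands for every integer from `a` through `b` inclusive. A short sketch for expanding that notation and checking a set against its reported fraction follows; the `expand` helper is hypothetical, and the input is the lost set from the map above.

```python
def expand(interval_set):
    """Expand a string like '#{1 3..5}' into the set {1, 3, 4, 5}."""
    body = interval_set.strip()
    if body.startswith("#{") and body.endswith("}"):
        body = body[2:-1]
    values = set()
    for token in body.split():
        lo, _, hi = token.partition("..")
        # A bare integer has no upper bound, so it is its own range.
        values.update(range(int(lo), int(hi or lo) + 1))
    return values


lost = expand(
    "#{471..473 546..548 551..565 567..568 570..584 586 588..602 604 "
    "606..621 623 625..639 641..642 644..658 660 662..676 678 680..694 "
    "696 698..712 714..715 717..731 733 735..750 752 754..768 770 772..777}"
)
print(len(lost), round(len(lost) / 947, 3))  # 209 0.221
```

The count agrees with the reported `:lost-frac 209/947`, a little over 22% of the run.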

This is not a transient blip for in-flight docs–the window of data loss extends for about 90 seconds, which is, coincidentally, one of the hard-coded timeouts in electing a new primary.

I’ve opened 10406 for this problem, because it’s also a split-brain issue. For several seconds, Elasticsearch is happy to believe two nodes in the same cluster are both primaries, will accept writes on both of those nodes, and later discard the writes to one side.

Sometimes the cluster never recovers from this type of partition, and hangs until you do a rolling restart. Wooooooo!

GC pauses

One of the things Elasticsearch users complain about is garbage collection-induced split-brain. This isn’t documented as something that can cause data loss on the resiliency page, so I’ve written a test which uses SIGSTOP and SIGCONT to pause a random primary process, simulating a long garbage-collection cycle, swap pressure, or an IO scheduler hiccup.

This causes Elasticsearch to lose data as well. Since the paused node isn’t executing any requests while it’s stuck, it doesn’t have the opportunity to diverge significantly from its peers–but when it wakes up, it’s happy to process an in-flight write as if it were still the legal primary, not realizing that it’s been superseded by an election while it was asleep. That can cause the loss of a few in-flight documents.
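The pause itself needs nothing more than the two signals named above. This is a rough sketch of the idea on a POSIX system, not the Jepsen nemesis; `pause_process` is a hypothetical helper, and finding the primary's process ID is left out.

```python
import os
import signal
import time


def pause_process(pid, seconds):
    """Freeze a process with SIGSTOP, then resume it with SIGCONT.

    While stopped, the process runs no code at all, so from its own
    point of view no time has passed when it wakes up.
    """
    os.kill(pid, signal.SIGSTOP)
    try:
        time.sleep(seconds)
    finally:
        # Always resume, even if the sleep is interrupted.
        os.kill(pid, signal.SIGCONT)
```

Calling this in a loop with pauses longer than the failure-detection timeout approximates the repeated-pause scenario.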

Repeated pauses, like you might see in a swapping system, a node under severe memory pressure, or one with a faulty disk, can result in more catastrophic data loss–in this case, ~9.3% of 2143 acknowledged documents were actually lost.

{:valid? false,

:lost

"#{1794 1803..1806 1808..1809 1811..1812 1817 1819..1820 1823 1828 1830 1832..1835 1837..1838 1841..1845 1847 1849 1851 1854..1856 1859 1861..1863 1865..1868 1871 1873 1875 1877 1879 1881..1882 1886..1889 1891 1894..1897 1900..1902 1904..1907 1909 1911..1912 1917..1919 1925 1927..1928 1931..1936 1938 1941..1943 1945 1947..1951 1953..1954 1956..1957 1959..1962 1964..1966 1970..1971 1973..1974 1977..1978 1980 1982 1986..1987 1989..1990 1992..1994 1998..1999 2001 2003 2007..2008 2010 2012 2014..2021 2023..2025 2031..2034 2039 2044 2046..2048 2050 2053..2057 2060..2062 2064..2068 2073..2075 2077..2078 2081..2082 2084..2087 2089..2090 2092 2094 2097..2098 2100 2102..2103 2107 2110 2112..2114 2116..2123 2127 2130..2134 2136 2138..2139 2141..2142}",

:recovered

"#{0 9 26 51 72 96 118 141 163 187 208 233 254 278 300 323 346 370 393 435 460 481 505 527 552 573 598 621 644 668 691 715 737 761 783 806 829 851 891 906 928 952 977 998 1022 1044 1069 1090 1113 1136 1159 1182 1204 1228 1251 1275 1298 1342..1344 1348 1371 1416 1438 1460 1484 1553 1576 1598 1622 1668 1692 1714 1736 1783..1784 1786..1787 1790..1792 1795..1797 1807 1852 1876 1898 1922 1967 1991 2105}",

:ok

"#{0..1391 1393..1506 1508..1529 1531..1644 1646..1779 1783..1784 1786..1788 1790..1793 1795..1802 1807 1810 1813..1816 1818 1821..1822 1824..1827 1831 1836 1839..1840 1846 1848 1850 1852..1853 1857..1858 1860 1864 1869..1870 1872 1874 1876 1878 1880 1883..1885 1890 1892..1893 1898..1899 1903 1908 1910 1913..1916 1920..1924 1926 1929..1930 1937 1939..1940 1944 1952 1955 1958 1963 1967..1969 1972 1975..1976 1979 1981 1983..1985 1988 1991 1995..1997 2000 2002 2004..2006 2009 2011 2022 2026..2030 2035..2036 2038 2040..2043 2045 2049 2051..2052 2058 2063 2069..2072 2076 2079..2080 2088 2091 2093 2095..2096 2099 2101 2104..2106 2108..2109 2111 2115 2124..2126 2128 2135 2137 2140}",

:recovered-frac 92/2143,

:unexpected-frac 0,

:unexpected "#{}",

:lost-frac 200/2143,

:ok-frac 1927/2143}

These sorts of issues are endemic to systems like ZenDisco which use failure detectors to pick authoritative primaries without threading the writes themselves through a consensus algorithm. The two must be designed in tandem.

Elastic is taking the first steps towards a consensus algorithm by introducing sequence numbers on writes. Like Viewstamped Replication and Raft, operations will be identified by a monotonic [term, counter] tuple. Coupling those sequence numbers to the election and replication algorithms is tricky to do correctly, but Raft and VR lay out a roadmap for Elasticsearch to follow, and I’m optimistic they’ll improve with each release.
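To see why a [term, counter] tuple helps with a primary that wakes up after being superseded, consider a toy replica that refuses any operation ordered at or below what it has already seen. This is an illustration of the general idea only; it is not Elasticsearch's design, and it omits everything that makes Raft and VR hard (log matching, quorums, recovery). All names are hypothetical.

```python
class Replica:
    """Toy replica that orders operations by (term, counter)."""

    def __init__(self):
        self.term = 0
        self.counter = 0
        self.log = []

    def observe_election(self, term):
        """Learn that a new primary was elected for a higher term."""
        if term > self.term:
            self.term, self.counter = term, 0

    def accept(self, term, counter, doc):
        """Apply a write only if it is newer than anything seen so far."""
        # Python compares tuples lexicographically: term first, then counter.
        if (term, counter) <= (self.term, self.counter):
            return False
        self.term, self.counter = term, counter
        self.log.append(((term, counter), doc))
        return True


replica = Replica()
replica.accept(1, 1, "a")              # old primary, term 1
replica.observe_election(2)            # election happens during a pause
stale = replica.accept(1, 2, "b")      # old primary wakes up and retries
fresh = replica.accept(2, 1, "c")      # new primary, term 2
print(stale, fresh)
```

The stale write is rejected instead of being accepted and later discarded, which turns silent data loss into an explicit failure the client can see.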

I’ve opened a ticket for pause-related data loss, mostly as a paper trail–Elastic’s engineers and I both suspect 7572, related to the lack of a consensus algorithm for writes, covers this as well. Initially Elastic thought this might be due to a race condition in index creation, so Lee Hinman (Dakrone) helped improve the test so it verified the index status before starting. That index-creation problem can also cause data loss, but it’s not the sole factor in this Jepsen test–even with these changes, Elasticsearch still loses documents when nodes pause.

{:valid? false,

:lost "#{1761}",

:recovered

"#{0 2..3 8 30 51 73 97 119 141 165 187 211 233 257 279 302 324 348 371 394 436 457 482 504 527 550 572 597 619 642 664 688 711 734 758 781 804 827 850 894 911 934 957 979 1003 1025 1049 1071 1092 1117 1138 1163 1185 1208 1230 1253 1277 1299 1342 1344 1350 1372 1415 1439 1462 1485 1508 1553 1576 1599 1623 1645 1667 1690 1714 1736 1779 1803 1825 1848 1871 1893 1917 1939 1964 1985 2010 2031 2054 2077 2100 2123 2146 2169 2192}",

:ok "#{0..1344 1346..1392 1394..1530 1532..1760 1762..2203}",

:recovered-frac 24/551,

:unexpected-frac 0,

:unexpected "#{}",

:lost-frac 1/2204,

:ok-frac 550/551}

Crashed nodes

You might assume, based on the Elasticsearch product overview, that Elasticsearch writes to the transaction log before confirming a write.

Elasticsearch puts your data safety first. Document changes are recorded in transaction logs on multiple nodes in the cluster to minimize the chance of any data loss.

I’d like to draw your attention to a corner of the Elasticsearch documentation you may not have noticed: the translog config settings, which note:

index.gateway.local.sync: How often the translog is fsynced to disk. Defaults to 5s.

To be precise, Elasticsearch’s transaction log does not put your data safety first. It puts it anywhere from zero to five seconds later.

In this test we kill random Elasticsearch processes with kill -9 and restart them. In a datastore like Zookeeper, Postgres, BerkeleyDB, SQLite, or MySQL, this is safe: transactions are written to the transaction log and fsynced before acknowledgement. In Mongo, the fsync flags ensure this property as well. In Elasticsearch, write acknowledgement takes place before the transaction is flushed to disk, which means you can lose up to five seconds of writes by default. In this particular run, ES lost about 10% of acknowledged writes.

{:valid? false,

:lost

"#{0..49 301..302 307 309 319..322 325 327 334 341 351 370 372 381 405 407 414 416 436 438 447 460..462 475 494 497 499 505 .......... 10339..10343 10345..10347 10351..10359 10361 10363..10365 10367 10370 10374 10377 10379 10381..10385 10387..10391 10394..10395 10397..10405 10642 10649 10653 10661 10664 10668..10669 10671 10674..10676 10681 10685 10687 10700..10718}",

:recovered

"#{2129 2333 2388 2390 2392..2395 2563 2643 2677 2680 2682..2683 4470 4472 4616 4635 4675..4682 4766 4864 4967 5024..5026 5038 5042..5045 5554 5556..5557 5696..5697 5749..5757 5850 5956 6063 6115..6116 6146 6148..6149 6151 6437 6541 6553..6554 6559 6561 11037 11136 11241 11291..11295}",

:ok

"#{289..300 303..306 308 310..318 323..324 326 328..333 335..338 340 343..346 348..350 352 354..359 361..363 365..368 371 ........ 10648 10650..10652 10654..10660 10662..10663 10665..10667 10670 10672..10673 10677..10680 10682..10684 10686 10688..10699 10964 10966..10967 10969 10972..11035 11037 11136 11241 11291..11299}",

:recovered-frac 37/5650,

:unexpected-frac 0,

:unexpected "#{}",

:lost-frac 23/226,

:ok-frac 1463/2825}

Remember that fsync is necessary but not sufficient for durability: you must also get the filesystem, disk controller, and disks themselves to correctly flush their various caches when requested.
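For contrast with a periodic sync, this is what flushing before acknowledgement looks like at the level of a single log file. It is a minimal sketch with a hypothetical `durable_append` helper, and it only covers the application-to-OS part of the chain described above.

```python
import os


def durable_append(log_file, record):
    """Append one record and fsync before acknowledging it.

    `log_file` is a binary file object opened for appending.
    """
    log_file.write(record + b"\n")
    log_file.flush()             # push Python's buffer to the OS
    os.fsync(log_file.fileno())  # ask the OS to push its cache to the device
    return "ack"                 # only now is it safe to acknowledge
```

A periodic-sync design returns the acknowledgement after the `write` and leaves the `fsync` to a timer, so a `kill -9` in between can drop writes the client already believes are safe.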

I don’t believe this risk is anywhere near as serious as the replication problems we’ve discussed so far. Elasticsearch replicates data to multiple nodes; my tests suggest it requires coordinated crashes of multiple processes to induce data loss. You might see this if a DC or rack loses power, if you colocate VMs, if your hosting provider restarts a bunch of nodes, etc, but those are not, in my experience, as common as network partitions.

However, it is a fault worth considering, and Zookeeper and other consensus systems do fsync on a majority of nodes before considering a write durable. On the other hand, systems like Riak, Cassandra, and Elasticsearch have chosen not to fsync before acknowledgement. Why? I suspect this has to do with sharding.

Fsync in Postgres is efficient because it can batch multiple operations into a single sync. If you do 200 writes every millisecond, and fsync once per millisecond, all 200 unflushed writes are contiguous in a single file in the write-ahead log.

In Riak, a single node may run dozens of virtual nodes, and each one has a distinct disk store and corresponding WAL. Elasticsearch does something similar: it may have n Lucene instances on a given node, and a separate translog file for each one. If you do 200 writes per millisecond against a Riak or Elasticsearch node, they’ll likely end up spread across all n logs, and each must be separately fsynced. This reduces locality advantages, forcing the disk and caches to jump back and forth between random blocks–which inflates fsync latencies.
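The cost difference can be put in rough numbers by counting how many distinct logs one batch of writes touches, since each touched log needs its own fsync. The sketch below assumes writes are routed by key modulo the number of logs, which is a simplification chosen for illustration rather than how Riak or Elasticsearch actually route.

```python
def fsyncs_per_batch(write_keys, n_logs):
    """Number of distinct logs touched by a batch, i.e. fsyncs to flush it."""
    return len({key % n_logs for key in write_keys})


batch = range(200)  # 200 writes arriving in the same millisecond
print(fsyncs_per_batch(batch, 1))   # one shared write-ahead log
print(fsyncs_per_batch(batch, 12))  # twelve per-shard translogs
```

With one log the whole batch is covered by a single sync; with twelve, the same batch needs twelve, each landing in a different file.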

Anyway, depending on how durable Elasticsearch aims to be, they may wind up implementing a unified translog and blocking acknowledgements until writes have been flushed to disk. Or they may decide that this is the intended balance and keep things where they are–after all, replication mitigates the risk of loss here. Since crash-restart durable storage is a prerequisite for most consensus algorithms, I have a hunch we’ll see stronger guarantees as Elasticsearch moves towards consensus on writes.

Recap

Elasticsearch 1.5.0 still loses data in every scenario tested in the last Jepsen post, and in some scenarios I wasn’t able to test before. You can lose documents if:

- The network partitions into two intersecting components

- Or into two discrete components

- Or if even a single primary is isolated

- If a primary pauses (e.g. due to disk IO or garbage collection)

- If multiple nodes crash around the same time

However, the window of split-brain has been reduced; most notably, Elasticsearch will no longer maintain dual primaries for the full duration of an intersecting partition.

My recommendations for Elasticsearch users are unchanged: store your data in a database with better safety guarantees, and continuously upsert every document from that database into Elasticsearch. If your search engine is missing a few documents for a day, it’s not a big deal; they’ll be reinserted on the next run and appear in subsequent searches. Not using Elasticsearch as a system of record also insulates you from having to worry about ES downtime during elections.
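The continuous-upsert recommendation can be as simple as a job that walks the system of record and re-indexes everything. A minimal sketch follows, assuming the primary database can be iterated as ID/document pairs and that `upsert` is a hypothetical callable wrapping whatever indexing request your client library provides.

```python
def resync(system_of_record, upsert):
    """Re-send every document from the primary database to the search index.

    Upserts are idempotent here: documents already present are
    overwritten with the same content, missing ones are restored.
    """
    count = 0
    for doc_id, doc in system_of_record.items():
        upsert(doc_id, doc)
        count += 1
    return count


# Toy stand-ins: the search index has silently lost document 2.
database = {1: {"title": "a"}, 2: {"title": "b"}, 3: {"title": "c"}}
search_index = {1: {"title": "a"}, 3: {"title": "c"}}

resync(database, search_index.__setitem__)
print(search_index == database)
```

Run on a schedule, this bounds how long a lost document stays missing to one resync interval.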

Finally, Elastic has gone from having essentially no failure-mode documentation to a delightfully detailed picture of the database’s current and past misbehaviors. This is wonderful information for users and I’d love to see this from other vendors.

Up next: Aerospike.

This work is a part of my research at Stripe, and I would like to thank everyone there (especially Marc Hedlund) for helping me test distributed systems and publish articles like this. I’m indebted to Boaz Leskes, Lee Hinman and Shay Banon for helping to replicate and explain these test cases. Finally, my thanks to Duretti Hirpa, Coda Hale, and Camille Fournier–and especially to my accomplished and lovely wife, Mrs. Caitie McCaffrey–for their criticism and helpful feedback on earlier drafts.
