Jepsen: CockroachDB beta-20160829

Last fall, I worked with CockroachDB to review and extend their Jepsen test suite. We found new bugs leading to serializability violations, improved documentation, and demonstrated documented behavior around nonlinearizable multi-key transactions. You can read the full analysis on jepsen.io.

Jepsen: MongoDB 3.4.0-rc3

This fall, I worked with MongoDB to design a new Jepsen test for MongoDB. We discovered design flaws in the v0 replication protocol, plus implementation bugs in the v1 protocol, both of which allowed for the loss of majority-committed updates. While the v0 protocol remains broken, patches for v1 are available in MongoDB 3.2.12 and 3.4.0, and now pass the expanded Jepsen test suite.

You can read the full analysis at jepsen.io.

Jepsen: RethinkDB 2.2.3 reconfiguration

In the previous Jepsen analysis of RethinkDB, we tested single-document reads, writes, and conditional writes, under network partitions and process pauses. RethinkDB did not exhibit any nonlinearizable histories in those tests. However, testing with more aggressive failure modes, on both 2.1.5 and 2.2.3, has uncovered a subtle error in Rethink’s cluster membership system. This error can lead to stale reads, dirty reads, lost updates, node crashes, and table unavailability requiring an unsafe emergency repair. Versions 2.2.4 and 2.1.6, released last week, address this issue.

Until now, Jepsen tests have used a stable cluster membership throughout the test. We typically run the system being tested on five nodes, and although the network topology between the nodes may change, processes may crash and restart, and the system may elect new nodes as leaders, we do not introduce or remove nodes from the system while it is running. Thus far, we haven’t had to go that far to uncover concurrency errors.

Since RethinkDB passed its stable-membership partitioning tests, I offered the team a more aggressive failure model: we’d dynamically reconfigure the cluster membership during the test. This is a harder problem than consensus with fixed membership: both old and new nodes must gracefully agree on the membership change, ensure that both sets of nodes will agree on any operations performed during the handover, and finally transition to normal consensus on the new set of nodes. The delicate handoff of operations from old nodes to new provides ample opportunities for mistakes.

Jepsen: Aerospike

Previously, on Jepsen, we reviewed Elasticsearch’s progress in addressing data-loss bugs during network partitions. Today, we’ll see Aerospike 3.5.4, an “ACID database”, react violently to a basic partition.

[Update, 2018-03-07] See the followup analysis of 3.99.0.3

Aerospike is a high-performance, distributed, schema-less, KV store, often deployed in caching, analytics, or ad tech environments. Its five-dimensional data model is similar to Bigtable or Cassandra: namespaces (databases) contain sets (tables) of records, where keys identify records. Each record is a map of bin names to values. Aerospike has put a good deal of work into performance across good-size (~100TB) datasets, and is repositioning itself as a general purpose datastore competitive with, say, MongoDB.

Jepsen: Elasticsearch 1.5.0

Previously, on Jepsen, we demonstrated stale and dirty reads in MongoDB. In this post, we return to Elasticsearch, which loses data when the network fails, nodes pause, or processes crash.

Nine months ago, in June 2014, we saw Elasticsearch lose both updates and inserted documents during transitive, nontransitive, and even single-node network partitions. Since then, folks continue to refer to the post, often asking whether the problems it discussed are still issues in Elasticsearch. The response from Elastic employees is often something like this:

Jepsen: MongoDB stale reads

Please note: our followup analysis of 3.4.0-rc3 revealed additional faults in MongoDB’s replication algorithms which could lead to the loss of acknowledged documents–even with Majority Write Concern, journaling, and fsynced writes.

In May of 2013, we showed that MongoDB 2.4.3 would lose acknowledged writes at all consistency levels. Every write concern less than MAJORITY loses data by design due to rollbacks–but even WriteConcern.MAJORITY lost acknowledged writes, because when the server encountered a network error, it returned a successful, not a failed, response to the client. Happily, that bug was fixed a few releases later.

Since then I’ve improved Jepsen significantly and written a more powerful analyzer for checking whether or not a system is linearizable. I’d like to return to Mongo, now at version 2.6.7, to verify its single-document consistency. (Mongo 3.0 was released during my testing, and I expect they’ll be hammering out single-node data loss bugs for a little while.)

Automating Jepsen

If you, as a database vendor, implement a few features in your API, I can probably offer repeatable automated tests of your DB’s partition tolerance through Jepsen.

The outcome of these tests would be a set of normalized metrics for each DB like “supports linearizability”, “available for writes when a majority partition exists”, “available for writes when no majority available”, “fraction of writes successful”, “fraction of writes denied”, “fraction of writes acked then lost”, “95th latency during condition X”, and so forth. I’m thinking this would be a single-page web site–a spreadsheet, really–making it easy to compare and contrast DBs and find one that fits your safety needs.

At a minimum, I need to know:

Jepsen: Redis

Previously on Jepsen, we explored two-phase commit in Postgres. In this post, we demonstrate Redis losing 56% of writes during a partition.

Redis is a fantastic data structure server, typically deployed as a shared heap. It provides fast access to strings, lists, sets, maps, and other structures with a simple text protocol. Since it runs on a single server, and that server is single-threaded, it offers linearizable consistency by default: all operations happen in a single, well-defined order. There’s also support for basic transactions, which are atomic and isolated from one another.

Because of this easy-to-understand consistency model, many users treat Redis as a message queue, lock service, session store, or even their primary database. Redis running on a single server is a CP system, so it is consistent for these purposes.

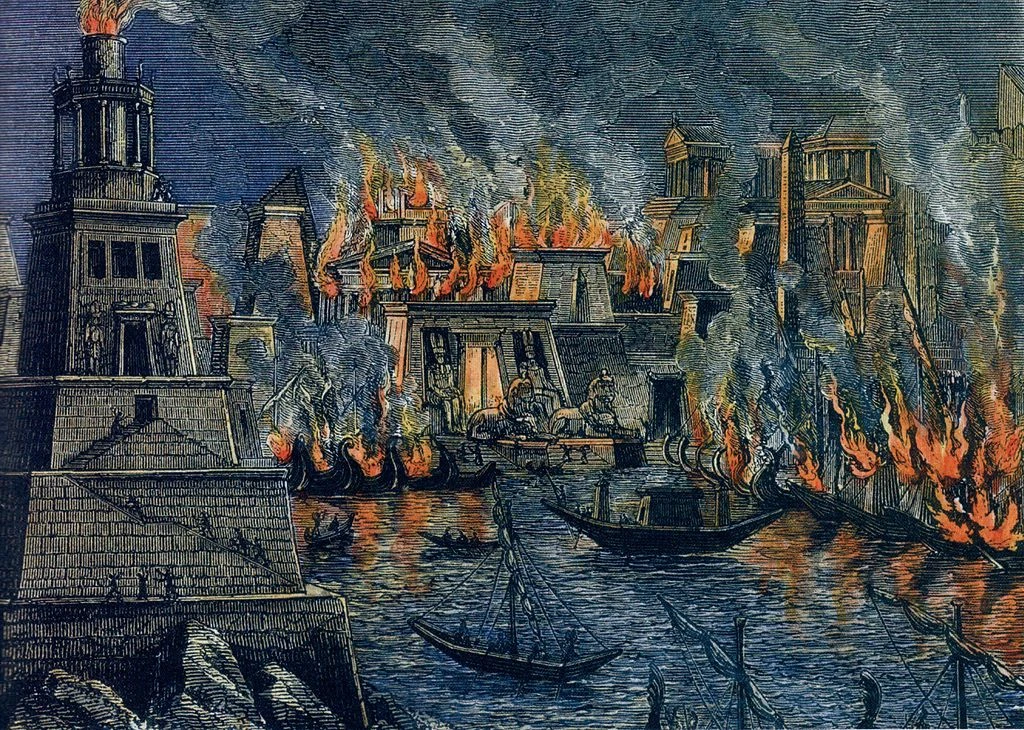

Burn the Library

Write contention occurs when two people try to update the same piece of data at the same time.

We know several ways to handle write contention, and they fall along a spectrum. For strong consistency (or what CAP might term “CP”) you can use explicit locking, perhaps provided by a central server; or optimistic concurrency where writes proceed through independent transactions, but can fail on conflicting commits. These approaches need not be centralized: consensus protocols like Paxos or two-phase-commit allow a cluster of machines to agree on an isolated transaction–either with pessimistic or optimistic locking, even in the face of some failures and partitions.

Do not expose Riak to the internet

Major thanks to John Muellerleile (@jrecursive) for his help in crafting this.

Actually, don’t expose pretty much any database directly to untrusted connections. You’re begging for denial-of-service issues; even if the operations are semantically valid, they’re running on a physical substrate with real limits.

Riak, for instance, exposes mapreduce over its HTTP API. Mapreduce is code; code which can have side effects; code which is executed on your cluster. This is an attacker’s dream.