Chronos is a distributed task scheduler (cf. cron) for the Mesos cluster management system. In this edition of Jepsen, we’ll see how simple network interruptions can permanently disrupt a Chronos+Mesos cluster.

Chronos relies on Mesos, which has two flavors of node: master nodes, and slave nodes. Ordinarily in Jepsen we’d refer to these as “primary” and “secondary” or “leader” and “follower” to avoid connotations of, well, slavery, but the master nodes themselves form a cluster with leaders and followers, and terms like “executor” have other meanings in Mesos, so I’m going to use the Mesos terms here.

Mesos slaves connect to masters and offer resources like CPU, disk, and memory. Masters take those offers and make decisions about resource allocation using frameworks like Chronos. Those decisions are sent to slaves, which actually run tasks on their respective nodes. Masters form a replicated state machine with a persistent log. Both masters and slaves rely on Zookeeper for coordination and discovery. Zookeeper is also a replicated persistent log.

Chronos runs on several nodes, and uses Zookeeper to discover Mesos masters. The Mesos leading master offers CPU, disk, etc. to Chronos, which in turn attempts to schedule jobs at their correct times. Chronos persists job configuration in Zookeeper, and may journal additional job state to Cassandra. Chronos has its own notion of leader and follower nodes, independent from both Mesos and Zookeeper.

There are, in short, a lot of moving parts here–which leads to the question at the heart of every Jepsen test: will it blend?

Designing a test

Zookeeper will run across all 5 nodes. Our production Mesos installation separates control from worker nodes, so we’ll run Mesos masters on n1, n2, and n3; and Mesos slaves on n4 and n5. Finally, Chronos will run across all 5 nodes. We’re working with Zookeeper version 3.4.5+dfsg-2, Mesos 0.23.0-1.0.debian81, and Chronos 2.3.4-1.0.81.debian77; the most recent packages available in Wheezy and the Mesosphere repos as of August 2015.

Jepsen works by generating random operations and applying them to the system, building up a concurrent history of operations. We need a way to create new, randomized jobs, and to see what runs have occurred for each job. To build new jobs, we’ll write a stateful generator which emits jobs with a unique integer :name, a :start time, a repetition :count, a run :duration, an :epsilon window allowing jobs to run slightly late, and finally, an :interval between the start of each window.

This may seem like a complex way to generate tasks, and indeed earlier generators were much simpler–however, they led to failed constraints. Chronos takes a few seconds to spin up a task, which means that a task could run slightly after its epsilon window. To allow this minor fault we add an additional epsilon-forgiveness as padding, allowing Chronos to fudge its guarantees somewhat. Chronos also can’t run tasks immediately after their submission, so we have a small head-start delaying the beginning of a job. Finally, Chronos tries not to run tasks concurrently, which bounds the interval between targets. We ensure that the interval is large enough that the task could run at the end of the target’s epsilon window, plus that epsilon forgiveness, and still complete running before the next window begins.
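To make those constraints concrete, here is a minimal Python sketch of such a generator. It is not the test's actual code: the names `Job`, `job_generator`, `EPSILON_FORGIVENESS`, and `HEAD_START`, and every numeric range below, are assumptions chosen for illustration. The only part taken from the description above is the rule that the interval must exceed epsilon plus forgiveness plus duration.

```python
import random
from dataclasses import dataclass

EPSILON_FORGIVENESS = 5  # assumed padding, in seconds, for slow task startup
HEAD_START = 10          # assumed delay, in seconds, before the first target


@dataclass(frozen=True)
class Job:
    name: int       # unique integer name
    start: float    # time of the first target, seconds since the epoch
    count: int      # number of repetitions
    duration: int   # how long each run sleeps, in seconds
    epsilon: int    # how late a run may begin, in seconds
    interval: int   # seconds between the starts of consecutive windows


def job_generator(now, rng=None):
    """Yield an endless stream of jobs with unique, increasing names.

    `now` is a zero-argument callable returning the current time in seconds.
    """
    rng = rng or random.Random()
    name = 0
    while True:
        duration = rng.randint(0, 10)
        epsilon = 10 + rng.randint(0, 20)
        # A run may begin at the very end of the padded epsilon window and
        # must still finish before the next window opens.
        min_interval = epsilon + EPSILON_FORGIVENESS + duration + 1
        interval = min_interval + rng.randint(0, 30)
        count = 1 + rng.randint(0, 99)
        yield Job(name, now() + HEAD_START, count, duration, epsilon, interval)
        name += 1
```

Keeping the counter inside the generator is what makes it stateful: every job drawn from one generator instance gets a distinct name.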

Once jobs are generated, we transform them into a suitable JSON representation and make an HTTP POST to submit them to Chronos. Only successfully acknowledged jobs are required for the analysis to pass.
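As a rough illustration of that transformation, the sketch below builds a job payload and an unsent POST request in Python. The endpoint path and port are the ones that appear in the responses quoted later in this post. The field names follow Chronos's ISO 8601 job format as I understand it, and the `owner` address, the `command` string, and both function names are placeholders, so treat this as a sketch to check against the Chronos docs.

```python
import json
from datetime import datetime, timezone
from urllib.request import Request


def job_to_json(name, start, count, interval, epsilon, command):
    """Build a Chronos-style job map with an ISO 8601 repeating schedule."""
    start_iso = datetime.fromtimestamp(start, tz=timezone.utc).strftime(
        "%Y-%m-%dT%H:%M:%SZ"
    )
    return {
        "name": str(name),
        "command": command,
        "owner": "jepsen@example.com",  # placeholder address
        "epsilon": f"PT{epsilon}S",
        # R<count>/<start>/<period>: repeat `count` times, `interval` apart.
        "schedule": f"R{count}/{start_iso}/PT{interval}S",
    }


def add_job_request(node, job):
    """Prepare, but do not send, the POST that submits a job to one node."""
    return Request(
        f"http://{node}:4400/scheduler/iso8601",
        data=json.dumps(job).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` would actually submit it. A job should count as acknowledged only if that call returns a successful status.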

We need a way to identify which tasks ran and at what times. Our jobs will open a new file and write their job ID and current time, sleep for some duration, then, to indicate successful completion, write the current time again to the same file. We can reconstruct the set of all runs by parsing the files from all nodes. Runs are considered complete iff they wrote a final timestamp. In this particular test, all node clocks are perfectly synchronized, so we can simply union times from each node without correction.
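Reconstructing runs from those files might look something like this Python sketch. The file layout is an assumption (job ID on the first line, start time on the second, and the completion time on an optional third line), as are the function names.

```python
def parse_run(text, node):
    """Parse one run file; return None if no start time was recorded."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if len(lines) < 2:
        return None
    run = {"node": node, "name": int(lines[0]), "start": float(lines[1]), "end": None}
    if len(lines) >= 3:
        run["end"] = float(lines[2])
    return run


def complete(run):
    """A run is complete iff it wrote its final timestamp."""
    return run["end"] is not None


def all_runs(files_by_node):
    """Union the runs from every node, sorted by start time.

    `files_by_node` maps a node name to a list of file contents. No clock
    correction is applied, which relies on synchronized node clocks.
    """
    runs = []
    for node, texts in files_by_node.items():
        for text in texts:
            run = parse_run(text, node)
            if run is not None:
                runs.append(run)
    return sorted(runs, key=lambda r: r["start"])
```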

With the basic infrastructure in place, we’ll write a client which takes add-job and read operations and applies them to the cluster. As with all Jepsen clients, this one is specialized via (setup! client test node) into a client bound to a specific node, ensuring we route requests to both leaders and non-leaders.

Finally, we bind together the database, OS, client, and generators into a single test. Our generator emits add-job operations with a 30 second delay between each, randomly staggered by up to 30 seconds. Meanwhile, the special nemesis process cycles between creating and resolving failures every 200 seconds. This phase proceeds for a fixed time limit, after which the nemesis resolves any ongoing failures and we allow the system to stabilize. Finally, we have a single client read the current runs.

In order to evaluate the results, we need a checker, which examines the history of add-job and read operations, and identifies whether Chronos did what it was supposed to.

How do you measure a task scheduler?

What does it mean for a cron system to be correct?

The trivial answer is that tasks run on time. Each task has a schedule, which specifies the times–call them targets–at which a job should run. The scheduler does its job iff, for every target time, the task is run.

Since we aren’t operating in a realtime environment, there will be some small window of time during which the job should run–call that epsilon. And because we can’t control how long tasks run for, we just want to ensure that the run begins somewhere between the target time t and t + epsilon–we’ll allow tasks to complete at their leisure.

Because we can only see runs that have already occurred, not runs from the future, we need to limit our targets to those which must have completed by the time the read began.
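Expanding a job into its targets can be sketched as follows. This is an illustrative Python version, not the test's code: the dictionary keys mirror the job fields described earlier, while the `forgiveness` default and the exact cutoff rule (a target counts only if a run starting at the end of its padded window would have finished before the read began) are assumptions.

```python
def targets(job, read_time, forgiveness=5):
    """Return [start, end] windows for targets that must be done by read_time.

    `job` is a dict with start, count, interval, epsilon, and duration, all in
    seconds. `forgiveness` is assumed padding added to each epsilon window.
    """
    window = job["epsilon"] + forgiveness
    # Targets at or after this time could still legitimately be running.
    cutoff = read_time - window - job["duration"]
    out = []
    for i in range(job["count"]):
        t = job["start"] + i * job["interval"]
        if t >= cutoff:
            break
        out.append((t, t + window))
    return out
```

For a job starting at 100 with a 60 second interval, a 10 second epsilon, and a 5 second duration, a read at time 250 yields three targets; the fourth, at 280, is still in the future.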

Since this is a distributed, fault-tolerant system, we should expect multiple, possibly concurrent runs for a single target. If a task doesn’t complete successfully, we might need to retry it–or a node running a task could become isolated from a coordinator, forcing the coordinator to spin up a second run. It’s a lot easier to recover from multiple runs than no runs!

So, given some set of jobs acknowledged by Chronos, and a set of runs for each job, we expand each job into a set of targets, attempt to map each target to some run, and consider the job valid iff every target is satisfied.

Assigning values to possibly overlapping bins is a constraint logic problem. We can use Loco, a wrapper around the Choco constraint solver, to find a unique mapping from targets to runs. In the degenerate case when targets don’t overlap, we can simply sort both targets and runs and riffle them together. This approach is handy for getting partial solutions when the entire constraint problem can’t be satisfied.
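The riffle case is simple enough to sketch in a few lines of Python. This stands in for the non-overlapping case only; it is not the Loco-based solver, and the function name and return shape are my own.

```python
def riffle(targets, run_starts):
    """Greedily pair disjoint target windows with run start times.

    `targets` is a list of (lo, hi) windows that do not overlap, and
    `run_starts` is a list of run start times. Returns a list of
    (target, run_start_or_None) pairs in target order, so unsatisfied
    targets remain visible as a partial solution.
    """
    runs = sorted(run_starts)
    solution = []
    i = 0
    for lo, hi in sorted(targets):
        # Skip runs that began before this window opened.
        while i < len(runs) and runs[i] < lo:
            i += 1
        if i < len(runs) and runs[i] <= hi:
            solution.append(((lo, hi), runs[i]))
            i += 1  # each run satisfies at most one target
        else:
            solution.append(((lo, hi), None))
    return solution
```

Because the windows are disjoint and both sequences are sorted, a single pass is enough: a run skipped for one window can never fall inside a later one.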

This allows us to determine whether a set of runs satisfies a single job. To check multiple jobs, we simply group all runs by their job ID and solve each job independently, and consider the system valid iff every job is satisfiable by its runs.

Finally, we have to transform the history of operations–all those add-job operations followed by a read–into a set of jobs and a set of runs, and identify the time of the read so we can compute the targets that should have been satisfied. We can use the mappings of job targets to runs to compute overall correctness results, and to build graphs showing the behavior of the system over time.
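A sketch of that transformation in Python, assuming a history shaped like a list of operation maps with `type`, `f`, `process`, `time`, and `value` keys. That shape is modeled loosely on Jepsen histories, but the exact keys and the function name here are assumptions.

```python
from collections import defaultdict


def summarize_history(history):
    """Extract acknowledged jobs, runs grouped by job, and the read's start.

    Returns None when the history contains no successful read.
    """
    # Only jobs Chronos acknowledged are required to have run.
    jobs = [op["value"] for op in history
            if op["f"] == "add-job" and op["type"] == "ok"]

    ok_idx = max((i for i, op in enumerate(history)
                  if op["f"] == "read" and op["type"] == "ok"), default=None)
    if ok_idx is None:
        return None
    read_ok = history[ok_idx]

    # The read began at the matching invocation by the same process.
    read_invoke = next(op for op in reversed(history[:ok_idx])
                       if op["f"] == "read" and op["type"] == "invoke"
                       and op["process"] == read_ok["process"])

    runs_by_job = defaultdict(list)
    for run in read_ok["value"]:
        runs_by_job[run["name"]].append(run)

    return {"jobs": jobs, "runs": dict(runs_by_job),
            "read_time": read_invoke["time"]}
```

Each job can then be checked independently against its own runs, and the system is valid iff every acknowledged job is satisfied.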

With our test framework in place, it’s time to go exploring!

Results

To start, Chronos error messages are less than helpful. In response to an invalid job–perhaps due to a malformed date, for instance–it simply returns HTTP 400 with an empty body.

    {:orig-content-encoding nil,
     :request-time 121
     :status 400
     :headers {"Server" "Jetty(8.y.z-SNAPSHOT)"
               "Connection" "close"
               "Content-Length" "0"
               "Content-Type" "text/html;charset=ISO-8859-1"
               "Cache-Control" "must-revalidate,no-cache,no-store"}
     :body ""}

Chronos can also crash when proxying requests to the leader, causing invalid HTTP responses:

org.apache.http.ConnectionClosedException: Premature end of Content-Length delimited message body (expected: 1290; received: 0)

Or the brusque:

org.apache.http.NoHttpResponseException: n3:4400 failed to respond

Or the delightfully enigmatic:

    {:orig-content-encoding nil,
     :trace-redirects ["http://n4:4400/scheduler/iso8601"]
     :request-time 19476
     :status 500
     :headers {"Server" "Jetty(8.y.z-SNAPSHOT)"
               "Connection" "close"
               "Content-Length" "1290"
               "Content-Type" "text/html;charset=ISO-8859-1"
               "Cache-Control" "must-revalidate,no-cache,no-store"}
     :body "<html>\n
            <head>\n
            <meta http-equiv=\"Content-Type\" content=\"text/html;charset=ISO-8859-1\"/>\n
            <title>Error 500 Server Error</title>\n
            </head>\n
            <body>\n
            <h2>HTTP ERROR: 500</h2>\n
            <p>Problem accessing /scheduler/iso8601. Reason:\n
            <pre> Server Error</pre></p>\n
            <hr /><i><small>Powered by Jetty://</small></i>\n \n \n ... \n</html>\n"}

In other cases, you may not get a response from Chronos at all, because Chronos’ response to certain types of failures–for instance, losing its Zookeeper connection–is to crash the entire JVM and wait for an operator or supervising process, e.g. upstart, to restart it. This is particularly vexing because the Mesosphere Debian packages for Chronos don’t include a supervisor, and service chronos start isn’t idempotent, which makes it easy to run zero or dozens of conflicting copies of the Chronos process.

Chronos is the only system tested under Jepsen which hard-crashes in response to a network partition. The Chronos team asserts that allowing the process to keep running would allow split brain behavior, making this expected, if undocumented, behavior. As it turns out, you can also crash the Mesos master with a network partition, and Mesos maintainers say this is not how Mesos should behave, so this “fail-fast” philosophy may play out differently depending on what Mesos components you’re working with.

If you schedule jobs with intervals that are too frequent–even if they don’t overlap–Chronos can fail to run jobs on time, because the scheduler loop can’t handle granularities finer than --schedule_horizon, which is, by default, 60 seconds. Lowering the scheduler horizon to 1 second allows Chronos to satisfy all executions for intervals around 30 seconds–so long as no network failures occur.
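One cheap guard is to flag jobs whose interval is finer than the configured horizon before submitting them. The Python check below is a hypothetical helper, not part of Chronos or the test. The 60 second default is the figure cited above, and the job shape is assumed.

```python
DEFAULT_SCHEDULE_HORIZON = 60  # seconds; the default cited above


def jobs_finer_than_horizon(jobs, schedule_horizon=DEFAULT_SCHEDULE_HORIZON):
    """Return the names of jobs whose interval is shorter than the horizon.

    `jobs` is an iterable of dicts with `name` and `interval` (seconds) keys.
    """
    return [job["name"] for job in jobs if job["interval"] < schedule_horizon]
```

With the default horizon, a job repeating every 30 seconds would be flagged. With the horizon lowered to 1 second, it would not.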

However, if the network does fail (for instance, if a partition cleanly isolates two nodes from the other three), Chronos will fail to run any jobs–even after the network recovers.

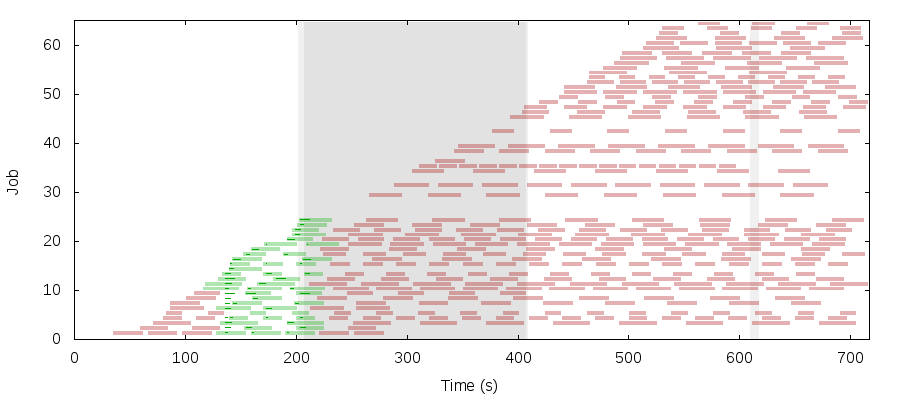

This plot shows targets and runs for each job over time. Targets are thick bars, and runs are narrow, darker bars. Green targets are satisfied by a run beginning in their time window, and red targets show where a task should have run but didn’t. The Mesos master dies at the start of the test and no jobs run until a failover two minutes later.

The gray region shows the duration of a network partition isolating [n2 n3] from [n1 n4 n5]. Chronos stops accepting new jobs for about a minute just after the partition, then recovers. ZK can continue running in the [n1 n4 n5] component, as can Chronos, but Mesos, to preserve a majority of its nodes [n1 n2 n3], can only allow a leading master in [n2 n3]. Isolating Chronos from the Mesos master prevents job execution during the partition–hence every target during the partition is red.

This isn’t the end of the world–it does illustrate the fragility of a system with three distinct quorums, all of which must be available and connected to one another, but there will always be certain classes of network failure that can break a distributed scheduler. What one might not expect, however, is that Chronos never recovers when the network heals. It continues accepting new jobs, but won’t run any jobs at all for the remainder of the test–every target is red even after the network heals. This behavior persists even when we give Chronos 1500+ seconds to recover.

The timeline here is roughly:

- 0 seconds: Mesos on n3 becomes leading master

- 15 seconds: Chronos on n1 becomes leader

- 224 seconds: A partition isolates [n1 n4] from [n2 n3 n5]

- 239 seconds: Chronos on n1 detects ZK connection loss and does not crash

- 240 seconds: A few rounds of elections; n2 becomes Mesos leading master

- 270 seconds: Chronos on n3 becomes leader and detects n2 as Mesos leading master

- 375 seconds: The partition heals

- 421 seconds: Chronos on n1 recovers its ZK connection and recognizes n3 as new Chronos leader.

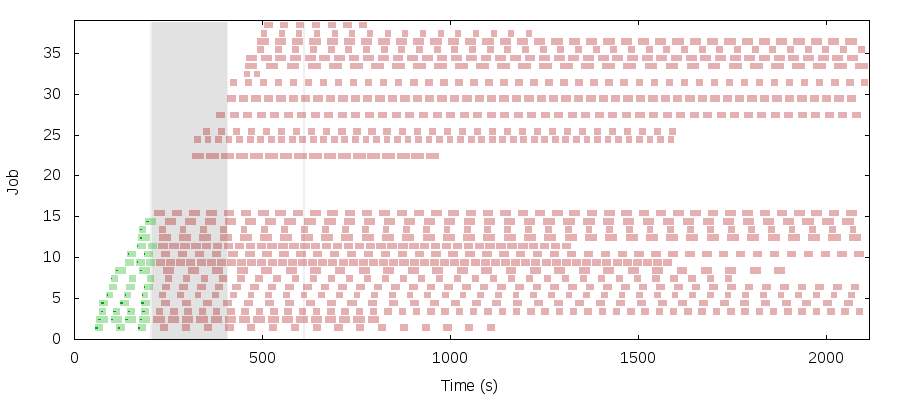

This is bug #520: after Chronos fails over, it registers with Mesos as an entirely new framework instead of re-registering. Mesos assumes the original Chronos framework still owns every resource in the cluster, and refuses to offer resources to the new Chronos leader. Why did the first leader consume all resources when it only needed a small fraction of them? I’m not really sure.

I0812 12:13:06.788936 12591 hierarchical.hpp:955] No resources available to allocate!

Studious readers may also have noticed that in this test, Chronos leader and non-leader nodes did not crash when they lost their connections, but instead slept and reconnected at a later time. This contradicts the design statements made in #513, where a crash was expected and necessary behavior. I’m not sure what lessons to draw from this, other than that operators should expect the unexpected.

As a workaround, the Chronos team recommended setting --offer_timeout (I chose 30 seconds) to allow Mesos to reclaim resources from the misbehaving Chronos framework. They also recommend automatically restarting both Chronos and Mesos processes–both can recover from some kinds of partitions but others cause them to crash.

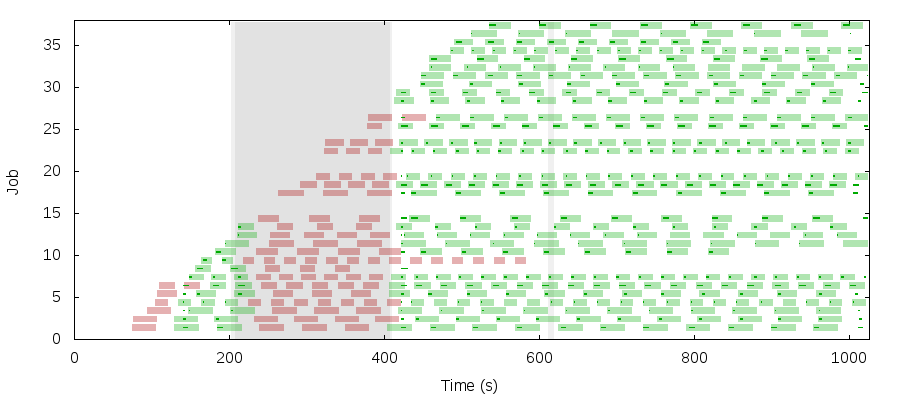

With these changes in place, Mesos may be able to recover some jobs but not others. Just after the partition resolves, it runs most (but not all!) jobs outside their target times. For instance, Job 14 runs twice in too short a window, just after the partition ends. Job 9, on the other hand, never recovers at all.

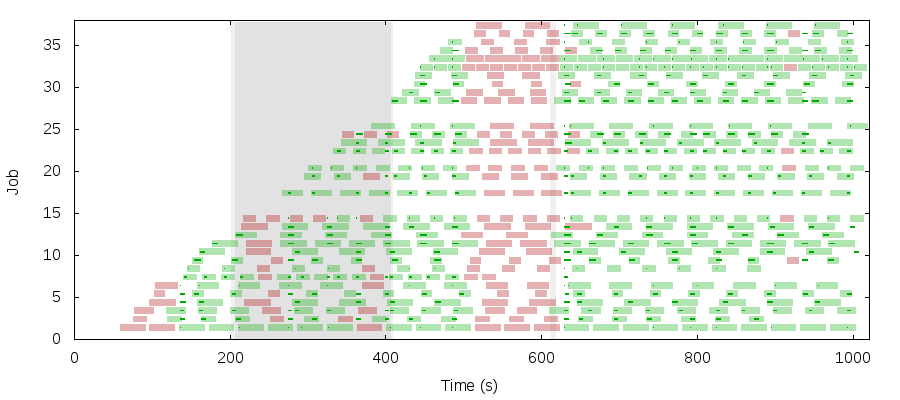

Or maybe you’ll get some jobs that run during a partition, followed by a wave of failures a few minutes after resolution–and sporadic scheduling errors later on.

I’m running out of time to work on Chronos and can’t explore much further, but you can follow the Chronos team’s work in #511.

Recommendations

In general, the Mesos and Chronos documentation is adequate for developers but lacks operational guidance; for instance, it omits that Chronos nodes are fragile by design and must be supervised by a daemon to restart them. The Mesosphere Debian packages don’t provide these supervisory daemons; you’ll have to write and test your own.

Similar conditions (e.g. a network failure) can lead to varied failure modes: for instance, both Mesos and Chronos can sleep and recover from some kinds of network partitions isolating leaders from Zookeeper, but not others. Error messages are unhelpful and getting visibility into the system is tricky.

In Camille Fournier’s excellent talk on consensus systems, she advises that “Zookeeper Owns Your Availability.” Consensus systems are a necessary and powerful tool, but they add complexity and new failure modes. Specifically, if the consensus system goes down, you can’t do work any more. In Chronos’s case, you’re not just running one consensus system, but three. If any one of them fails, you’re in for a bad time. An acquaintance notes that at their large production service, their DB has lost 2/3 quorum nodes twice this year.

Transient resource or network failures can completely disable Chronos. Most systems tested with Jepsen return to some sort of normal operation within a few seconds to minutes after a failure is resolved. In no Jepsen test has Chronos ever recovered completely from a network failure. As an operator, this fragility does not inspire confidence.

Production users confirm that Chronos handles node failure well, but can get wedged when ZK becomes unavailable.

If you are evaluating Chronos, you might consider shipping cronfiles directly to redundant nodes and having tasks coordinate through a consensus system–it could, depending on your infrastructure reliability and need for load-balancing, be simpler and more reliable. Several engineers suggest that Aurora is more robust, though more difficult to set up, than Chronos. I haven’t evaluated Aurora yet, but it’s likely worth looking into.

If you already use Chronos, I suggest you:

- Ensure your Mesos and Chronos processes are surrounded with automatic-restart wrappers

- Monitor Chronos and Mesos uptime to detect restart loops

- Ensure your Chronos schedule_horizon is shorter than job intervals

- Set Mesos’ --offer_timeout to some reasonable (?) value

- Instrument your jobs to identify whether they ran or not

- Ensure your jobs are OK with being run outside their target windows

- Ensure your jobs are OK with never being run at all

- Avoid network failures at all costs

I still haven’t figured out how to get Chronos to recover from a network failure; presumably some cycle of total restarts and clearing ZK can fix a broken cluster state, but I haven’t found the right pattern yet. When Chronos fixes this issue, it’s likely that it will still refuse to run jobs during a partition. Consider whether you would prefer multiple or zero runs during network disruption–if zero is OK, Chronos may still be a good fit. If you need jobs to keep running during network partitions, you may need a different system.

This work is a part of my research at Stripe, where we’re trying to take systems reliability more seriously. My thanks to Siddarth Chandrasekaran, Brendan Taylor, Shale Craig, Cosmin Nicolaescu, Brenden Matthews, Timothy Chen, and Aaron Bell, and to their respective teams at Stripe, Mesos, and Mesosphere for their help in this analysis. I’d also like to thank Caitie McCaffrey, Kyle Conroy, Ines Sombra, Julia Evans, and Jared Morrow for their feedback.
