Previously, on Jepsen, we saw Chronos fail to run jobs after a network partition. In this post, we’ll see MariaDB Galera Cluster allow transactions to read partially committed state.

Galera Cluster extends MySQL (and MySQL’s fork, MariaDB) to clusters of machines, all of which support reads and writes. It uses a group communication system to broadcast writesets and certify each for use. Unlike most Postgres replication systems, it handles the failure and recovery of all nodes automatically, and unlike MySQL Cluster, it has only one (as opposed to three) types of node. The MariaDB Galera packages are particularly easy to install and configure.

Galera Cluster uses the normal InnoDB isolation levels locally–but we’re interested in cluster-wide consistency guarantees. Between nodes, Galera claims to implement Snapshot Isolation–a reasonably strong consistency model.

Transaction isolation also occurs at the cluster level. Between transactions processing on separate nodes, Galera Cluster implements a transaction level called SNAPSHOT-ISOLATION. The SNAPSHOT-ISOLATION level occurs between REPEATABLE-READ and SERIALIZABLE.

This is not strictly speaking correct–but to understand why, we’ll need to know a little more about SQL isolation levels.

SQL Consistency Models

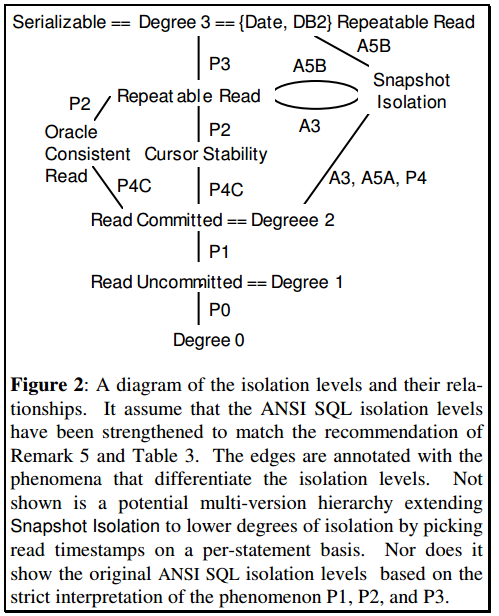

The standard ANSI SQL isolation models define four isolation levels for transactions: read uncommitted, read committed, repeatable read, and serializable. Each level prevents an additional kind of unwanted phenomena: dirty reads, fuzzy reads, and phantoms. The standard’s definition of these phenomena is somewhat ambiguous, and has been extended to a more rigorous spec by Adya and others.

For instance, Read Uncommitted (RU) must prohibit Dirty Write, which Adya terms P0. In P0, one transaction modifies a record written by another before that transaction commits.

| Dirty Write | (P0): | w1(x) ... w2(x) |

In this notation, r1(x) means “transaction T1 read x”. A w denotes a write, a means abort, and c indicates transaction commit.

Read Committed (RC) prohibits P0 and also P1: Dirty Read. Repeatable Read (RR) goes one step further and prohibits P2: “Fuzzy Read”. Finally, Serializability (1SR) adds one final exclusion: P3, or “Phantom”. Serializability is a very strong constraint: all transactions must appear to execute atomically–results should be equivalent to a system in which the transactions ran strictly one after the next, rather than concurrently.

| Dirty Write | (P0): | w1(x) ... w2(x) | Prohibited by RU, RC, RR, 1SR |

| Dirty Read | (P1): | w1(x) ... r2(x) | Prohibited by RC, RR, 1SR |

| Fuzzy Read | (P2): | r1(x) ... w2(x) | Prohibited by RR, 1SR |

| Phantom | (P3): | r1(P) ... w2(y in P) | Prohibited by 1SR |

There’s a neat kind of symmetry here: P1 and P2 are duals of each other, preventing a read from seeing an uncommitted write, and preventing a write from clobbering an uncommitted read, respectively. P0 prevents two writes from stepping on each other, and we could imagine its dual r1(x) … r2(x)–but since reads don’t change the value of x they commute, and we don’t need to prevent them from interleaving. Finally, preventing P3 ensures the stability of a predicate P, like a where clause–if you read all people named “Maoonga”, no other transaction can sneak in and add someone with the same name until your transaction is done.

If you’re having trouble figuring out what these isolation levels actually allow, you’re not alone. The anomalies prevented (and allowed!) by Read Uncommitted, Read Committed, etc are derived from specific implementation strategies. If you use locks for concurrency control, and lock records which are written until the transaction commits (a “long” lock), you prevent P0. If you add a short lock on reads (just for the duration of the read, not until commit time), you prevent P1. If you acquire long locks on both writes and reads you prevent P2, and locking predicates prevents P3. The standard doesn’t really guarantee understandable behavior–it just codifies the behavior given by existing, lock-oriented databases.

Locks aren’t the only ways we can protect transactional integrity. Some MVCC databases, like Oracle and Postgres, offer a different kind of model: Snapshot Isolation.

Snapshot Isolation

Snapshot Isolation arises from Berenson et al’s critique of the ANSI SQL isolation levels. The paper is subtle and dense, but the gist of it is that there are some anomalies allowed by the original SQL definitions that are undesirable, and we might define a new isolation level that gives us most, but not all, of the benefits of Serializability, while still being relatively efficient.

In Snapshot Isolation, every transaction operates on an isolated snapshot of the committed data, taken at any time prior to the transaction’s first read. The transaction can freely modify its snapshot, and those values are visible within the transaction, but are not visible to other transactions unless the transaction commits.

A transaction T1 may commit if the data it plans to write has not been written by any other transaction which committed after T1’s initial snapshot was taken. If a committed transaction conflicts with T1’s writes, T1 must abort. This is called First-committer-wins, and prevents transactions from stepping on each other’s updates without seeing them. This is the “lost update” anomaly: P4.

P4: r1(x) … w2(x) … w1(x) … c1

Like Repeatable Read, Snapshot Isolation prohibits P0, P1, and P2, but allows some types of Phantom anomalies (P3). It is therefore weaker than Serializability, and strictly stronger than Read Committed. However, it differs from Repeatable Read in two respects:

- Repeatable Read allows an anomaly called A3–a type of Phantom, which SI prevents.

- Snapshot isolation allows an anomaly called A5B, called Write Skew, which RR prevents.

A3 occurs when T1 reads some predicate P, T2 modifies that predicate set and commits, and T1 subsequently reads that predicate set again and commits.

A3: r1(P) … w2(y in P) … c2 … r1(P) … c1.

Note that A3 is a specific case of P3: it only applies if both transactions commit, whereas P3 includes all cases where a predicate set is read and modified by another transaction. Snapshot Isolation precludes A3 (but still allows some other anomalies in P3) because T1 always reads the data from its snapshot. So with respect to this particular anomaly, Snapshot Isolation is stronger than Repeatable Read.

On the other hand, SI allows A5B, or Write Skew. Write Skew occurs when two transactions read two distinct values, then write on top of the other transaction’s read. Formally:

A5B: r1(x) … r2(y) … w1(y) … w2(x) … (c1 and c2)

A5B is prevented by Repeatable Read, but allowed by Snapshot Isolation since the write sets of both transactions do not overlap. We also know of one additional anomaly allowed by Snapshot Isolation, in which a read-only transaction can sneak in between two transactions which legally commit out of order because their write sets are disjoint.

A6: r2(x) … w1(y) … c1 … r3(x) … r3(y) … c3 … w2(x) … c2

In light of this, we should revisit Galera’s claim that “SNAPSHOT-ISOLATION level occurs between REPEATABLE-READ and SERIALIZABLE.” This is not quite correct: Berenson and Adya are clear that SNAPSHOT ISOLATION lies between Read Committed and Serializable, but is neither a superset nor subset of Repeatable Read.

Given Snapshot Isolation allows these anomalies, a natural question to ask is whether we can prevent the anomalies, obtaining full serializability, by restricting the classes of transaction that can run on the system. It turns out the answer is yes: we can force the transactions to conflict by promoting some reads to writes (forcing the write set to intersect and preventing commit), or by analyzing dependency cycles in transactions. These techniques allow us to turn a Snapshot Isolation system into a Serializable one. This is how Postgresql’s Serializable isolation level is implemented–on top of an underlying Snapshot Isolation system.

So: we’ve learned about the ANSI SQL isolation levels (RU, RC, RR, and 1SR), and seen that Snapshot Isolation fits in alongside Repeatable Read, and below Serializability. It prevents Dirty Writes, Dirty Reads, and Lost Updates, and some types of Phantoms. However, it still allows an anomaly called Write Skew, so not all transactions in an SI system are guaranteed to serialize correctly. In order to verify Galera’s claims of Snapshot Isolation, we’ll have to design a test that fits within those guarantees.

Designing a test

Jepsen has a linearizability checker in Knossos, but that won’t work here–Snapshot Isolation, and even Serializability, don’t require that operations take place now. They simply have to take place atomically at some point in the history–maybe in the past or future. We need a different kind of checker!

Imagine a system of two bank accounts, each with a balance of $10.

create table if not exists accounts

(id int not null primary key,

balance bigint not null);

INSERT INTO accounts ( id, balance ) VALUES ( 0, 10 );

INSERT INTO accounts ( id, balance ) VALUES ( 1, 10 );

Then we’ll generate and apply transactions that transfer random amounts of money from one account to the other, so long as no account goes negative:

SET SESSION TRANSACTION ISOLATION LEVEL SERIALIZABLE

set autocommit=0

select * from accounts where id = 0

select * from accounts where id = 1

UPDATE accounts SET balance = 8 WHERE id = 0

UPDATE accounts SET balance = 12 WHERE id = 1

COMMIT

Because these transactions write every record they read, they must be serializable under Snapshot Isolation. This is not the same as Galera offering serializability for all transactions–we’re just asserting that these particular transactions appear to take place atomically. By serializable, remember, we mean any history which is equivalent to the transactions executing in some sequential order. It’s OK for operations from two transactions to interleave, so long as their outcomes are the same as if T1 had executed by itself, then T2 had executed after.

Claim: Given a set of transactions, each beginning with a read and writing every value they read, a Snapshot Isolation system must always produce serializable histories.

Proof by contradiction: assume these transactions may not always serialize. Then there must exist some possible history in which one transaction T1 appears to execute interleaved with another T2.

Lemma 1: Since the start time for a transaction must precede its first read, and the first operation in every transaction is a read, every snapshot’s start time must occur before any of its operations. Similarly, each transaction’s commit time must occur after its last operation. Therefore, the [start-time, commit-time] interval for a transaction covers all its operations.

Without loss of generality, assume T1 starts before T2 starts.

- Case 1: T1 commits before T2’s start time. Operations from T1 and T2 cannot interleave, by Lemma 1, because their intervals do not overlap.

- Case 2: T1 and T2 operate on disjoint sets of accounts. They serialize trivially.

- Case 3: T1 and T2 operate on intersecting sets of accounts, and T1 commits before T2 commits. Then T1 wrote data that T2 also wrote, and committed in T2’s interval, which violates First-committer-wins. T2 must abort.

- Case 4: T1 and T2 operate on intersecting sets of accounts, and T1 commits after T2 commits. Then T2 wrote data that T1 also wrote, and committed in T1’s interval, which violates First-committer-wins. T1 must abort.

Cases 1 and 2 contradict our assumption that T1 and T2 do not serialize. Cases 3 and 4 contradict the Snapshot Isolation invariants. Therefore, if the system provide Snapshot Isolation, T1 and T2 must serialize. Since every pair of transactions must serialize, the total history of transactions must also comprise a serializable history. ∎

Note that we’ve restricted ourselves to transactions that write every value they read in order to avoid phenomena A5B and A6. If we had transactions with intersecting read sets and disjoint writes sets, A5B and A6 might occur, and nonserializable histories could result!

Now introduce read-only transactions, listing all balances:

SET SESSION TRANSACTION ISOLATION LEVEL SERIALIZABLE

set autocommit=0

select * from accounts

COMMIT

Read-only transactions trivially serialize with one another. Do they serialize with respect to transfer transactions? The answer is yes: since every read-only transaction sees only committed data in a Snapshot Isolation system, and commits no data itself, it must appear to take place atomically at some time between other transactions.

So the set of all transfer and read transactions must serialize. This gives us two important invariants:

- Since each transfer conserves money, the total amount of money in the system remains constant.

- Since read transactions serialize and read every balance, every read should see the same total.

With invariant 2, we can write a function to verify that every read sees the correct total balance. Then we generate a mix of randomized transfer and read operations, and apply them to our Galera cluster.

Results

At low levels of concurrency–say five clients, performing about one op per second, things are fine.

{:valid? true,

:perf ...

:bank {:valid? true, :bad-reads []}}

But increase the probability of conflicting transactions by running, say, 20 clients pushing an aggregate ~150 transactions/sec for about a minute, and things go terribly, terribly wrong.

INFO jepsen.core - Analysis invalid! (ノಥ益ಥ)ノ ┻━┻

{:valid? false,

:perf ...

:bank

{:valid? false,

:bad-reads

[{:type :wrong-total,

:expected 20,

:found 18,

:op {:value [6 12], :time 1717930325, :process 15, :type :ok, :f :read}}

{:type :wrong-total,

:expected 20,

:found 16,

:op {:value [2 14], :time 3253699251, :process 13, :type :ok, :f :read}}

{:type :wrong-total,

:expected 20,

:found 17,

:op {:value [8 9], :time 5110345929, :process 17, :type :ok, :f :read}}

...

Every transaction should have seen a total balance of 20–but instead we read different values, like 18, 16, or 17. This means our read transactions don’t see a consistent snapshot of the database. They actually see intermediate results from transfer transactions.

These inconsistent reads aren’t just limited to read-only transactions–transfers can see them too. Here’s an excerpt from the query log, showing a transfer transaction which saw an inconsistent snapshot of the world.

66 Connect jepsen@192.168.122.1 as anonymous on jepsen

66 Query show variables like 'max_allowed_packet'

66 Query SELECT @@tx_isolation

66 Query SET SESSION TRANSACTION ISOLATION LEVEL SERIALIZABLE

66 Query set autocommit=0

66 Query select * from accounts where id = 1

66 Query select * from accounts where id = 0

66 Query UPDATE accounts SET balance = 8 WHERE id = 1

66 Query UPDATE accounts SET balance = 9 WHERE id = 0

66 Query COMMIT

66 Query ROLLBACK

66 Query set autocommit=1

66 Query SET SESSION TRANSACTION ISOLATION LEVEL REPEATABLE READ

66 Quit

8 + 9 is only 17: the client read an inconsistent snapshot of the two accounts, tried to move some money from one to the other, resulting in new balances 8 and 9, and tried to write those values back. Luckily this transaction failed to commit: it rolled back–as did every inconsistent transfer transaction in this test case. In the ticket I filed for this issue, I initially believed the problem might be limited to read-only transactions–not a great result, but not as catastrophic as permanent data corruption.

Unfortunately, not all inconsistent transfer transactions correctly abort. Some can commit, permanently creating or destroying money. In this run, for instance, an inconsistent read in a transfer transaction fabricates $2 out of thin air:

...

{:type :wrong-total,

:expected 20,

:found 22,

:op {:value [10 12], :time 200102098751, :process 12, :type :ok, :f :read}}

{:type :wrong-total,

:expected 20,

:found 22,

:op {:value [6 16], :time 200109803013, :process 7, :type :ok, :f :read}}

{:type :wrong-total,

:expected 20,

:found 22,

:op {:value [10 12], :time 200113103237, :process 6, :type :ok, :f :read}}

{:type :wrong-total,

:expected 20,

:found 22,

:op {:value [6 16], :time 200128852818, :process 3, :type :ok, :f :read}}]}}

The transfer transactions should have kept the total amount of money at $20, but by the end of the test the totals all sum to $22. And in this run, 25% of the funds in the system mysteriously vanish. These results remain stable after all other transactions have ended–they are not a concurrency anomaly.

...

{:type :wrong-total,

:expected 20,

:found 15,

:op {:value [15 0], :time 130519175659, :process 14, :type :ok, :f :read}}]}}

In summary, Galera does not provide Snapshot Isolation. A transaction does not operate on an isolated snapshot of the world; other transactions may modify the data it’s reading.

Dirty reads?

So reads can see data that’s being modified by a transaction. Is this P1: Dirty Read? We saw this behavior in MongoDB, where a read could see invalid data from a transaction that never committed.

To measure this, we’ll design a similar test. This time, each operation writes a unique value to every row in a single transaction, allowing us to identify precisely which transaction was responsible for the values a read sees.

If Snapshot Isolation held, every read would see the same number in every row. We know that Galera allows inconsistent reads, so we expect to see a mixture of different numbers in each row. But we can go one step further, and distinguish between values that were written by successful transactions, and those written by failed transactions. If we see data from transactions that never committed, that would be P1: Dirty Reads.

Luckily, the test results suggest that Galera does not allow Dirty Reads.

INFO jepsen.core - Everything looks good! ヽ(‘ー`)ノ

{:valid? true,

:perf ...

:dirty-reads

{:valid? true,

:inconsistent-reads

[[21462 21466 21466 21466]

[21462 21466 21466 21466]

...

[34449 34449 34460 34460]

[34460 34460 34463 34463]],

:dirty-reads []}}

We have plenty of inconsistent reads here–writes in this test always set every row to the same value, but we see different values in reads. However, none of the values we read came from a transaction which did not (eventually) commit. So: there are no dirty reads–at least, not in this test. There might be other conditions that allow Galera to expose uncommitted data, but I haven’t found them yet. This suggests that Galera could support Read Committed.

Given these results, I suspect the inconsistent reads we’re seeing could be A5A: Read Skew. In a Read Skew anomaly, a transaction reads x, but before it can read y, a second transactions sneaks in and updates x and y together. Because both transactions commit this isn’t a Dirty Read, but it has similar effects: two records which should only change together can be changing independently.

A5A: r1(x) … w2(x) … w2(y) … c2 … r1(y) … (c1 or a1).

Snapshot Isolation prohibits A5A by taking isolated snapshots for reads. This anomaly is supposed to be impossible in Galera–but, as we’ve seen, snapshots aren’t correctly isolated.

The Galera team responded by explaining that Galera does not honor first-committer-wins for performance reasons. No first-committer-wins, no snapshot isolation. No snapshot isolation, well… I’m not sure exactly what Galera does guarantee, but it’s not what it says on the tin.

Recommendations

Galera is easy to install–I spent weeks trying to set up MySQL Cluster to no avail, and got Galera Cluster running in a matter of hours. It offers support contracts, reasonable documentation, and doesn’t require you to navigate the choose-your-own-adventure of Postgres replication strategies. With homogenous nodes, simple configuration, and the wealth of MySQL tooling available, it seems like a solid choice from an operational perspective.

Unfortunately, even in totally healthy clusters, with no node failures or network failures, Galera Cluster does not satisfy its claims of Snapshot Isolation. At moderate concurrency, you should expect to read inconsistent state from other transactions, and to be able to write that state back to the database. Designing your applications to avoid write skew is insufficient: even if writes completely cover the read set, SI fails to hold.

Since Galera appears to forbid reading uncommitted data, I suspect (but cannot show) Galera supports Read Committed isolation.

The probability of data corruption scales with client concurrency, with the duration of transactions, and with the increased probability of intersecting working sets. Placing nodes further apart (for example, on the other side of the planet) will dramatically increase commit times, raising the probability of consistency errors.

I’m not aware of a workaround for these issues. I assumed materializing transaction conflicts or promoting reads to writes would be sufficient, but those techniques failed. If you’re a Galera user with a support contract, you can try asking them to address the issue. You might adopt a different database–though since Galera is the first distributed SQL system I’ve analyzed, and FoundationDB disapparated, I’m not sure what to recommend yet. Or you may decide that your transactions don’t overlap often enough, or aren’t high-value enough, to be a serious problem. Not all consistency errors are critical!

Galera has indicated that they may provide actual Snapshot Isolation, and possibly full Serializability, in future releases. So… stay tuned!

This work is a part of my research at Stripe, where we’re trying to raise the industry’s standards for distributed systems safety. My thanks to sjaakola from the Galera team for his timely response to the bug report, and to Caitie McCaffrey, Peter Bailis, Coda Hale, Jason Pellerin, Camille Fournier, Jorge Ortiz, and Peter Alvaro for their feedback.

I think you proof might be missing a case; couldn’t transactions start at the exact same time? (Fwiw, I don’t think it changes the conclusion at all, just wanted to be super-pedantic.)