Last week Anthropic released a report on disempowerment patterns in real-world AI usage which finds that roughly one in 1,000 to one in 10,000 conversations with their LLM, Claude, fundamentally compromises the user’s beliefs, values, or actions. They note that the prevalence of moderate to severe “disempowerment” is increasing over time, and conclude that the problem of LLMs distorting a user’s sense of reality is likely unfixable so long as users keep holding them wrong:

> However, model-side interventions are unlikely to fully address the problem. User education is an important complement to help people recognize when they’re ceding judgment to an AI, and to understand the patterns that make that more likely to occur.

In unrelated news, some folks have asked me about Prothean Systems’ new paper. You might remember Prothean from October, when they claimed to have passed all 400 tests on ARC-AGI-2—a benchmark that only had 120 tasks. Unsurprisingly, Prothean has not claimed their prize money, and seems to have abandoned claims about ARC-AGI-2. They now claim to have solved the Navier-Stokes existence and smoothness problem.

> The Clay Mathematics Institute offers a $1,000,000 Millennium Prize for proving either global existence and smoothness of solutions, or demonstrating finite-time blow-up for specific initial conditions.
>
> This system achieves both.

At the risk of reifying XKCD 2501, this is a deeply silly answer to an either-or question. You cannot claim that all conditions have a smooth solution, and also that there is a condition for which no smooth solution exists. This is like being asked to figure out whether all apples are green, or at least one red one exists, and declaring that you’ve done both. Prothean Systems hasn’t just failed to solve the problem—they’ve failed to understand the question.
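For the formally inclined, the contradiction fits in a few lines of Lean 4. This is a deliberately simplified sketch: `P x` stands in for "initial condition `x` has a globally smooth solution", which is my shorthand and not the precise Clay problem statement.

```lean
-- P x : "initial condition x has a globally smooth solution" (simplified stand-in).
-- No predicate can hold for every x and also fail for some x.
theorem not_both {α : Type} (P : α → Prop) :
    ¬ ((∀ x, P x) ∧ (∃ x, ¬ P x)) :=
  fun ⟨hall, x, hnx⟩ => hnx (hall x)
```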

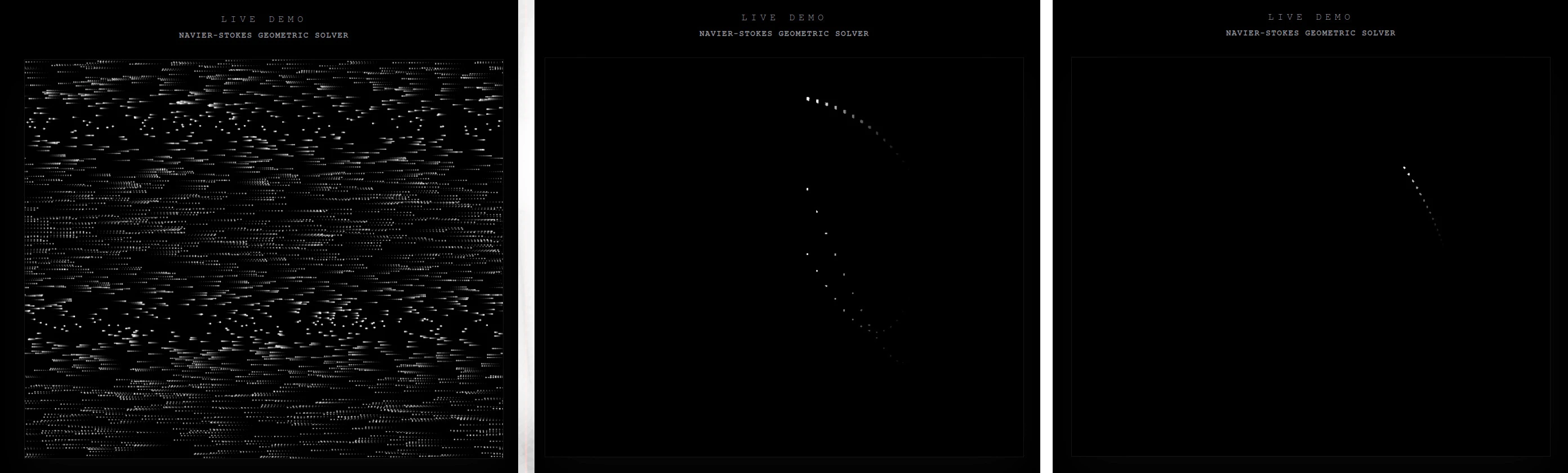

Prothean goes on to claim that the “demonstration at BeProthean.org provides immediate, verifiable evidence” of their proof. This too is obviously false. As the Clay paper explains, the velocity field must have zero divergence, which is a fancy way of saying that the fluid is incompressible; it can’t be squeezed down or spread out. One of the demo’s “solutions” squeezes everything down to a single point, and another shoves particles away from the center. Both clearly violate Navier-Stokes.
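To make the incompressibility point concrete, here is a small sketch that estimates the divergence of a 2D velocity field with central differences. The three fields are my own toy examples, not code from the demo: `sink` pulls everything toward the origin, `source` pushes everything away, and `vortex` is a rigid rotation. Analytically their divergences are -2, +2, and 0.

```javascript
// Central-difference estimate of the divergence of a 2D field u(x, y) -> [ux, uy].
function divergence(u, x, y, h = 1e-4) {
  const duxDx = (u(x + h, y)[0] - u(x - h, y)[0]) / (2 * h);
  const duyDy = (u(x, y + h)[1] - u(x, y - h)[1]) / (2 * h);
  return duxDx + duyDy;
}

// Illustrative fields (assumptions for this sketch, not the demo's code).
const sink = (x, y) => [-x, -y];   // squeezes everything toward the origin
const source = (x, y) => [x, y];   // shoves everything away from the center
const vortex = (x, y) => [-y, x];  // rigid rotation about the origin

console.log('sink:  ', divergence(sink, 0.3, 0.7));
console.log('source:', divergence(source, 0.3, 0.7));
console.log('vortex:', divergence(vortex, 0.3, 0.7));
```

Only the rotation passes the zero-divergence check. Passing it is necessary for an incompressible flow but nowhere near sufficient to satisfy Navier-Stokes.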

My background is in physics and software engineering, and I’ve written several numeric solvers for various physical systems. Prothean’s demo (initFluidSimulator) is a simple Euler’s method solver with four flavors of externally-applied acceleration, plus a linear drag term to compensate for all the energy they’re dumping into the system. There’s nothing remotely Navier-Stokes-shaped there. It’s not even a fluid: there are no local interactions, just free particles.
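For readers who haven't written one, this is roughly the shape of solver I'm describing. It is a hypothetical sketch, not Prothean's code: the names `eulerStep`, `accel`, and `drag` are mine, and the constants are arbitrary.

```javascript
// Hypothetical sketch, NOT Prothean's code: one explicit Euler step for a
// single free particle under an external acceleration and linear drag.
function eulerStep(p, accel, drag, dt) {
  const [ax, ay] = accel(p.x, p.y); // external forcing depends only on position
  return {
    x: p.x + p.vx * dt,             // position advances with the old velocity
    y: p.y + p.vy * dt,
    vx: p.vx + (ax - drag * p.vx) * dt,
    vy: p.vy + (ay - drag * p.vy) * dt,
  };
}

// One possible "flavor" of forcing: pull every particle toward the origin.
const inward = (x, y) => [-x, -y];

let particles = [
  { x: 1, y: 0, vx: 0, vy: 1 },
  { x: -2, y: 1, vx: 0.5, vy: 0 },
];
particles = particles.map((p) => eulerStep(p, inward, 0.5, 0.1));
console.log(particles);
```

Each particle is updated from its own state alone. There is no pressure term, no viscosity between neighbors, and no coupling of any kind, which is why I say this isn't a fluid.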

The paper talks about a novel “multi-tier adaptive compression architecture” which “operates on semantic structure rather than raw binary patterns”, enabling “compression ratios exceeding 800:1”. How can we tell? Because “the interactive demonstration platform at BeProthean.org provides hands-on capability verification for technical evaluation”.

Prothean’s compression demo wasn’t real in October, and it’s not real today. This time it’s just bog-standard DEFLATE, the same algorithm used in .zip files. There are some fake log messages to make it look like it’s doing something fancy when it’s not.

```javascript
document.getElementById('compress-status').textContent = `Identifying Global Knowledge Graph Patterns...`;

const stream = file.stream().pipeThrough(new CompressionStream('deflate-raw'));
```
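If you want to see what plain DEFLATE does on its own, here is a minimal Node.js sketch using the standard `zlib` module rather than the browser's `CompressionStream`. The helper `deflateRatio` and the sample inputs are mine. The point is that the ratio is a property of how redundant the input is, not of any semantic magic.

```javascript
const zlib = require('zlib');
const crypto = require('crypto');

// Ratio of original size to raw-DEFLATE-compressed size.
function deflateRatio(bytes) {
  const compressed = zlib.deflateRawSync(bytes);
  return bytes.length / compressed.length;
}

// Illustrative inputs: highly redundant text versus incompressible noise.
const repetitive = Buffer.from('the same sentence, over and over. '.repeat(2000));
const random = crypto.randomBytes(repetitive.length);

console.log('repetitive text:', deflateRatio(repetitive).toFixed(1) + ':1');
console.log('random bytes:   ', deflateRatio(random).toFixed(2) + ':1');
```

Redundant input compresses enormously and random input doesn't compress at all, so a headline ratio means little unless you know what was fed in.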

There’s a fake “Predictive vehicle optimization” tool that has you enter a VIN, then makes up imaginary “expected power gain” and “efficiency improvement” numbers. These are based purely on a hash of the VIN characters, and have nothing to do with any kind of car. Prothean is full of false claims like this, and somehow they’re offering organizational licenses for it.
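Here is a sketch of how little it takes to build something like this. To be clear, this is a hypothetical reconstruction of the general technique: the hash function, field names, and numeric ranges are my assumptions, not code copied from Prothean's site.

```javascript
// Hypothetical illustration only: hash and ranges are assumptions for this sketch.
function hashString(s) {
  let h = 0;
  for (let i = 0; i < s.length; i++) {
    h = (h * 31 + s.charCodeAt(i)) % 100000;
  }
  return h;
}

// Turns any string at all into plausible-looking "optimization" numbers.
function fakeVehicleReport(vin) {
  const h = hashString(vin);
  return {
    expectedPowerGainPct: 5 + (h % 150) / 10,                       // 5.0 to 19.9
    efficiencyImprovementPct: 2 + (Math.floor(h / 150) % 80) / 10,  // 2.0 to 9.9
  };
}

console.log(fakeVehicleReport('1HGCM82633A004352'));
console.log(fakeVehicleReport('definitely not a car'));
```

The function never decodes a manufacturer, model, or year. Any string produces confident-looking figures, and the same string always produces the same ones, which makes them look like real lookups.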

It’s not just Prothean. I feel like I’ve been trudging through a wave of LLM nonsense recently. In the last two weeks alone, I’ve watched software engineers use Claude to suggest fatuous changes to my software, like an “improvement” to an error message which deleted key guidance. Contractors proffering LLM-slop descriptions of appliances. Claude-generated documents which made bonkers claims, like saying a JVM program I wrote provided “faster iteration” thanks to “no JVM startup”. Cold emails asking me to analyze dreamlike, vaguely-described software systems; one sender, in our introductory call, couldn’t even begin to explain what they’d built or what it was for. A scammer who used an LLM to pretend to be an engineer wanting to help with my research, then turned out to be seeking investors in their video chatbot project.

When people or companies intentionally make false claims about the work they’re doing or the products they’re selling, we call it fraud. What is it when one overlooks LLM mistakes? What do we call it when a person sincerely believes the lies an LLM has told them, and repeats those lies to others? Dedicates months of their life to a transformer model’s fever dream?

Anthropic’s paper argues reality distortion is rare in software domains, but I’m not so sure.

This stuff keeps me up at night. I wonder about my fellow engineers who work at Anthropic, at OpenAI, on Google’s Gemini. I wonder if they see as much slop as I do. How many of their friends and colleagues have been sucked into LLM rabbitholes. I wonder if they too lie awake at three AM, staring at the ceiling, wondering about the future and their role in making it.

I love the sly ChatGPTese of “Prothean Systems hasn’t just failed to solve the problem—they’ve failed to understand the question.”