Riemann 0.2.0

Riemann 0.2.0 is ready. There’s so much left that I want to build, but this release includes a ton of changes that should improve usability for everyone, and I’m excited to announce its release.

Version 0.2.0 is a fairly major improvement in Riemann’s performance and capabilities. Many things have been solidified, expanded, or tuned, and there are a few completely new ideas as well. There are a few minor API changes, mostly to internal structure–but a few streams are involved as well. Most of the affected functions will continue to work normally, but will log a deprecation notice when used.

I dedicated the past six months to working on Riemann full-time. I was fortunate to receive individual donations as well as formal contracts with Blue Mountain Capital, SevenScale, and Iovation during that time. That money gave me months of runway to help make these improvements–but even more valuable was the feedback I received from production users, big and small. I’ve used your complaints, frustrations, and ideas to plan Riemann’s roadmap, and I hope this release reflects that.

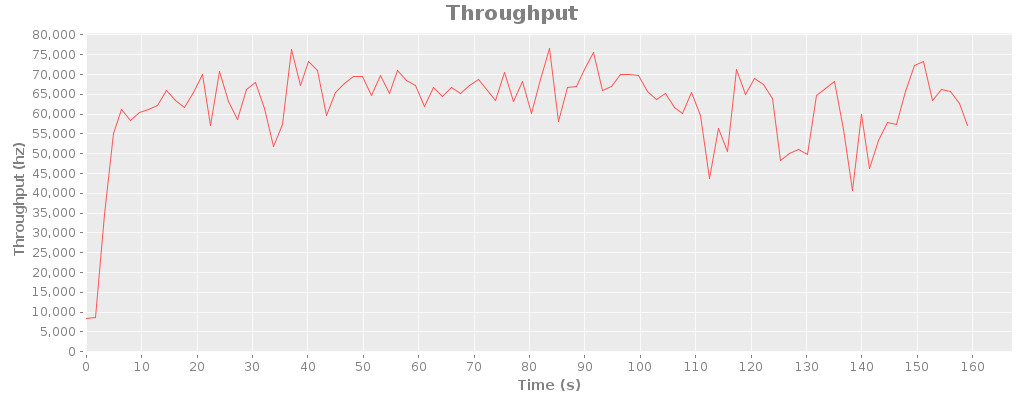

65K messages/sec

The Netty redesign of riemann-java-client made it possible to expose an end-to-end asynchronous API for writes, which dramatically improves throughput for messages with a small number of events. By introducing a small queue of pipelined write promises, riemann-clojure-client can now push 65K events per second, as individual messages, over a single TCP socket. That works out to about 120 mbps of sustained traffic.

I’m really happy about the bulk throughput too: three threads using a single socket, sending messages of 100 events each, can push around 185-200K events/sec, at over 200 mbps. That throughput took 10 sockets and hundreds of threads to achieve in earlier tests.

Blathering about Riemann consistency

tl;dr Riemann is a monitoring system, so it emphasizes liveness over safety.

Riemann is aimed at high-throughput (millions of events/sec/node), partial-harvest event processing, where it is acceptable to trade completeness for throughput at low latencies. For instance, it’s probably fine to drop half of your request latency events on the floor, if you’re calculating a lossy histogram with sampling anyway. It’s also typically acceptable to have nondeterministic behavior with respect to time windows: if one node’s clock is skewed, it’s better to process its events “soonish” rather than waiting an unbounded amount of time for it to check in.

There is no synchronization or relationship between events. Events are immutable and have a total order, even though a given server or client may only have a fraction of the relevant events for a system. The events are, in a sense, the transaction log–except that the semantics of those transactions depend on the stream configuration.

Reaching 200K events/sec

I’ve been doing a lot of performance tuning in Riemann recently, especially in the clients–but I’d like to share a particularly spectacular improvement from yesterday.

The Riemann protocol

Riemann’s TCP protocol is really simple. Send a Msg to the server, receive a response Msg. Messages might include some new events for the server, or a query; and a response might include a boolean acknowledgement or a list of events matching the query. The protocol is ordered; messages on a connection are processed in-order and responses sent in-order. Each Message is serialized using Protocol Buffers. To figure out how large each message is, you read a four-byte length header, then read length bytes, and parse that as a Msg.
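The framing described above can be sketched in a few lines of Python. This is only an illustration, not the client's actual code: the function names are made up, the payload is treated as opaque bytes rather than a real Protocol Buffers Msg, and the big-endian byte order of the length header is an assumption.

```python
import struct

# ">I" is a big-endian unsigned 32-bit integer. The byte order is an
# assumption made for this sketch.
HEADER = struct.Struct(">I")


def encode_frame(payload: bytes) -> bytes:
    """Prefix an already-serialized message with its four-byte length."""
    return HEADER.pack(len(payload)) + payload


def decode_frames(buffer: bytes):
    """Split a byte buffer into complete payloads.

    Returns (payloads, remainder), where remainder holds the bytes of a
    message that has not fully arrived yet.
    """
    payloads = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        (length,) = HEADER.unpack_from(buffer, offset)
        start = offset + HEADER.size
        if len(buffer) - start < length:
            break  # partial message: wait for more bytes
        payloads.append(buffer[start:start + length])
        offset = start + length
    return payloads, buffer[offset:]
```

A reader on a real socket would keep appending received bytes to the remainder and call `decode_frames` again, parsing each returned payload as a Msg.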

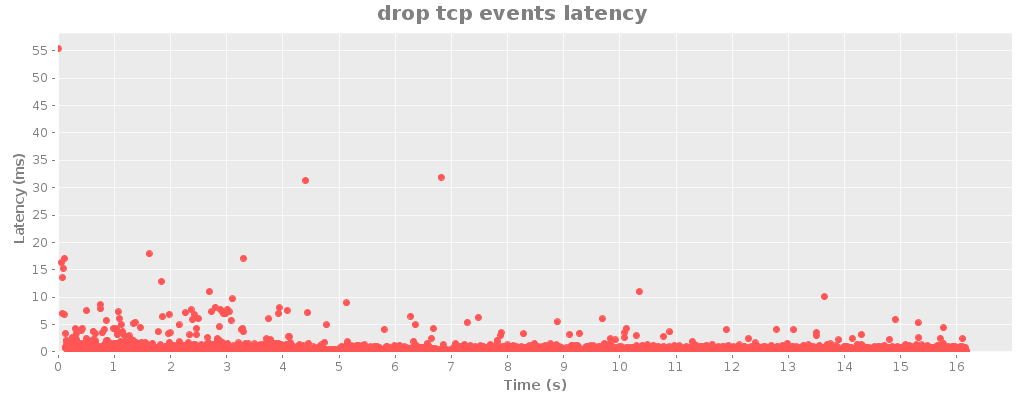

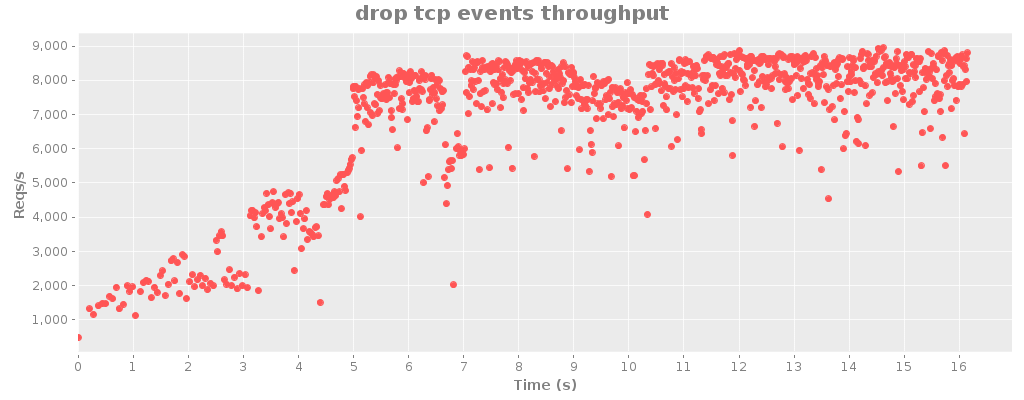

Pipelining requests

I’ve been putting more work into riemann-java-client recently, since it’s definitely the bottleneck in performance testing Riemann itself. The existing RiemannTcpClient and RiemannRetryingTcpClient were threadsafe, but almost fully mutexed; using one essentially serialized all threads behind the client itself. For write-heavy workloads, I wanted to do better.

There are two logical optimizations I can make, in addition to choosing careful data structures, mucking with socket options, etc. The first is to bundle multiple events into a single Message, which the API supports. However, your code may not be structured in a way to efficiently bundle events, so where higher latencies are OK, the client can maintain a buffer of outbound events and flush it regularly.
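As a rough sketch of the first optimization, a client-side buffer might flush when it is full or when its oldest event has waited too long. The class name, parameters, and thresholds below are hypothetical and do not come from riemann-java-client; `send` stands in for whatever actually writes a Message of many events.

```python
import time


class EventBuffer:
    """Collects outbound events and hands them to `send` in batches."""

    def __init__(self, send, max_events=100, max_delay=0.1, clock=time.monotonic):
        self.send = send              # callable that takes a list of events
        self.max_events = max_events  # flush once this many events are queued
        self.max_delay = max_delay    # ...or once the oldest is this old (seconds)
        self.clock = clock
        self.events = []
        self.oldest = None

    def add(self, event):
        if not self.events:
            self.oldest = self.clock()  # start the latency budget
        self.events.append(event)
        self.flush_if_due()

    def flush_if_due(self):
        """Flush when the batch is full or has waited long enough."""
        if not self.events:
            return
        full = len(self.events) >= self.max_events
        stale = self.clock() - self.oldest >= self.max_delay
        if full or stale:
            self.flush()

    def flush(self):
        if self.events:
            batch, self.events = self.events, []
            self.send(batch)
```

In a real client a timer thread would call `flush_if_due` periodically, so a trickle of events never waits longer than `max_delay`. This sketch is single-threaded and would need a lock before being shared between threads.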

The second optimization is to take advantage of request pipelining. Riemann’s protocol is simple and synchronous: you send a Message over a TCP connection, and receive exactly one Message in response. The existing clients, however, forced you to wait n milliseconds for each message to cross the network, be processed by Riemann, and be acknowledged before you could send the next. We can do better by pipelining requests: sending new requests without waiting for the previous responses, and matching up received messages with their corresponding requests later.
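Because responses come back in the order requests were sent, matching them up only needs a FIFO queue of outstanding promises. The sketch below shows that bookkeeping with no real socket; `PipelinedConnection`, `write`, and `on_response` are illustrative names, not the Java client's API.

```python
from collections import deque
from concurrent.futures import Future


class PipelinedConnection:
    """Tracks in-flight requests so writes need not wait for replies."""

    def __init__(self, write):
        self.write = write      # callable that puts a message on the wire
        self.pending = deque()  # promises for requests awaiting a response

    def send(self, message) -> Future:
        promise = Future()
        # Enqueue before writing, so a very fast response still finds
        # its promise at the head of the queue.
        self.pending.append(promise)
        self.write(message)
        return promise

    def on_response(self, response):
        # The protocol is ordered: the oldest outstanding request is
        # the one this response belongs to.
        self.pending.popleft().set_result(response)
```

A caller can fire off many `send` calls back to back and only block on `promise.result()` when it actually needs the acknowledgement. A production version would also bound the queue and fail every pending promise if the connection drops.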

Riemann: longs and doubles

In the just-released riemann-java-client 0.0.6, riemann-clojure-client 0.0.6, riemann-ruby-client 0.0.8, and the upcoming riemann 0.1.4 (presently in master), Riemann will support two new types of metrics for events.

https://github.com/aphyr/riemann-java-client/blob/master/src/main/proto/riemann/proto.proto#L24

    // signed 64-bit integers (variable-width-encoded)
    optional sint64 metric_sint64 = 13;

    // double-precision IEEE 754 floats
    optional double metric_d = 14;
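To make "variable-width-encoded" concrete: Protocol Buffers stores an sint64 by ZigZag-mapping the signed value to an unsigned one and then writing it as a varint, so small magnitudes of either sign take few bytes. A double is always eight fixed bytes. The helper names below are invented for illustration, and the functions produce only the field's value bytes, not the field tag that precedes them in a real message.

```python
import struct

MASK64 = 0xFFFFFFFFFFFFFFFF


def zigzag64(n: int) -> int:
    """Map a signed 64-bit integer to unsigned: 0, -1, 1, -2 -> 0, 1, 2, 3."""
    return ((n << 1) ^ (n >> 63)) & MASK64


def varint(value: int) -> bytes:
    """Encode an unsigned integer in 7-bit groups, low group first."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_sint64(n: int) -> bytes:
    return varint(zigzag64(n))


def encode_double(x: float) -> bytes:
    """Doubles are fixed-width: eight bytes, little-endian IEEE 754."""
    return struct.pack("<d", x)
```

With this encoding a metric of -1 occupies a single byte, while the most negative 64-bit value needs ten, which is the trade-off behind offering both an integer and a floating-point metric field.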

Riemann 0.1.3

Ready? Grab the tarball or deb from http://aphyr.github.com/riemann/

0.1.3 is a consolidation release, comprising 2812 insertions and 1425 deletions. It includes numerous bugfixes, performance improvements, features–especially integration with third-party tools–and clearer code. This release includes the work of dozens of contributors over the past few months, who pointed out bugs, cleaned up documentation, smoothed over rough spots in the codebase, and added whole new features. I can’t say thank you enough, to everyone who sent me pull requests, talked through designs, or just asked for help. You guys rock!

I also want to say thanks to Boundary, Blue Mountain Capital, Librato, and Netflix for contributing code, time, money, and design discussions to this release. You’ve done me a great kindness.

The Future of Riemann

For the last three years Riemann (and its predecessors) has been a side project: I sketched designs, wrote code, tested features, and supported the community through nights and weekends. I was lucky to have supportive employers who allowed me to write new features for Riemann as we needed them. And yet, I’ve fallen behind.

Dozens of people have asked for sensible, achievable Riemann improvements that would help them monitor their systems, and I have a long list of my own. In the next year or two I’d like to build:

- Protocol enhancements: high-resolution times, groups, pubsub, UDP drop-rate estimation

- Expanding the websockets dashboard

- Maintain index state through restarts

- Expanded documentation

- Configuration reloading

- SQL-backed indexes for faster querying and synchronizing state between multiple Riemann servers

- High-availability Riemann clusters using Zookeeper

- Some kind of historical data store, and a query interface for it

- Improve throughput by an order of magnitude

Eliminating reflection

As a quick follow-up, I managed to squeeze an extra 10% or so out of riemann.server by adding a few type hints.

Riemann Recap: Client Libraries and Performance

I’ve been focusing on Riemann client libraries and optimizations recently, both at Boundary and on my own time.

Boundary uses the JVM extensively, and takes advantage of Coda Hale’s Metrics. For our applications I’ve written a Riemann Java UDP and TCP client, which also includes a Metrics reporter. The Metrics reporter (I’ll be submitting that to metrics-contrib later) will just send periodic events for each of the metrics in a registry, and optionally some VM statistics as well. It can prefix each service, filter with predicates, and has been reporting for two of our production systems for about a week now.

The Java client has been integrated into Riemann itself, replacing the old Aleph client. It’s about on par with the old Aleph client, owing to its use of standard Socket and friends as opposed to Netty. Mårten Gustafson and Edward Ribeiro have been instrumental in getting the Java client up and running, so my sincere thanks go out to both of them.

Riemann 0.1.0

The initial stable release of Riemann 0.1.0 is available for download. This is the culmination of the 0.0.3 development path and 2 months of production use at Showyou.

Is it production ready? I think so. The fundamental stream operators are in place. A comprehensive test suite checks out. Riemann has never crashed. Its performance characteristics should be suitable for a broad range of scales and applications.

There is a possible memory leak, on the order of 1% per day in our production setup. I can’t replicate it under a variety of stress tests. It’s not clear to me whether this is legitimate state information (i.e. an increase in tracked data), GC/malloc implementations being greedy, or an actual memory leak. Profiling and understanding this is my top priority for Riemann. If this happens to you, restarting the daemon every few weeks should not be prohibitive; it takes about five seconds to reload. Should you encounter this issue, please drop me a line with your configuration; it may help me identify the cause.

Riemann: Breaking the 10k barrier

When I designed UState, I had a goal of a thousand state transitions per second. I hit about six hundred on my MacBook Pro, and skirted 1000/s on real hardware. EventMachine is good, but I started to bump up against concurrency limits in MRI’s interpreter lock, my ability to generate and exchange SQL with SQLite, and protobuf parse times. So I set out to write a faster server. I chose Clojure for its expressiveness and powerful model of concurrent state–and more importantly, the JVM, which gets me Netty, a mature virtual machine with a decent thread model, and a wealth of fast libraries for parsing, state, and statistics. That project is called Riemann.

Today, I’m pleased to announce that Riemann crossed the 10,000 event/second mark in production. In fact it’s skirting 11k in my stress tests. (That final drop in throughput is an artifact of the graph system showing partially-complete data.)