It's always DNS's fault

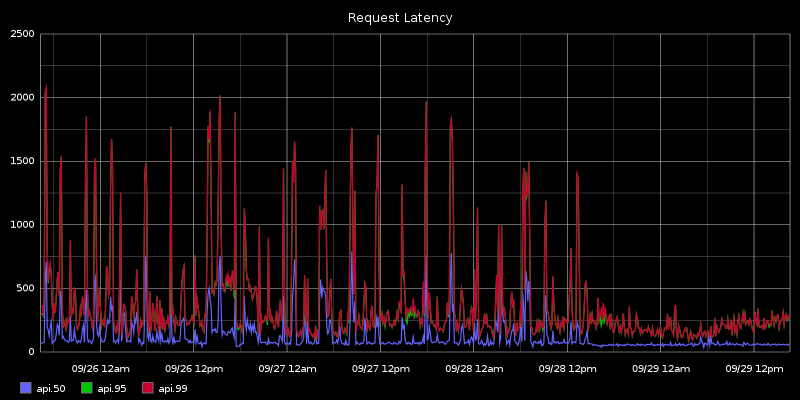

One of the hard-won lessons of the last few weeks has been that inexplicable periodic latency jumps in network services should be met with an investigation into named.

API latency has been wonky the last couple of weeks: for a few hours it will rise to roughly 5 to 10x normal, then drop again. There was nothing in syslog and no connection table issues; ip stats didn’t reveal any TCP/IP layer difficulties; the network was solid; and there was no CPU, memory, or disk contention, nor any obviously correlated load on other hosts. It turns out BIND was getting overwhelmed (we have, er, nontrivial DNS demands), which slowed down local domain resolution. For now I’m just pushing everything out in /etc/hosts, but I will probably drop a local bind9 on every host as a cache.
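If you suspect the same thing on your own hosts, timing name resolution directly is a cheap first check. Here is a minimal sketch in Python, not what we actually ran: the function names `time_lookups` and `summarize` and the hostname in the usage line are made up for illustration, and it goes through the system resolver via `socket.getaddrinfo`, so entries in /etc/hosts or a local cache will mask a slow upstream server.

```python
import socket
import statistics
import time


def time_lookups(hostname, attempts=5, resolver=socket.getaddrinfo):
    """Resolve hostname repeatedly; return each lookup's duration in milliseconds.

    `resolver` is injectable so the timing logic can be exercised without
    touching the network. It defaults to the system resolver.
    """
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            resolver(hostname, None)
        except socket.gaierror:
            # A failed lookup still cost us time, so it is recorded too.
            pass
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize(timings):
    """Collapse a list of millisecond timings into min / median / max."""
    return {
        "min": min(timings),
        "median": statistics.median(timings),
        "max": max(timings),
    }


if __name__ == "__main__":
    # "localhost" is only a placeholder; substitute the internal names
    # your services actually resolve.
    for host in ["localhost"]:
        print(host, summarize(time_lookups(host)))
```

Run it from cron or a loop during a latency spike and compare against a quiet period; a median that climbs along with API latency points at the resolver rather than the application.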

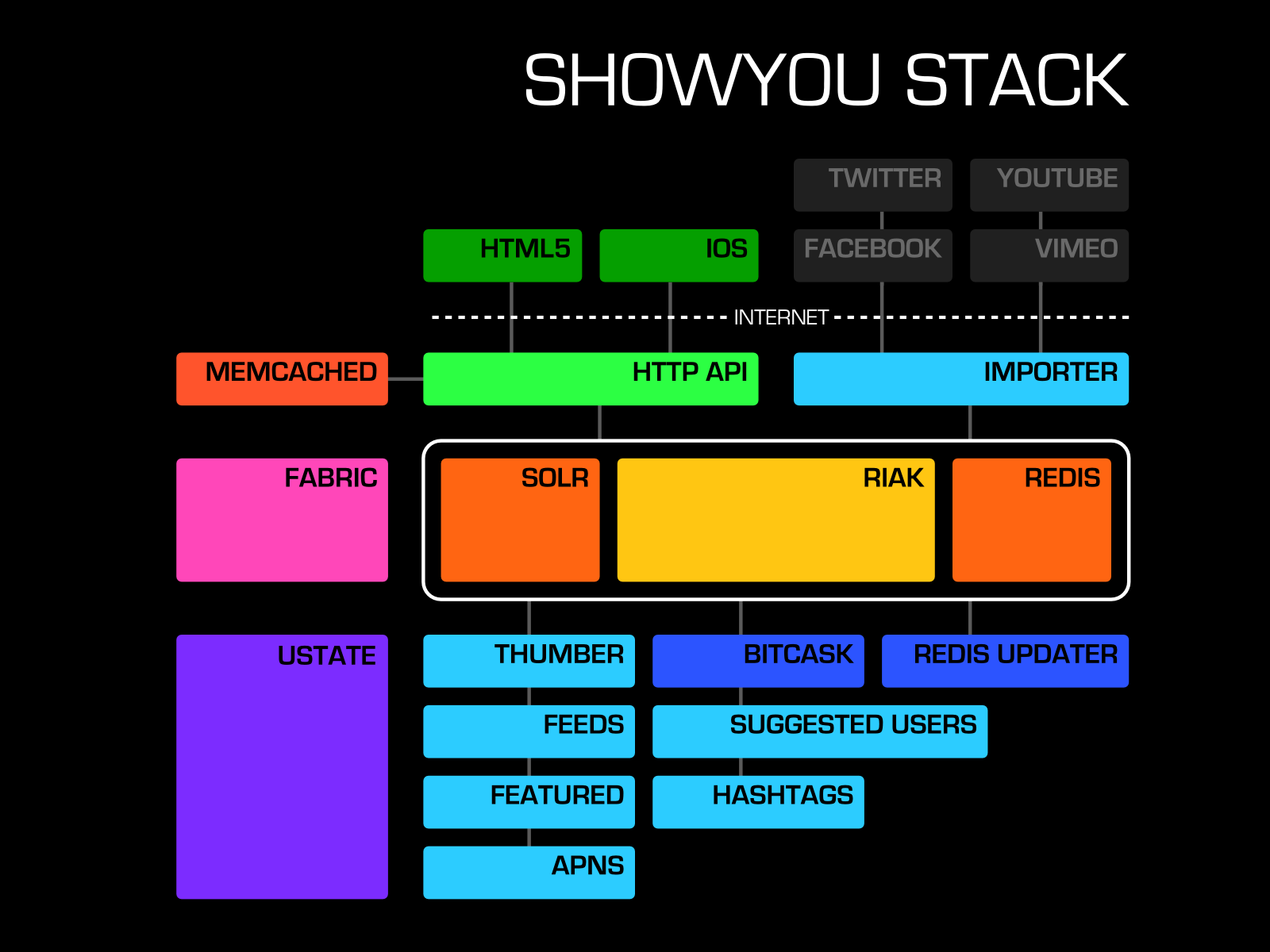

Scaling at Showyou

John Muellerleile, Phil Kulak, and I gave a talk tonight entitled “Scaling at Showyou.”

I gave an overview of the Showyou architecture, including our use of Riak, Solr, and Redis; strategies for robust systems; and our comprehensive monitoring system. You may want to check out: