Previously in Jepsen, we discussed Redis. In this post, we’ll see MongoDB drop a phenomenal amount of data. See also: followup analyses of 2.6.7 and 3.4.0-rc3.

MongoDB is a document-oriented database with a similar distribution design to Redis. In a replica set, there exists a single writable primary node which accepts writes, and asynchronously replicates those writes as an oplog to N secondaries. However, there are a few key differences.

First, Mongo builds in its leader election and replicated state machine. There’s no separate system which tries to observe a replica set in order to make decisions about what it should do. The replica set decides among itself which node should be primary, when to step down, how to replicate, etc. This is operationally simpler and eliminates whole classes of topology problems.

This article is part of Jepsen, a series on network partitions. We’re going to learn about distributed consensus, discuss the CAP theorem’s implications, and demonstrate how different databases behave under partition.

Previously on Jepsen, we explored two-phase commit in Postgres. In this post, we demonstrate Redis losing 56% of writes during a partition.

Redis is a fantastic data structure server, typically deployed as a shared heap. It provides fast access to strings, lists, sets, maps, and other structures with a simple text protocol. Since it runs on a single server, and that server is single-threaded, it offers linearizable consistency by default: all operations happen in a single, well-defined order. There’s also support for basic transactions, which are atomic and isolated from one another.

Because of this easy-to-understand consistency model, many users treat Redis as a message queue, lock service, session store, or even their primary database. Redis running on a single server is a CP system, so it is consistent for these purposes.

Previously on Jepsen, we introduced the problem of network partitions. Here, we demonstrate that a few transactions which “fail” during the start of a partition may have actually succeeded.

Postgresql is a terrific open-source relational database. It offers a variety of consistency guarantees, from read uncommitted to serializable. Because Postgres only accepts writes on a single primary node, we think of it as a CP system in the sense of the CAP theorem. If a partition occurs and you can’t talk to the server, the system is unavailable. Because transactions are ACID, we’re always consistent.

Right?

Riemann 0.2.0 is ready. There’s so much left that I want to build, but this release includes a ton of changes that should improve usability for everyone, and I’m excited to announce its release.

Version 0.2.0 is a fairly major improvement in Riemann’s performance and capabilities. Many things have been solidified, expanded, or tuned, and there are a few completely new ideas as well. There are a few minor API changes, mostly to internal structure–but a few streams are involved as well. Most functions will continue to work normally, but log a deprecation notice when used.

I dedicated the past six months to working on Riemann full-time. I was fortunate to receive individual donations as well as formal contracts with Blue Mountain Capital, SevenScale, and Iovation during that time. That money gave me months of runway to help make these improvements–but even more valuable was the feedback I received from production users, big and small. I’ve used your complaints, frustrations, and ideas to plan Riemann’s roadmap, and I hope this release reflects that.

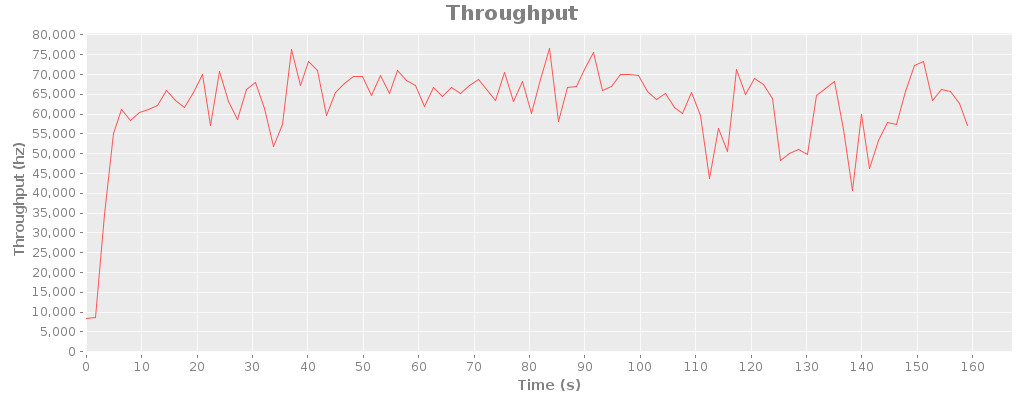

The Netty redesign of riemann-java-client made it possible to expose an end-to-end asynchronous API for writes, which has a dramatic improvement on messages with a small number of events. By introducing a small queue of pipelined write promises, riemann-clojure-client can now push 65K events per second, as individual messages, over a single TCP socket. Works out to about 120 mbps of sustained traffic.

I’m really happy about the bulk throughput too: three threads using a single socket, sending messages of 100 events each, can push around 185-200K events/sec, at over 200 mbps. That throughput took 10 sockets and hundreds of threads to achieve in earlier tests.

In the previous post, I described an approximation of Heroku’s Bamboo routing stack, based on their blog posts. Hacker News, as usual, is outraged that the difficulty of building fast, reliable distributed systems could prevent Heroku from building a magically optimal architecture. Coda Hale quips:

Really enjoying @RapGenius’s latest mix tape, “I Have No Idea How Distributed Systems Work”.

Coda understands the implications of the CAP theorem. This job is too big for one computer–any routing system we design must be distributed. Distribution increases the probability of a failure, both in nodes and in the network itself. These failures are usually partial, and often take the form of degradation rather than the system failing as a whole. Two nodes may be unable to communicate with each other, though a client can see both. Nodes can lie to each other. Time can flow backwards.

For more on Timelike and routing simulation, check out part 2 of this article: everything fails all the time. There’s also more discussion on Reddit.

RapGenius is upset about Heroku’s routing infrastructure. RapGenius, like many web sites, uses Rails, and Rails is notoriously difficult to operate in a multithreaded environment. Heroku operates at large scale, and made engineering tradeoffs which gave rise to high latencies–latencies with adverse effects on customers. I’d like to explore why Heroku’s Bamboo architecture behaves this way, and help readers reason about their own network infrastructure.

To start off with, here’s a Rails server. Since we’re going to be discussing complex chains of network software, I’ll write it down as an s-expression:

I’m not a big fan of legal documents. I just don’t have the resources or ability to reasonably defend myself from a lawsuit; retaining a lawyer for a dozen hours would literally bankrupt me. Even if I were able to defend myself against legal challenge, standard contracts for software consulting are absurd. Here’s a section I encounter frequently:

Ownership of Work Product. All Work Product (as defined below) and benefits thereof shall immediately and automatically be the sole and absolute property of Company, and Company shall own all Work Product developed pursuant to this Agreement.

“Work Product” means each invention, modification, discovery, design, development, improvement, process, software program, work of authorship, documentation, formula, data, technique, know-how, secret or intellectual property right whatsoever or any interest therein (whether or not patentable or registrable under copyright or similar statutes or subject to analogous protection) that is made, conceived, discovered, or reduced to practice by Contractor (either alone or with others) and that (i) relates to Company’s business or any customer of or supplier to Company or any of the products or services being developed, manufactured or sold by Company or which may be used in relation therewith, (ii) results from the services performed by Contractor for Company or (iii) results from the use of premises or personal property (whether tangible or intangible) owned, leased or contracted for by Company.

These paragraphs essentially state that any original thoughts I have during the course of the contract are the company’s property. If the ideas are defensible under an IP law, I could be sued for using them in another context later. One must constantly weigh the risk of thinking under such a contract. “If I consider this idea now, I run the risk of inventing something important which I can never use again.”

Michael Robertson writes:

@Jason @MicahSingleton Biz can pursue profits or racism, but not both. Tech industry is a meritocracy as is all industries in a free market.

and Jason Calacanis adds:

I have it pretty good, in America. I’m White, male, young. Grew up with books. With enough food on the table during critical phases of brain development. In a neighborhood composed of people who looked and spoke like me, a neighborhood with a creek, and trees, and street hockey, somewhere safe. Through deterministic happenstance–a confluence of genetics and education and economics and municipal investment in public education and intellectually challenging parents and the right teachers at pivotal moments–I’m good at thinking about a class of problem which too few people are working on, and present market dynamics allow me to do what I love for far more money than I need.

People grant me the authority to speak as is expected of males, with the lack of recognition of my skin color that comes for people of northern European origin, and for my youth I am forgiven all manner of brash and disrespectful rejoinders. I am significantly more likely to be a victim of a murder, and feel constant pressure to be resolute, correct, gruff. I have never worried for my physical safety in the presence of male companions, and think nothing of walking alone at night. As a motorcyclist and as an engineer I am never the odd one out. I can wear comfortable clothes at formal gatherings. I can enter any building freely, and when boarding a bus, folks never rustle and stare at the delay. I feel tremendously self-conscious when surrounded by people of color. My coworkers never comment about how pretty I am. I am never expected to speak for all young, White males.

I will never have the experience of being a woman; to keep it together when my male coworkers take credit for my ideas and I’m still making $20,000 less than the junior devs fresh out of college. To move from Pakistan to the rural Midwest, to a new culture and bureaucracy, and struggling to learn math my classmates can barely cope with–in a language not my own. To be told all my life that I was White, because the family that adopted me was so much darker, and I was told I had beautiful light skin and to keep from getting tan, and when I moved to New York they called me Black, but I don’t know how to be Black and no one will teach me. To be the only kid from the Rez who went to college, and to have pleaded with admissions to let my dad off the hook for when he wouldn’t lift a fucking drunk-ass finger to fill out the FAFSA, and to remember my grandmother’s strength and intellect and passion: my inspiration always.

tl;dr Riemann is a monitoring system, so it emphasizes liveness over safety.

Riemann is aimed at high-throughput (millions of events/sec/node), partial-harvest event processing, where it is acceptable to trade completeness for throughput at low latencies. For instance, it’s probably fine to drop half of your request latency events on the floor, if you’re calculating a lossy histogram with sampling anyway. It’s also typically acceptable to have nondeterministic behavior with respect to time windows: if one node’s clock is skewed, it’s better to process it “soonish” rather than waiting an unbounded amount of time for it to check in.

There is no synchronization or relationship between events. Events are immutable and have a total order, even though a given server or client may only have a fraction of the relevant events for a system. The events are, in a sense, the transaction log–except that the semantics of those transactions depend on the stream configuration.