Previously on Jepsen, we explored Zookeeper. Next up: Kafka.

NuoDB came to my attention through an amazing mailing list thread by the famous database engineer Jim Starkey, in which he argues that he has disproved the CAP theorem:

The CAP conjecture, I am convinced, is false and can be proven false.

The CAP conjecture has been a theoretical millstone around the neck of all ACID systems. Good riddance.

This is the first wooden stake for the heart of the noSQL movement. There are more coming.

I, and every database user on the planet, not to mention a good part of the distributed systems research community, would love to find a counterexample which disproves the CAP theorem. For that matter, I’m tremendously excited about the possibilities of causal and lattice consistency, which we know are achievable in asynchronous networks. So I was curious: what was NimbusDB (now named NuoDB) up to? How does their consistency model work?

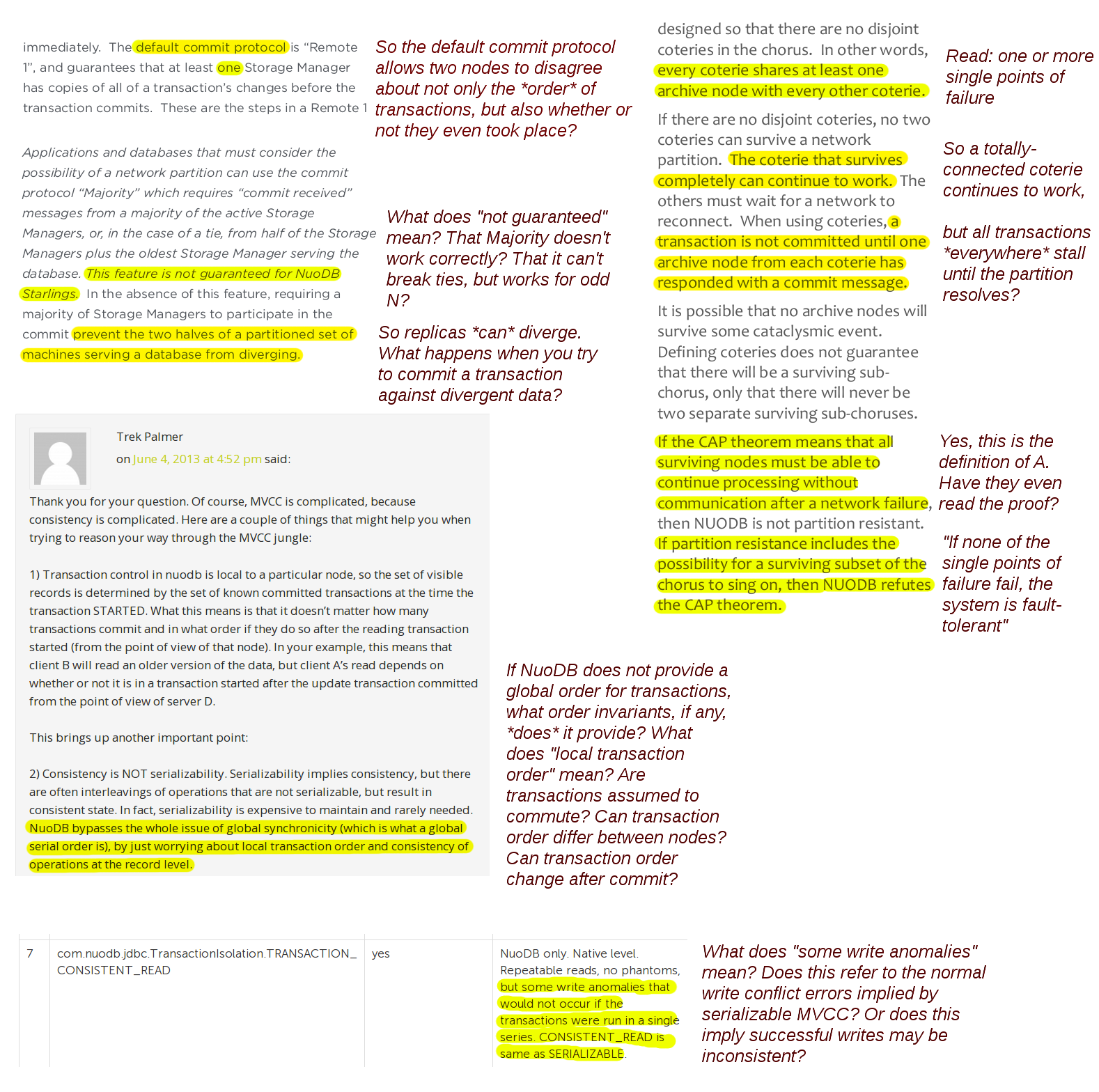

I usually try to understand a new system by reading the documentation, scanning for words like “safety”, “order”, “serializability”, “linearizability”, “consistency”, “conflict”, and “replica”. I keep notes as I go. Here are a few excerpts from my first six hours trying to figure out NuoDB’s consistency invariants:

In particular, I want to draw attention to this excerpt:

If the CAP theorem means that all surviving nodes must be able to continue processing without communication after a network failure, than NUODB is not partition resistant.

This is kind of an odd statement to make, because Gilbert and Lynch’s proof defines “availability” as “every request received by a non-failing node in the system must result in a response.” That would seem to imply that NuoDB does not satisfy CAP availability.

If partition resistance includes the possibility for a surviving subset of the chorus to sing on, then NUODB refutes the CAP theorem.

We know systems exist in which a surviving subset of nodes continue processing during a partition. They are consistent with the CAP theorem because in those systems (e.g. Zookeeper) some requests to non-failing nodes do not succeed. Claiming this “refutes the CAP theorem” is incoherent.

This isn’t getting us anywhere. To figure out how NuoDB actually behaves, we’ll need to set up a cluster and test it ourselves.

Operational notes

Setting up a NuoDB cluster turned out to be more difficult than I anticipated. For starters, there are race conditions in the cluster join process. Each node has a seed node to join to, which determines the cluster it will become a part of. If that seed is inaccessible at startup, the node will quietly become a part of a new, independent cluster, and will not, as far as I can tell, join the original cluster even if the seed becomes accessible later. Consequently, performing a cold start is likely to result in several independent clusters, up to and including every node considering itself the sole node in its own cluster.

This is a catastrophic outcome: if any clients manage to connect to one of these isolated clusters, their operations will almost certainly disagree with the other clusters. You’ll see conflicting row values, broken primary keys, invalid foreign key relationships, and so on. I have no idea how you go about repairing that kind of damage without simply dropping all the writes on one side of the split-brain.

You can join a node to itself. This is easy to do accidentally if you, say, deploy the same seed node to every node’s configuration file. The consequences are… interesting.

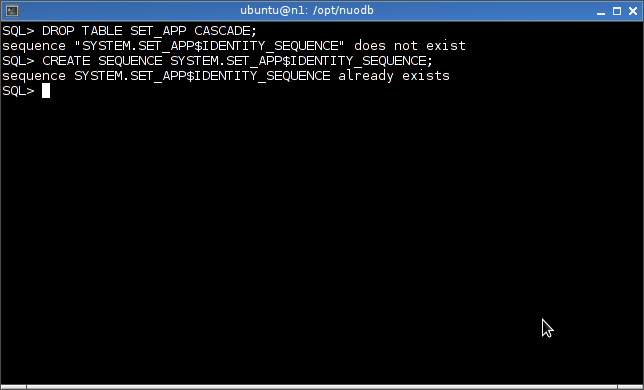

There are also race conditions in database creation. For instance, if you create and delete the same simple table a few times in succession, you can back yourself into this corner, where you can neither use, delete, nor recreate a table, short of nuking the entire cluster:

I’ve talked with the NuoDB team about these bugs, and they’re working on fixing them. Hopefully they won’t be present in future releases.

Finally, be aware that restarting a crashed NuoDB node does not restore its transaction managers or storage managers; if you do a naive rolling restart, all the data vanishes. In my conversations with NuoDB’s engineering staff, it looks like this is actually intended behavior for their customers’ use cases. The cluster also doesn’t set up failover replicas when nodes become unavailable, so it’s easy to accidentally lose all the storage nodes if your membership shifts. NuoDB plans to improve that behavior in future releases.

What happens during partition?

In this NuoDB test, we check the consistency of compare-and-set updates to a single cell, by having transactions compete at the SERIAL consistency level to read, update, and write a vector of numbers. Note that this test does not check multi-key linearizability, or, for that matter, exclude behaviors like P4 (lost update) or P3 (phantom).
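To make the shape of that test concrete, here is a minimal in-memory sketch of the read, update, compare-and-set loop. It is not the Jepsen test itself and it does not talk to NuoDB: `Cell`, `add_element`, and `run_clients` are hypothetical names, and a lock-protected Python object stands in for the single database cell.

```python
import threading


class Cell:
    """Hypothetical stand-in for a single database cell holding a vector of numbers."""

    def __init__(self):
        self._lock = threading.Lock()
        self._value = ()

    def read(self):
        with self._lock:
            return self._value

    def compare_and_set(self, expected, new):
        """Atomically replace the value only if it still equals `expected`."""
        with self._lock:
            if self._value != expected:
                return False
            self._value = new
            return True


def add_element(cell, element, max_attempts=1000):
    """Read the vector, append `element`, write it back with CaS; retry on conflict."""
    for _ in range(max_attempts):
        current = cell.read()
        if cell.compare_and_set(current, current + (element,)):
            return True   # the write was acknowledged
    return False          # gave up: the write is unacknowledged


def run_clients(cell, n_clients=5, writes_per_client=20):
    """Run competing clients and return the set of acknowledged elements."""
    acknowledged = set()
    ack_lock = threading.Lock()

    def client(client_id):
        for i in range(writes_per_client):
            element = client_id * writes_per_client + i  # unique per write
            if add_element(cell, element):
                with ack_lock:
                    acknowledged.add(element)

    threads = [threading.Thread(target=client, args=(c,)) for c in range(n_clients)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return acknowledged
```

The invariant of interest is that every acknowledged element appears in the final vector, i.e. `run_clients(cell) <= set(cell.read())`. In a real test the cell lives in the database and the network is partitioned while the clients run.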

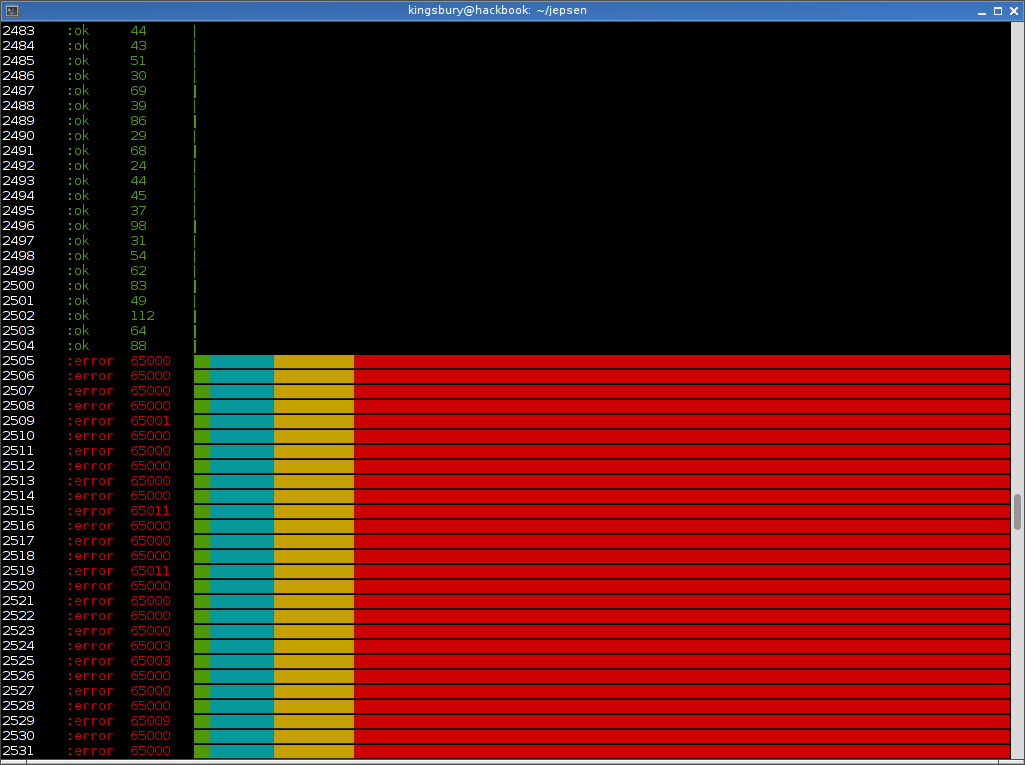

During a partition, with the Java driver, you can see a variety of failure modes:

- “Duplicate value in unique index SEQUENCES..PRIMARY_KEY”

- End of stream reached

- Broken pipe

- Connection reset

- Indefinite latency

And I do mean indefinite. I haven’t actually found an upper limit to how long NuoDB will block. As far as I can tell, when a node is inaccessible, operations will queue up for as long as the partition lasts. Moreover, they block globally: no subset of the cluster responded during the partition, even though a fully connected majority component existed.

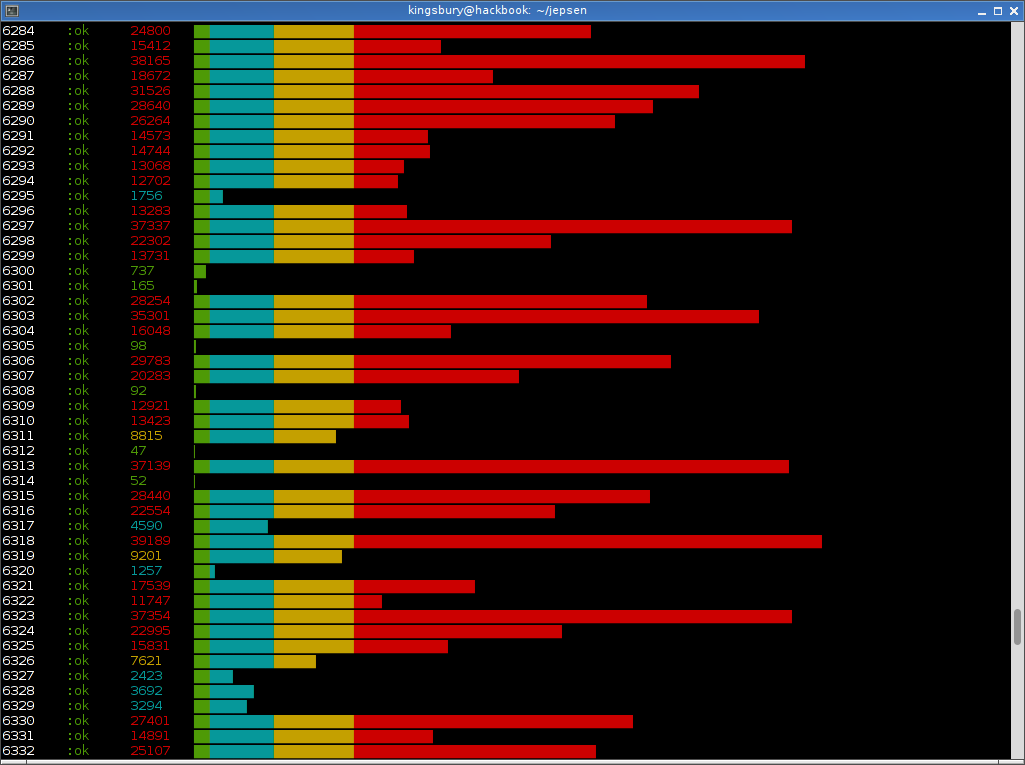

Perhaps because all operations are queued without timeout, it takes a long time for NuoDB latencies to recover after the partition resolves. In my tests, latencies continued to spike well into the 30-60 second range for as many as 1500 seconds after the partition ended. I haven’t found an upper limit for this behavior, but eventually, something somewhere must run out of RAM.

Results

NuoDB typically acknowledged 55% of writes in my tests. Most, but not all, writes made during the partition failed due to CaS conflict and were not retried after Jepsen’s internal timeout. The good news is that all acknowledged writes made at the SERIAL consistency level were present in the final dataset: no dropped writes. There was also a trivial fraction of false negatives (writes reported as failed which nonetheless took effect), which is typical for most CP systems. This indicates that NuoDB is capable of preserving some sort of linear order over CaS operations to a single cell, even in the presence of a partition.
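The bookkeeping behind those numbers is plain set arithmetic. The sketch below is an illustration rather than Jepsen’s actual checker; `analyze` and its argument names are hypothetical, and the data in the usage example is made up to show the shape of the report, not taken from my test runs.

```python
def analyze(attempted, acknowledged, final):
    """Compare what clients attempted and were told against what the database holds.

    attempted:    every element any client tried to write
    acknowledged: elements whose write the database reported as successful
    final:        elements present in the final dataset
    """
    attempted, acknowledged, final = set(attempted), set(acknowledged), set(final)
    return {
        # Fraction of attempted writes that were acknowledged.
        "ack_fraction": len(acknowledged) / len(attempted) if attempted else 0.0,
        # Acknowledged but missing from the final dataset: dropped writes.
        "lost": acknowledged - final,
        # Not acknowledged, yet present anyway: false negatives.
        "false_negatives": (attempted - acknowledged) & final,
        # Present but never attempted: would indicate corruption or a test bug.
        "unexpected": final - attempted,
    }


# Illustrative data only: 20 attempts, 11 acknowledged, one unacknowledged survivor.
report = analyze(range(20), range(11), set(range(11)) | {15})
```

Here `report["lost"]` is empty and `report["false_negatives"]` is `{15}`. A safe outcome for a CP system is an empty `lost` set; a non-empty `false_negatives` set is tolerable so long as clients treat failed writes as indeterminate.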

Note that NuoDB isn’t fully CP, because it does not enforce serializability for all write operations–just “local transaction order”. I’m not exactly sure how the local orders interact, and whether there are practical scenarios which would violate serializability but be allowed by NuoDB’s local transaction invariants. So far I haven’t been able to construct a test to demonstrate the difference.

Does NuoDB refute the CAP theorem? Of course it doesn’t. By deferring all operations until the partition resolves, NuoDB is not even close to available. In fact, it’s a good deal less available than more consistent systems: Zookeeper, for example, remains available on all nodes connected to a majority component. NuoDB is another example of the adage that systems which purport to be CA or CAP usually sacrifice availability or consistency when a partition does occur–and often in spectacular ways.

Blocking all writes during partition is, according to the NuoDB team, intended behavior. However, there is experimental liveness detection code in the most recent release, which will hopefully allow NuoDB to begin timing out requests to inaccessible nodes. I haven’t been able to test that code path yet, but future releases may enable it by default.

If you are considering using NuoDB, be advised that the project’s marketing and documentation may exceed its present capabilities. Try to enable the liveness detection code, and set up your own client timeouts to avoid propagating high latencies to other systems. Try to build backpressure hints into your clients to reduce the requests against NuoDB during failure; the latency storm which persists after the network recovers is proportional to the backlog of requests. Finally, be aware of the operational caveats mentioned earlier: monitor your nodes carefully, restart their storage and transaction managers as appropriate, and verify that newly started nodes have indeed joined the cluster before exposing them to clients.
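As a rough sketch of what client-side timeouts and backpressure could look like, the wrapper below bounds how long a caller waits and refuses new work while too many requests are still outstanding. It assumes nothing about the NuoDB driver: `GuardedClient`, `Overloaded`, and the `operation` callable are hypothetical, and the thread pool merely stands in for whatever issues the real query.

```python
import threading
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


class Overloaded(Exception):
    """Raised when too many requests are already in flight."""


class GuardedClient:
    """Hypothetical wrapper: caller-side timeout plus a cap on in-flight requests."""

    def __init__(self, max_in_flight=8, timeout_s=1.0):
        self._slots = threading.BoundedSemaphore(max_in_flight)
        self._pool = ThreadPoolExecutor(max_workers=max_in_flight)
        self._timeout_s = timeout_s

    def call(self, operation, *args):
        # Backpressure: reject immediately rather than queue behind stuck requests.
        if not self._slots.acquire(blocking=False):
            raise Overloaded("too many requests in flight; shedding load")
        try:
            future = self._pool.submit(operation, *args)
        except Exception:
            self._slots.release()
            raise
        # The slot is freed only when the operation really finishes, not when the
        # caller gives up, so blocked requests keep counting against the limit.
        future.add_done_callback(lambda _f: self._slots.release())
        # Timeout: raises FutureTimeout if no result arrives within timeout_s.
        return future.result(timeout=self._timeout_s)
```

A caller would catch `FutureTimeout` and `Overloaded`, treat the former as an indeterminate result, and surface the latter upstream as a signal to slow down. Note that timing out on the client does not cancel the request on the server; it only stops the latency from propagating.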

Finally, I want to note (as always) that the presence of bugs does not mean that the NuoDB engineers are incompetent–in fact, I want to assert the opposite. In my discussions with the NuoDB team I’ve found them to be friendly, capable, aware of the product’s limitations, and doing their best to solve a difficult problem within constraints of time, budget, and complexity. Given time, I’m sure they’ll get past these initial hurdles. From one employee:

I only hope you’ll footnote that crazy CAP rambling with the disclaimer that no one at NuoDB today actually agrees with Jim’s comments in that thread.

In the next post, we’ll learn about Kafka 0.8’s proposed replication model.

Any discussion of the CAP “theorem” revolves around the A – exactly what does availability mean?

The narrow sense is that availability means that all surviving nodes in all partitions continue to process work. This reduces the CAP idea to nothing more than “there is no consistency without communication.” Well, duh. This is of interest only to third rate academics a few papers short of a tenure package.

A more useful interpretation of availability is that a system maximizes availability while ensuring that a) at most one partition survives, b) no transaction committed in the surviving partition is lost, and c) no transaction not actually committed in the surviving partition is reported or treated as committed.

A useful discussion of a database system vis a vis CAP is whether or not it maintains strict and robust ACID properties in the face of an arbitrary partition event and the degree to which the surviving partition provides availability. This is not a low bar.

The design of NuoDB had these properties: at most one partition could survive a partition event, no transaction committed in the surviving partition could be lost, and no transaction in a non-surviving partition could be reported as committed (admittedly, this required the DBA to set the highest level of commit synchronization). As I left the company before V1 shipped, I will leave the discussion of whether the implementation was faithful to the design to others.