Previously, on Jepsen, we reviewed Elasticsearch’s progress in addressing data-loss bugs during network partitions. Today, we’ll see Aerospike 3.5.4, an “ACID database”, react violently to a basic partition.

[Update, 2018-03-07] See the followup analysis of 3.99.0.3

Aerospike is a high-performance, distributed, schema-less, KV store, often deployed in caching, analytics, or ad tech environments. Its five-dimensional data model is similar to Bigtable or Cassandra: namespaces (databases) contain sets (tables) of records, where keys identify records. Each record is a map of bin names to values. Aerospike has put a good deal of work into performance across good-size (~100TB) datasets, and is repositioning itself as a general purpose datastore competitive with, say, MongoDB.

Data is sharded and balanced between servers using a Paxos-based membership algorithm. Stored procedures are available in Lua and allow for MapReduce-style parallel queries. There’s a lot to like here. However, Aerospike makes a dangerous assumption for a distributed datastore: it assumes the network is reliable. In this post, we’ll explore what happens in Aerospike 3.5.4 when the network is not reliable.

Availability

Aerospike’s marketing copy delivers. The home page, for example, advertises “100% Uptime”. This makes Aerospike’s uptime infinitely better than the Ericsson AXD301 switch, which delivered somewhere between five and nine nines of availability in a system comprised of 1.1 million lines of Erlang. That this level of reliability can be obtained in a distributed database–notoriously fickle beasts–is nothing short of remarkable.

I would be delighted to use any software this dependable–but how does it work?

In the ACID architecture documentation they elaborate:

Aerospike is by and large an AP system that provides high consistency by using the following techniques:

Recall that an AP system provides total availability: every request to a non-crashed node must succeed, regardless of any disruption in the network between the nodes. How do they provide “high consistency”?

- Trade off availability and consistency at a finer granularity in each subsystem

OK!

- Restrict communication latencies between nodes to be sub-millisecond

Real network disruptions are usually finite in length: you should expect lots of short-term delays on the order of microseconds, a few on the order of minutes, and rarely, for hours or days. If your system’s characteristic latencies (e.g. timeouts) are on the order of minutes, disruption of a few seconds won’t affect logical correctness. If, however, you consider a node dead after a millisecond, that means leader elections–and the chance for multiple primaries to commit conflicting data–will occur more often. On the other hand, the window for data loss might be shorter because isolated primaries step down sooner. Tradeoffs.

- Leverage the high vertical scale of Aerospike (1 million TPS and multiple terabyte capacity per node) to ensure that cluster sizes stay small (between 1 and 100 nodes)

Small cluster sizes do reduce the probability of seeing any partition, but in a sharded system like Aerospike, Riak, or Cassandra, the only network paths that matter are those between the n (usually 2 to 5) replicas of any given key. As the number of machines N grows, n remains constant: link failures for individual nodes affect less and less of the keyspace. What matters more is higher-order topology: top-of-rack and distribution switches–and those should usually sit between replicas in your cluster anyway to maximize availability.

In a cloud environment, most bets are off.

- Virtually eliminate partition formation as proven by years of deployments in data center and cloud environments

As software engineers, the network is the Symplegades to our Argo, the Velociraptors in our Jurassic Park, the Aristotle Benson to our Jamie. The network decides what it’s going to allow and we’ve got to work within those bounds.

- Ensure extremely high consistency and availability during node failures and rolling upgrades (in the absence of partitions that are rare anyway)

“Provides high consistency by … ensur[ing] extremely high consistency.”

- Provide automatic conflict resolution to ensure newer data overrides older data during cluster formation

Fair enough. Every AP system is going to have some sort of conflict management strategy.

So, what does “high consistency” mean, exactly?

Consistency

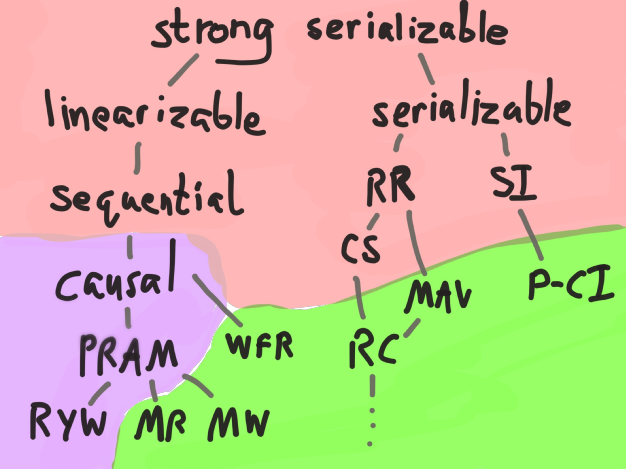

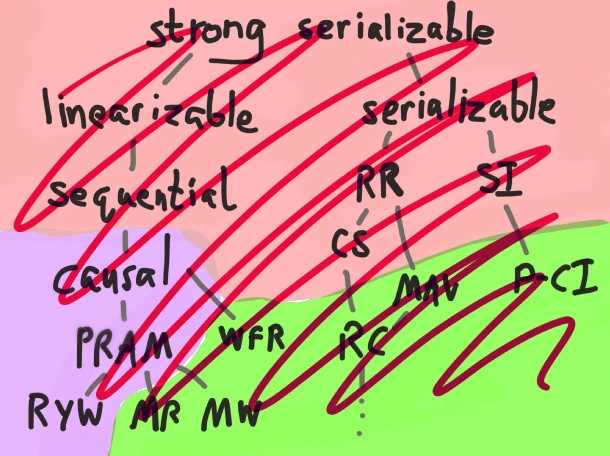

On the home page and throughout their white papers, Aerospike claims to offer “ACID consistency”. ANSI SQL 92 defines (sort of; you can interpret their English definitions in either “anomalous” or “strict” terms) four levels for transaction isolation, which place increasingly stronger invariants around the interleaving of operations from different transactions in terms of four disallowed phenomena:

- Read Uncommitted (prohibits P0: dirty writes)

- Read Committed (prohibits P0 & P1: dirty reads)

- Repeatable Read (prohibits P0, P1 & P2: fuzzy reads)

- Serializable (prohibits P0, P1, P2, & P3: phantom reads)

… and there are also MVCC isolation models, Snapshot Isolation, Monotonic Atomic View, and so on, each with varying guarantees and different levels of availability. In the map to the right, the right hand branch shows various ACID isolation models. Purple denotes sticky-available ones, and red shows models that must sacrifice availability during a partition.

Which of these isolation levels does Aerospike support? The ACID whitepaper elaborates:

Aerospike provides read-committed isolation level using record locks to ensure isolation between multiple transactions.

Read-committed is achievable in a totally available (AP) system! It falls within the green region. So far, everything’s consistent. But the white paper goes on:

For operations on single keys with replication and secondary indexes, Aerospike provides immediate consistency using synchronous writes to replicas within the cluster, i.e., the client will be intimated about the successful write only if the replica is also updated.

And then,

After a write is completely applied and the client is notified of success, all subsequent read requests are guaranteed to find the newly written data: there is no possibility of reading stale data. Therefore, Aerospike transactions provide immediate consistency.

This suggests reads must linearize with respect to writes–and we know from the CAP theorem that linearizable systems cannot be totally available. This system must sacrifice either availability or consistency when a partition occurs. But which?

AP vs CP mode

The whitepaper helpfully includes a discussion of this tradeoff in its final section: Deployment modes – AP vs CP. The section on “CP mode” is mostly speculation, mainly because CP mode doesn’t exist.

The AP mode that Aerospike supports today prioritizes availability and therefore can be consistent only when partitions do not occur. In the future, Aerospike will add support for CP mode as an additional deployment option.

They go on to warn:

A key design point of Aerospike is to setup cluster nodes that are tightly coupled so that partitions are virtually impossible to create.

OK. So what magical network does Aerospike recommend we deploy on? The deploy page leads with Google Compute Engine and Amazon EC2, both of which have notoriously flaky networks.

How do you get sub-millisecond latency bounds out of EC2’s network? The Amazon Deployment Guide “recommend[s] the use of placement groups for Aerospike server instances,” e.g., you should put all your nodes in the same Availability Zone. Availability zones tend to fail dramatically every year or so, which raises the question of how we’re going to achieve that 100% uptime promised on the Aerospike home page.

To add further redundancy to Aerospike in AWS using Availability Zone (AZ), you can set up two cluster across two different availability zones such that there is one set of data in each AZ.

If you wish to configure an Aerospike cluster across two zones or regions, we suggest you use an Application-level Queue like Kafka or RabbitMQ.

Aerospike Enterprise Edition has Cross Data Center Replication (XDR) feature, which handles clusters located in multiple data centers. Please contact us for further details.

This is starting to sound less like “100% uptime with ACID consistency” than I’d hoped. Let’s put it to the test.

Linearizable CAS registers

To establish whether operations are linearizable, we’ll use a single bin in a single key as a register, and use Aerospike’s conditional writes to implement a compare-and-set register on top of that record. Our Jepsen client will handle reads, cas, and write ops by fetching the current value, performing a read+conditional-write, and an unconstrained write, respectively.

Then we’ll feed a mixture of randomly selected read, write, and CaS ops to that client, while interrupting the network in a 10-seconds-on, 10-seconds-off schedule, and analyze the results with Knossos to see whether they’re consistent with a linearizable history. When a client thinks a value has successfully been written, is it actually visible to others?

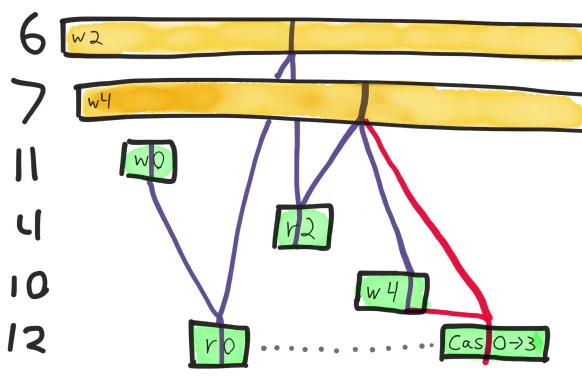

Inconsistent state transitions:

([{:value 4} "can't CAS 4 from 0 to 3"])

The answer is no.

Jepsen routinely detects linearizability violations in a matter of seconds, on both read and CaS operations. For instance, this analysis follows a history where the only possible state for the register was 4, but an Aerospike client was able to execute a compare-and-set of 0 to 3. This implies both reads and conditional writes are unsafe, since the Jepsen client validates that the read value was 0 before issuing a conditional write.

In this timeline, process 6 and process 7 have pending writes of the values 2 and 4 respectively, which timed out due to a network partition. Process 11 writes 0, and that write is visible to process 12, which reads 0. Then process 4 reads 2, which means process 6’s write must have gone through.

Next, process 10 writes 4 successfully–and process 12 executes a compare-and-set from 0 to 3. This should be impossible–process 12 should have seen the most recent write of 4, not 0!

Could the value have been changed by another crashed write? No–the only other concurrent write was process 7’s write of 4, which would leave the value unchanged. This history is not consistent with a linearizable register.

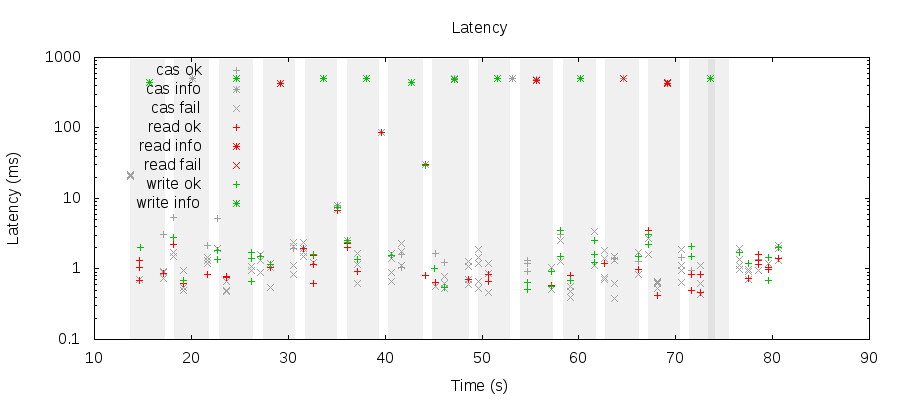

We don’t even have to wait 10 seconds between network transitions. Even disruptions which resolve within a second or two is enough to induce data loss and unavailability. I expect that even millisecond-scale network disruptions should be sufficient, but Jepsen can’t control the network on that fine a timescale yet. This graph shows partitions as grey regions, and point type indicates what the Aerospike client thought happened: successful ops as +, known failed operations as x, and indeterminate ops as *.

We’re setting a timeout of 500ms here, and operations still time out every time a partition between nodes occurs. In these tests we aren’t interfering with client-server traffic at all.

Aerospike may claim “100% uptime”, but this is only meaningful with respect to particular latency bounds. Given Aerospike claims millisecond-scale latencies, you may want to reconsider whether you consider this “uptime”.

Counters

Linearizability is a relatively strict constraint, though. Aerospike is often deployed as an analytics datastore for high-volume things like counters. Counter increments are commutative, which make them excellent candidates for conflict resolution! Aerospike may not be able to offer linearizability, but it might offer eventually consistent counters.

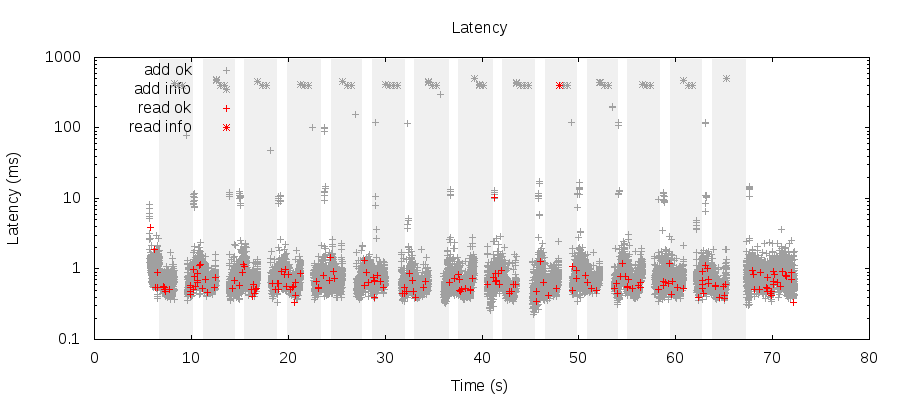

We’ll use the built-in add method from the Aerospike Java client to build a client that accepts add ops to increment a counter, and read ops to fetch the current value.

We know the true value of a counter should be at most the number of attempted increments, and at least the number of acknowledged increments. This analyzer verifies that any read operations overlap with a counter value in that range–and can tell us how far out of bounds each read falls.

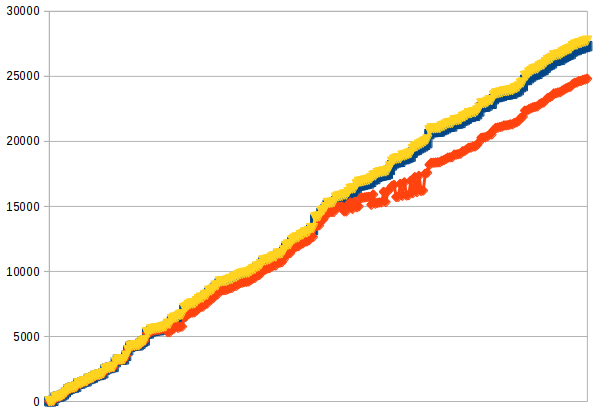

The (awful, I know) graph to the right shows the counter value from a typical run, plotted over something roughly analogous to time. The observed value, in orange, should lie within the blue and yellow bounds given by the number of successful and acknowledged increments. However, as the network shifts, the counter drifts lower and lower. By the time of the final read, about 10% of the increment operations have been lost.

There’s an interesting anomaly in the middle of this graph–for a time, the observed value fluctuates between two clusters of incrementing values. This is a visible consequence of split-brain: two primary nodes both believe they are authoritative, and are processing updates and reads for significantly different values of the counter.

Just like the CaS register test, increment and read latencies will jump from ~1 millisecond to ~500 milliseconds when a partition occurs. Timeouts are not availability.

However, we may be willing to tolerate higher latencies!

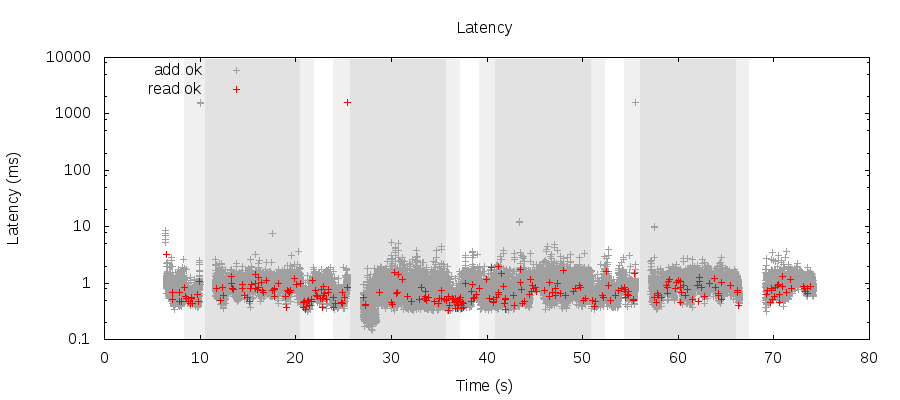

If we raise the timeouts arbitrarily high (and increase the length of partitions to 10 seconds to ensure operations can’t just block for the duration of the partition itself) Aerospike can service every request successfully, peaking at ~2 seconds. Note that fewer requests time out because Jepsen uses a fixed-concurrency test–slower response times mean fewer requests in any given interval.

I’d consider these very good figures for latency overall; many systems have characteristic recovery times on the order of 60 seconds, not 2 seconds! I think Aerospike deserves a round of applause here.

Conflict resolution

A CRDT like a PN-counter wouldn’t show this type of behavior. Although read values would drop below bounds temporarily (in the scope of a partition), when the partition resolved, the PN-counter’s merge function would recombine the increments from both sides and we’d read a value within bounds.

Aerospike’s counter behavior is characteristically different: it may be eventually consistent in that clients agree on a value, but it’s not the value we want. The damage is done, so to speak. Why?

At a later point, if the factions rejoin, data that has been written in both factions will be detected as inconsistent. Two policies may be followed. Either Aerospike will auto-merge the two data items (default behavior today) or keep both copies for application to merge later (future). Auto merge works as follows:

- TTL (time-to-live) based: The record with the highest TTL wins

- Generation based: The record with the highest generation win

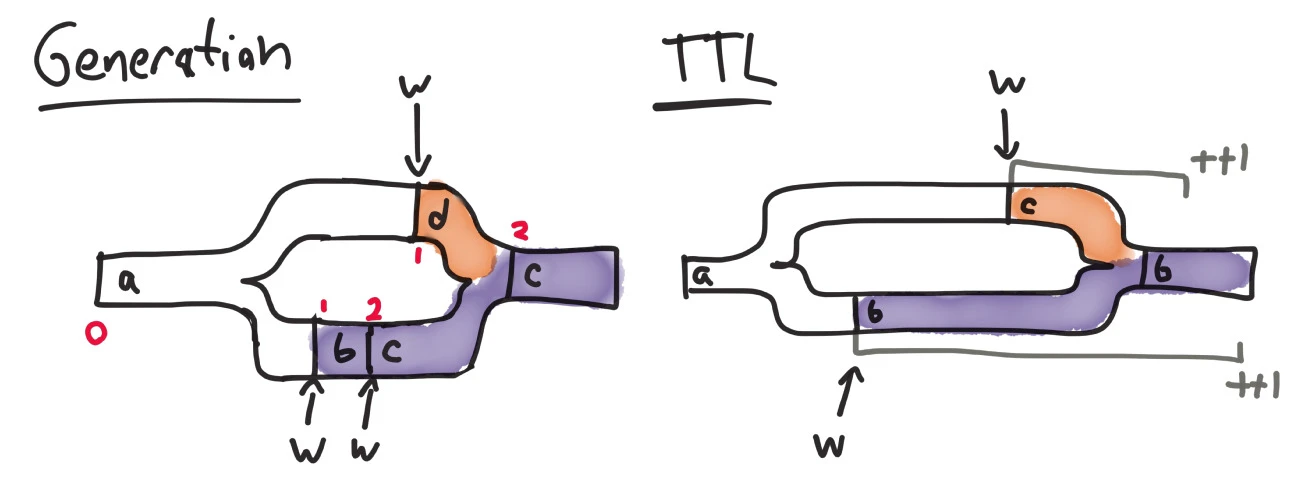

Using the generation number preserves the record that has gone through the most changes since divergence. In the diagram below, a register containing a, with generation 0, is split by a network partition. The lower replica accepts two writes of b and c, respectively, making its generation 2. The upper replica then accepts a single write of d, and has generation 1. Since the lower replica containing c underwent more changes, it wins, clobbering the subsequent write of d.

In TTL-based conflict resolution, the version with the higher Time To Live wins. It’s not clear to me whether Aerospike uses the size of the TTL or the time the record is supposed to expire, but both are inconsistent. An earlier write can clobber a later one if its TTL happens to be higher, or if its local clock is misaligned, etc. If the values are equal, the best option is for Aerospike to fall back to generation-based conflict resolution. If the generations are equal? It’s a coin flip.

There’s no way for these strategies to not lose updates. I asked Aerospike’s support about the data-loss issues I saw in my tests, and they recommended using the generation policy for counters and TTL resolution for “idempotent changes”. In my tests, both conflict resolution strategies resulted in identical data-loss patterns.

Lost updates (and related anomalies in Aerospike) invalidate its claims to every ACID isolation level. We’re not just talking about the temporary visibility of garbage data–we’re talking about accepting updates that never should have happened in the first place, like claiming a unique ID twice, or double-withdrawing a balance.

So long as Aerospike automatically merges data in this way, we can’t avoid write loss. But we can take advantage of a feature called “application merge”, which, like Riak or CouchDB, presents divergent versions of a record to the client for conflict resolution. From the ACID whitepaper:

Application merge works as follows:

- When two versions of the same data item are available in the cluster, a read of this value will return both versions, allowing the application to resolve the inconsistency.

- The client application – the only entity with knowledge of how to resolve these differences – must then re-write the data in a consistent fashion.

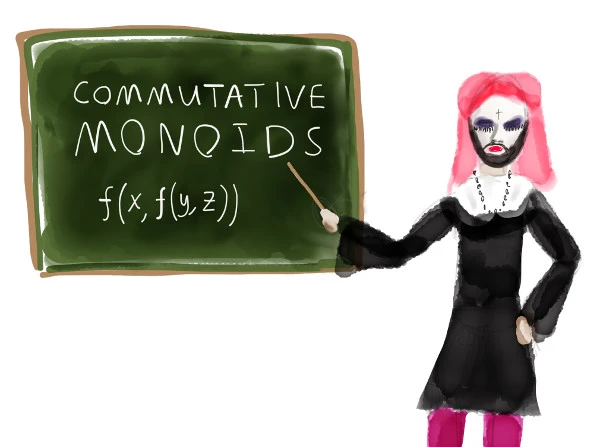

If our merge function is associative and commutative, we obtain a structure called a commutative monoid, which frees us from having to care about which side performed updates in which order. We can simply mash all the updates together any which way and get the same result. However, this isn’t enough! We don’t have just a list of updates–we only have the merged result of two possibly intersecting sets of updates on two different replicas. A normal merge would double-count operations common to both histories.

We have to add a third property: idempotence. merge(merge(x, y), y) should be the same as merge(x, y).

If our merge function is associative, commutative, and idempotent, we obtain what’s called a CRDT, or Commutative Replicated Datatype. CRDTs ensure that reads eventually converge on a least upper bound for all past operations. No updates lost, nothing double-counted. We still have the possibility of stale reads, but they’ll eventually converge on the right value.

So, let’s test it! We’ll just google for “aerospike application merge” to figure out where this feature lives, and find this question on the Aerospike forums:

“Wanted to know if Application based merge still works?”

Currently, TTL and generation are still the only 2 options available for handling conflict.

Like CP mode, this important safety feature does not actually exist.

Recommendations

Aerospike offers phenomenal latencies and throughput–but in terms of data safety, its strongest guarantees are similar to Cassandra or Riak in Last-Write-Wins mode. It may be a safe store for immutable data, but updates to a record can be silently discarded in the event of network disruption. Because Aerospike’s timeouts are so aggressive–on the order of milliseconds–even small network hiccups are sufficient to trigger data loss.

If you are an Aerospike user, you should not expect “immediate”, “read-committed”, or “ACID consistency”; their marketing material quietly assumes you have a magical network, and I assure you this is not the case. It’s certainly not true in cloud environments, and even well-managed physical datacenters can experience horrible network failures.

Aerospike delivers millisecond-scale latencies when healthy, but even small network hiccups can cause timeouts. This is not particularly surprising–most AP systems go briefly unavailable (or equivalently, have much higher latencies) while converging on a new state when the network changes, but you should keep it in mind: “100% uptime” probably won’t hold through network failure if you demand responses within 50 or even 500 ms. If you’re willing to wait a few seconds for requests, though, Aerospike appears to satisfy.

Aerospike uses a Paxos implementation as a part of their membership algorithm, so they’re clearly no strangers to consensus systems. I asked whether they planned to address these problems by threading writes through their Paxos implementation, and they responded that this would impose unacceptable overhead for their latency-sensitive customers.

Aerospike synchronously replicates to all replicas by default, and fast generalized Paxos only requires acknowledgement from a majority, so on paper using Paxos would actually improve performance–but the extra state machinery required might outweigh the network latency costs. Or their particular Paxos implementation may require more rounds. That’s a balance they’ll have to explore!

Keep in mind that even if Aerospike doesn’t use Paxos for their writes, write loss may be tolerable! Many ad tech systems process millions of requests per second, and losing data for a tenth of those over a few minutes might mean nothing to their bottom line. What does cost money is serving ads slower than one’s competitors–so a system like Aerospike could be a perfect fit. The same goes for many analytics stores–so long as the cluster is healthy more often than not, lost increments can wash out in the noise. Most caches tolerate lost updates just fine.

Bottom line: consider Aerospike as a high-volume, lossy key-value store, not as a source of record for high-value mutable data.

This work is a part of my research at Stripe, and I would like to thank everyone there–especially Marc Hedlund–for helping me test distributed systems and publish articles like this. I’d like to thank Lucien Volmar, Kevin Porter, and Peter Corless from Aerospike for their help in getting the cluster set up and evaluating test results. Finally, my thanks to Caitie McCaffrey, Duretti Hirpa, Coda Hale, and Inés Sombra for their very helpful comments.

The legend on the latency graph isn’t really well placed IMO.