The trouble with timestamps

Some folks have asked whether Cassandra or Riak in last-write-wins mode are monotonically consistent, or whether they can guarantee read-your-writes, and so on. This is a fascinating question, and leads to all sorts of interesting properties about clocks and causality.

There are two families of clocks in distributed systems. The first are often termed wall clocks, which correspond roughly to the time obtained by looking at a clock on the wall. Most commonly, a process finds the wall-time clock via gettimeofday(), which is maintained by the operating system using a combination of hardware timers and NTP, a network time synchronization service. On POSIX-compatible systems, this clock returns integers which map to real moments in time via a certain standard, like UTC, POSIX time, or less commonly, TAI or GPS.

The second type are the logical clocks, so named because they measure time associated with the logical operations being performed in the system. A Lamport clock, for instance, is a monotonically increasing integer which is incremented on every operation by a node. Vector clocks are a generalization of Lamport clocks, where each node tracks the maximum Lamport clock from every other node.
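To make the two logical clocks concrete, here is a minimal Python sketch. The class names, method names, and the `happened_before` helper are all my own illustration, not any particular database's implementation, and the sketch assumes each node is identified by a hashable ID and that timestamps travel along with messages.

```python
class LamportClock:
    """A per-node logical clock: a single monotonically increasing integer."""

    def __init__(self):
        self.time = 0

    def tick(self):
        # Every local operation (including sending a message) advances the clock.
        self.time += 1
        return self.time

    def receive(self, remote_time):
        # On receipt, jump past the sender's timestamp so that the
        # receive event is ordered after the send event.
        self.time = max(self.time, remote_time) + 1
        return self.time


class VectorClock:
    """One counter per node; each node tracks the highest value it has seen from every other node."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.counters = {node_id: 0}

    def tick(self):
        # Advance only this node's own entry, and return a snapshot.
        self.counters[self.node_id] = self.counters.get(self.node_id, 0) + 1
        return dict(self.counters)

    def receive(self, remote):
        # Take the element-wise maximum, then count the receive as an operation.
        for node, count in remote.items():
            self.counters[node] = max(self.counters.get(node, 0), count)
        return self.tick()


def happened_before(a, b):
    """True if vector timestamp a causally precedes vector timestamp b."""
    nodes = set(a) | set(b)
    no_entry_greater = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    some_entry_smaller = any(a.get(n, 0) < b.get(n, 0) for n in nodes)
    return no_entry_greater and some_entry_smaller
```

If neither `happened_before(x, y)` nor `happened_before(y, x)` holds, the two timestamps are concurrent. A vector clock can tell you that; a single Lamport integer cannot.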

Burn the Library

Write contention occurs when two people try to update the same piece of data at the same time.

We know several ways to handle write contention, and they fall along a spectrum. For strong consistency (or what CAP might term “CP”) you can use explicit locking, perhaps provided by a central server; or optimistic concurrency where writes proceed through independent transactions, but can fail on conflicting commits. These approaches need not be centralized: consensus protocols like Paxos or two-phase-commit allow a cluster of machines to agree on an isolated transaction, with either pessimistic or optimistic locking, even in the face of some failures and partitions.
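As a rough illustration of the optimistic end of that spectrum, here is a single-process Python sketch in which a commit succeeds only if nobody else has written since the caller read. `VersionedStore` and `ConflictError` are hypothetical names invented for this example; the internal lock only makes the compare-and-set step atomic, and a real distributed system would need a consensus protocol to get the same guarantee across machines.

```python
import threading


class ConflictError(Exception):
    """Raised when a commit is based on a stale version."""


class VersionedStore:
    """In-memory key-value store with version-checked (optimistic) writes."""

    def __init__(self):
        self._lock = threading.Lock()
        self._data = {}  # key -> (version, value)

    def read(self, key):
        # Returns (version, value); a missing key reads as version 0.
        with self._lock:
            return self._data.get(key, (0, None))

    def commit(self, key, expected_version, new_value):
        # The write succeeds only if the version is unchanged since the read.
        with self._lock:
            current_version, _ = self._data.get(key, (0, None))
            if current_version != expected_version:
                raise ConflictError(
                    f"expected version {expected_version}, found {current_version}"
                )
            self._data[key] = (current_version + 1, new_value)
            return current_version + 1
```

Two writers who both read version 0 can both attempt a commit; the first wins, and the second gets a `ConflictError` and has to re-read and retry. Nobody waits on a lock while thinking, which is the appeal, and the cost is that losing writers must redo their work.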

Do not expose Riak to the internet

Major thanks to John Muellerleile (@jrecursive) for his help in crafting this.

Actually, don’t expose pretty much any database directly to untrusted connections. You’re begging for denial-of-service issues; even if the operations are semantically valid, they’re running on a physical substrate with real limits.

Riak, for instance, exposes mapreduce over its HTTP API. Mapreduce is code; code which can have side effects; code which is executed on your cluster. This is an attacker’s dream.

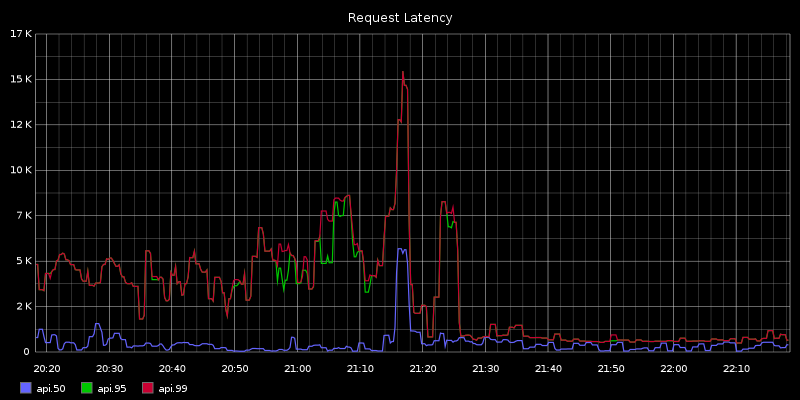

Riak-pipe mapreduce

As a part of the exciting series of events (long story…) around our Riak cluster this week, we switched over to riak-pipe mapreduce. Usually, when a node is down, mapreduce times shoot through the roof, which causes slow behavior and even timeouts on the API. Riak-pipe changes that: our API latency for mapreduce-heavy requests like feeds and comments fell from 3-7 seconds to a stable 600ms. Still high, but at least tolerable.

[Update] I should also mention that riak-pipe MR throws about a thousand apparently random, recoverable errors per day. Things like map_reduce_error with no explanation in the logs, or {"lineno":466,"message":"SyntaxError: syntax error","source":"()"} when the source is definitely not "()". Still haven’t figured out why, but it seems vaguely node-dependent.

Systems Security: A Primer

The riak-users list receives regular questions about how to secure a Riak cluster. This is an overview of the security problem, and some general techniques to approach it.

Theory

You can skip this, but it may be a helpful primer.