Previously in Jepsen, we discussed MongoDB. Today, we’ll see how last-write-wins in Riak can lead to unbounded data loss.

So far we’ve examined systems which aimed for the CP side of the CAP theorem, both with and without failover. We learned that primary-secondary failover is difficult to implement safely (though it can be done; see, for example, ZAB or Raft). Now I’d like to talk about a very different kind of database–one derived from Amazon’s Dynamo model.

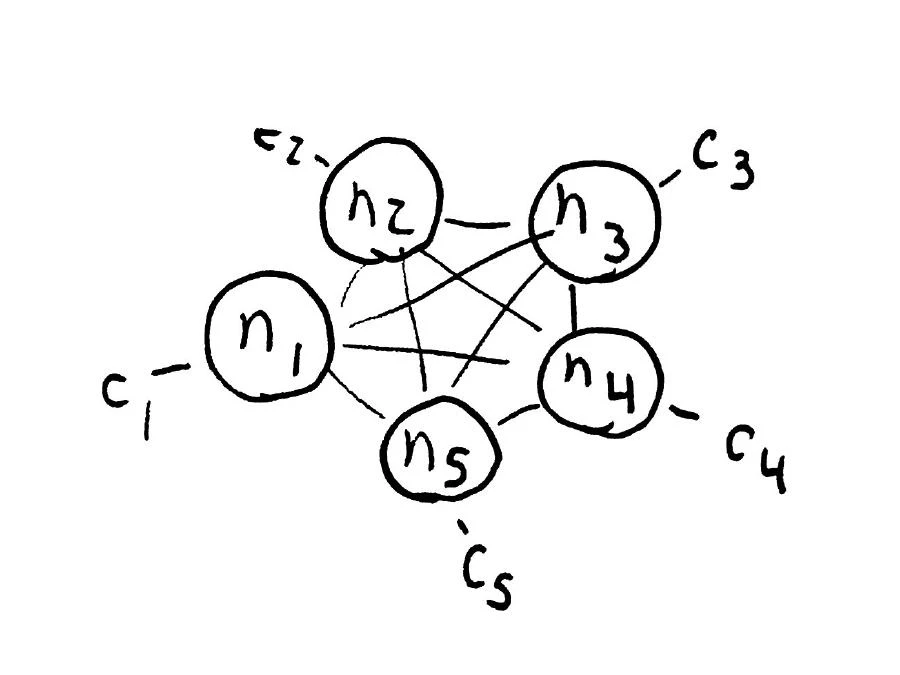

Amazon designed Dynamo with the explicit goals of availability and partition tolerance–and partition-tolerant systems automatically handle node failure. It’s just a special kind of partition. In Dynamo, all nodes are equal participants in the cluster. A given object is identified by a key, which is consistently hashed into N slots (called “partitions”; not to be confused with a network partition) on a ring. Those N slots are claimed by N (hopefully distinct) nodes in the cluster, which means the system can, once data is replicated, tolerate up to N-1 node failures without losing data.

When a client reads from a Dynamo system, it specifys an R value: the number of nodes required to respond for a read to be successful. When it writes, it can specify W: the number of nodes which have to acknowledge the write. There’s also DW for “durable write”, and others. Riak has sometimes referred to these as “tunable CAP controls”: if you choose R=W=1, your system will be available even if all but one node fail–but you may not read the latest copy of data. If R + W is greater than N/2, you’re “guaranteed to read acknowledged writes”, with caveats. The defaults tend to be R=W=quorum, where quorum is N/2+1.

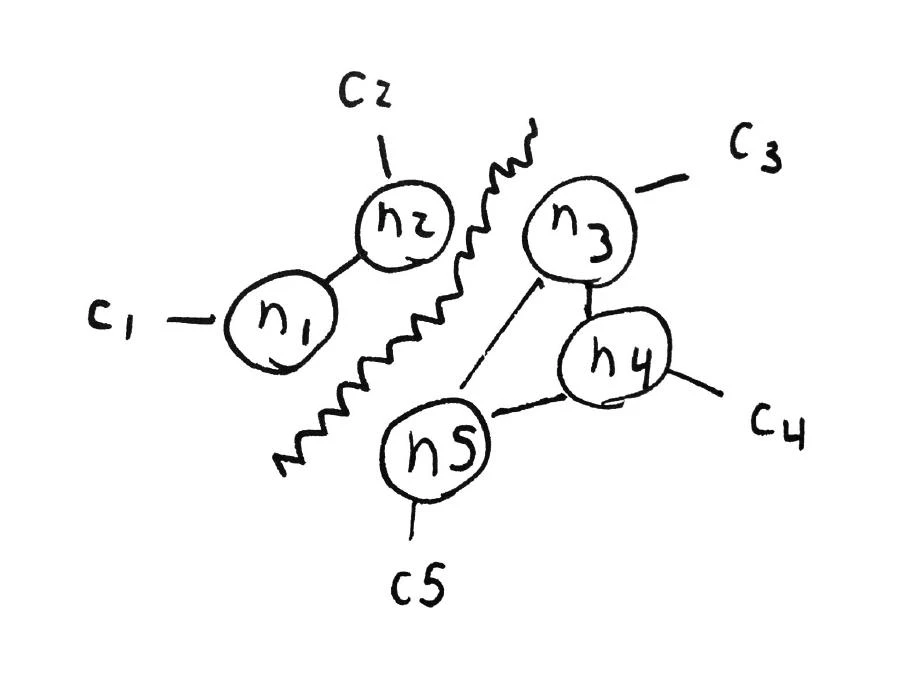

Dynamo handles partitions by healing the ring. Each connected set of machines establishes a set of fallback vnodes, to handle the portions of the ring which are no longer accessible. Once failover is complete, a Dynamo cluster split into two disjoint components will have two complete hash rings, and (eventually, as repair completes) 2 * N copies of the data (N in each component). When the partition heals, the fallback vnodes engage in hinted handoff, giving their data back to the original “primary” vnodes.

Since any node can accept writes for its portion of the keyspace, a Dynamo system can theoretically achieve 100% availability, even when the network fails entirely. This comes with two drawbacks. First, if no copy of a given object is available in an isolated set of nodes, that part of the cluster can accept writes for that object, but the first reads will return 404. If you’re adding items to a shopping cart and a partition occurs, your cart might appear to be empty. You could add an item to that empty cart, and it’d be stored, but depending on which side of the partition you talk to, you might see 20 items or just one.

When the partition heals, we have a new problem: it’s not clear which version of an object is authoritative. Dynamo employs a causality-tracing algorithm called vector clocks, which means it knows which copies of an object have been overwritten by updates, and which copies are actually conflicts–causally unconnected–due to concurrent writes.

Concurrent. We were talking about partitions, right? Two writes are concurrent if they happen in different components and can’t see each other’s changes, because the network didn’t let them communicate.

Well that’s interesting, because we’re also used to concurrency being a property of normal database systems. If two people read an object, then write it back with changes, those writes will also conflict. In a very real sense, partitions are just really big windows of concurrency. We often handle concurrent writes in relational databases with multi-version concurrency control or locks, but we can’t use locks here because the time horizons could be minutes or hours, and there’s no safe way to distribute a lock algorithm over a partition. We need a different approach. We need to be able to merge arbitrary conflicting objects for Dynamo to work. From the paper:

For instance, the application that maintains customer shopping carts can choose to “merge” the conflicting versions and return a single unified shopping cart. Despite this flexibility, some application developers may not want to write their own conflict resolution mechanisms and choose to push it down to the data store, which in turn chooses a simple policy such as “last write wins”.

Last write wins. That sounds like a timestamp. Didn’t we learn that Clocks Are Not To Be Trusted? Let’s try it and find out!

Riak with last-write-wins

Riak is an excellent open-source adaptation of the Dynamo model. It includes a default conflict resolution mode of last-write-wins, which means that every write includes a timestamp, and when conflicts arise, it picks the one with the higher timestamp. If our clocks are perfectly synchronized, this ensures we pick the most recent value.

To be clear: there are actually two settings in Riak which affect conflict resolution: lww=true, which turns off vector clock analysis entirely, and allow-mult=false, which uses vector clocks but picks the sibling with the highest timestamp. Allow-mult=false is safer, and that’s the setting I’m referring to by “last write wins.” All cases of data loss in this post apply to both settings, though.

First, let’s install Riak, join the nodes together, and tell the cluster to commit the change:

salticid riak.setup

salticid riak.join

salticid riak.commit

You can watch the logs with salticid riak.tail. Watch salticid riak.transfers until there are no handoffs remaining. The cluster is now in a stable state.

For this particular application we’ll be adding numbers to a list stored in a single Riak object. This is a typical use case for Dynamo systems–the atomic units in the system are keys, not rows or columns. Let’s run the app with last-write-wins consistency:

lein run riak lww-sloppy-quorum

Writes completed in 5.119 seconds

2000 total

2000 acknowledged

566 survivors

1434 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

1 2 3 4 6 8 ... 1990 1991 1992 1995 1996 1997

1.0 ack rate

0.717 loss rate

Riak lost 71% of acknowledged writes on a fully-connected, healthy cluster. No partitions. Why?

Remember how partitions and concurrency are essentially the same problem? Simultaneous writes are causally disconnected. If two clients write values which descend from the same object, Riak just picks the write with the higher timestamp, and throws away the other write. This is a classic data race, and we know how to fix those: just add a mutex. We’ll wrap all operations against Riak in a perfectly consistent, available distributed lock.

“But you can’t do that! That violates the CAP theorem!”

Clever girl. Jepsen lets us pretend, though:

lein run lock riak-lww-sloppy-quorum

Writes completed in 21.475 seconds

2000 total

2000 acknowledged

2000 survivors

All 2000 writes succeeded. :-D

Problem solved! No more write conflicts. Now let’s see how it behaves under a partition by running salticid jepsen.partition during a run:

237 :ok

242 :ok

247 :ok

252 :ok

257 :ok

262 timeout

85 :ok

204 timeout

203 timeout

106 :ok

209 timeout

267 timeout

90 :ok

The first thing you’ll notice is that our writes start to lag hard. Some clients are waiting to replicate a write to a majority of nodes, but one side of the partition doesn’t have a majority available. Even though Riak is an AP design, it can functionally become unavailable while nodes are timing out.

Those requests time out until Riak determines those nodes are inaccessible, and sets up fallback vnodes. Once the fallback vnodes are in place, writes proceed on both sides of the cluster, because both sides have a majority of vnodes available. This is by design in Dynamo. Allowing both components to see a majority is called a sloppy quorum, and it allows both components to continue writing data with full multi-node durability guarantees. If we didn’t set up fallback vnodes, a single node failure could destroy our data.

Before collecting results, let’s heal the cluster: salticid jepsen.heal. Remember to wait for Riak to recover, by waiting until salticid riak.transfers says there’s no data left to hand off.

Writes completed in 92.773 seconds

2000 total

1985 acknowledged

176 survivors

1815 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

85 90 95 100 105 106 ... 1994 1995 1996 1997 1998 1999

6 unacknowledged writes found! ヽ(´ー`)ノ

(203 204 218 234 262 277)

0.9925 ack rate

0.91435766 loss rate

0.00302267 unacknowledged but successful rate

91% data lost. This is fucking catastrophic, ladies.

What happened? When the partition healed, Riak had two essentially two versions of the list: one from each side of the partition (plus some minorly divergent copies on each side). Last-write-wins means we pick the one with the higher timestamp. No matter what you do, all the writes from one side or the other will be discarded.

If your Riak cluster partitions, and you write to a node which can’t reach any of the original copies of the data, that write of a fresh object can overwrite the original record–destroying all the original data.

Strict quorum

The problem is that we allowed writes to proceed on both sides of the partition. Riak has two more settings for reads and writes: PR and PW, for primary read and write, respectively. PR means you have to read a value from at least that many of the original owners of a key: fallback vnodes don’t count. If we set PR + PW >= quorum, operations against a given key will only be able to proceed on one component of a partitioned cluster. That’s a CP system, right?

lein run lock riak-lww-quorum

274 :ok

1250 :ok

279 com.basho.riak.client.RiakRetryFailedException: com.basho.riak.pbc.RiakError: {pw_val_unsatisfied,2,1}

1381 :ok

277 com.basho.riak.client.RiakRetryFailedException: com.basho.riak.pbc.RiakError: {pr_val_unsatisfied,2,1}

Here we see the cluster denying a write and a read, respectively, to clients which can’t see a majority of the primary nodes for a key. Note that because the quorums are spread around the nodes, a Dynamo system will be partially available in this mode. In any given component, you’ll be able to read and write some fraction of the keys, but not others.

2000 total

1971 acknowledged

170 survivors

1807 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

86 91 95 96 100 101 ... 1994 1995 1996 1997 1998 1999

6 unacknowledged writes found! ヽ(´ー`)ノ

(193 208 219 237 249 252)

0.9855 ack rate

0.9167935 loss rate

0.00304414 unacknowledged but successful rate

PR=PW=R=W=quorum still allowed 92% write loss. We reported failure for more writes than before, so that’s a start–but what gives? Shouldn’t this have been CP?

The problem is that that failed writes may still be partially successful. Dynamo is designed to preserve writes as much as possible. Even though a node might return “PW val unsatisfied” when it can’t replicate to the primary vnodes for a key, it may have been able to write to one primary vnode–or any number of fallback vnodes. Those values will still be exchanged during read-repair, considered as conflicts, and the timestamp used to discard the older value–meaning all writes from one side of the cluster.

This means the minority component’s failing writes can destroy all of the majority component’s successful writes. Repeat after me: Clocks. Are. Evil.

Embrace your siblings

Is there no hope? Is there anything we can do to preserve my writes in Riak?

Yes. We can use CRDTs.

If we enable allow-mult in Riak, the vector clock algorithms will present both versions to the client. We can combine those objects together using a merge function. If the merge function is associative, commutative, and idempotent over that type of object, we can guarantee that it always converges to the same value regardless of the order of writes. If the merge function doesn’t discard data (like last-write-wins does), then it will preserve writes from both sides.

In this case, we’re accumulating a set of numbers. We can use set union as our merge function, or 2P sets, or OR sets, if we need to remove numbers.

lein run riak-crdt

Writes completed in 80.918 seconds

2000 total

1948 acknowledged

2000 survivors

All 2000 writes succeeded. :-D

CRDTs preserve 100% of our writes. We still have false negatives in this demo, because the client timed out on a few writes which Riak was still propagating, when the partition first began. False negatives are OK, though, because state-based CRDTs are idempotent. We can repeat our writes arbitrarily many times, in any order, without duplicating data.

Moreover, CRDTs are an AP design: we can write safely and consistently even when the cluster is totally partitioned–for example, when no majority exists. They’re also eventually consistent (in a safe, data-preserving sense) when components are partitioned away from all copies of a given object and are forced to start from scratch.

Strategies for working with Riak

Enable allow-mult. Use CRDTs.

Seriously. LWW never should have been the standard behavior for a Dynamo system, but Basho made it the default after customers complained that they didn’t like the complexity of reasoning about siblings. Customers are the only reason Riak exists, and this behavior is gonna seem OK until you start experiencing partitions (and remember, fault tolerance is the reason you chose Riak in the first place), so we’re stuck with a default config which promotes simple-yet-dangerous behavior.

As a consequence of that decision, community resources which people rely on to learn how to use Riak are often aimed towards last-write-wins. Software isn’t just an artifact, but a culture around its use. I don’t really know what we can learn from this, besides the fact that engineering and culture are tough problems.

CRDTs may be too large, too complex, or too difficult to garbage-collect for your use case. However, even if you can’t structure your data as a full CRDT, writing a hacked-together merge function which just takes care of a couple important fields (say, set union over your friend list and logical OR over the other fields) can go a long way towards preventing catastrophic data loss.

There are cases where last-write-wins is a safe strategy. If your data is immutable, then it doesn’t matter which copy you choose. If your writes mean “I know the full correct state of this object at this time”, it’s safe. Many caches and backup systems look like this. If, however, your writes mean “I am changing something I read earlier,” then LWW is unsafe.

Finally, you can decide to accept dropped data. All databases will fail, in different ways, and with varying probabilities. Riak’s probability distribution might be OK for you.

Introducing locks is a bad idea. Even if they did prevent data loss–and as we saw, they don’t–you’ll impose a big latency cost. Moreover, locks restrict your system to being CP, so there’s little advantage to having an AP database. However, some really smart folks at Basho are working on adding Paxos rounds for writes which need to be CP. Having a real consensus protocol will allow Riak’s distributed writes to be truly atomic.

So: we’ve seen that Riak’s last-write-wins is fundamentally unsafe in the presence of network partitions. You can lose not only writes made during the partition, but all writes made at any time prior. Riak is an AP system, and its tunable CAP controls only allow you to detect some forms of write loss–not prevent it. You can’t add consistency to a database by tacking on a lock service because wall clock time doesn’t matter: consistency is a causal property of the relationships between the writes themselves. AP systems involve fundamentally different kinds of data structures, with their own unique tradeoffs.

In the next post, we’ll review what we’ve learned from these four distributed systems, and where we go from here.

Outstanding post. And a great talk at Ricon (I watched the live stream.) One small argument:

You said “False negatives are OK, though, because state-based CRDTs are idempotent.” Which is not true of any of the counters currently described in the literature. Retrying an increment on a counter that was partially successful will lead to a double count. You can easily create an idempotent counter by using a G-Set (or two!) and using a unique ID for each operation, taking the set cardinality as the counter’s value, but the space trade-off is pretty steep. Maybe you could bound the time a unique ID may be reused after failure, but this is still a reasonably difficult engineering problem, that involves some sort of consensus to shrink the G-Set.

I’m working on something at the moment that uses append only logs, transformed periodically into state based CRDTs that might be good for an idempotent counter…it is a long way from done and I have little time.