Previously, on Jepsen, we reviewed Elasticsearch’s progress in addressing data-loss bugs during network partitions. Today, we’ll see Aerospike 3.5.4, an “ACID database”, react violently to a basic partition.

[Update, 2018-03-07] See the followup analysis of 3.99.0.3

Aerospike is a high-performance, distributed, schema-less, KV store, often deployed in caching, analytics, or ad tech environments. Its five-dimensional data model is similar to Bigtable or Cassandra: namespaces (databases) contain sets (tables) of records, where keys identify records. Each record is a map of bin names to values. Aerospike has put a good deal of work into performance across good-size (~100TB) datasets, and is repositioning itself as a general purpose datastore competitive with, say, MongoDB.

Previously, on Jepsen, we demonstrated stale and dirty reads in MongoDB. In this post, we return to Elasticsearch, which loses data when the network fails, nodes pause, or processes crash.

Nine months ago, in June 2014, we saw Elasticsearch lose both updates and inserted documents during transitive, nontransitive, and even single-node network partitions. Since then, folks continue to refer to the post, often asking whether the problems it discussed are still issues in Elasticsearch. The response from Elastic employees is often something like this:

Please note: our followup analysis of 3.4.0-rc3 revealed additional faults in MongoDB’s replication algorithms which could lead to the loss of acknowledged documents–even with Majority Write Concern, journaling, and fsynced writes.

In May of 2013, we showed that MongoDB 2.4.3 would lose acknowledged writes at all consistency levels. Every write concern less than MAJORITY loses data by design due to rollbacks–but even WriteConcern.MAJORITY lost acknowledged writes, because when the server encountered a network error, it returned a successful, not a failed, response to the client. Happily, that bug was fixed a few releases later.

Since then I’ve improved Jepsen significantly and written a more powerful analyzer for checking whether or not a system is linearizable. I’d like to return to Mongo, now at version 2.6.7, to verify its single-document consistency. (Mongo 3.0 was released during my testing, and I expect they’ll be hammering out single-node data loss bugs for a little while.)

I like builders and have written APIs that provide builder patterns, but I really prefer option maps where the language makes it possible. Instead of a builder like

Wizard wiz = new WizardBuilder("some string")

.withPriority(1)

.withMode(SOME_ENUM)

.enableFoo()

.disableBar()

.build();

I prefer writing something like

So there’s a blog post that advises every method should, when possible, return self. I’d like to suggest you do the opposite: wherever possible, return something other than self.

Mutation is hard

Mutation makes code harder to reason about. Mutable objects make equality comparisons tricky: if you use a mutable object as the key in a hashmap, for instance, then change one of its fields, what happens? Can you access the value by the new string value? By the old one? What about a set? An array? For a fun time, try these in various languages. Try it with mutable primitives, like Strings, if the language makes a distinction. Enjoy the results.

Previously: Modeling.

Writing software can be an exercise in frustration. Useless error messages, difficult-to-reproduce bugs, missing stacktrace information, obscure functions without documentation, and unmaintained libraries all stand in our way. As software engineers, our most useful skill isn’t so much knowing how to solve a problem as knowing how to explore a problem that we haven’t seen before. Experience is important, but even experienced engineers face unfamiliar bugs every day. When a problem doesn’t bear a resemblance to anything we’ve seen before, we fall back on general cognitive strategies to explore–and ultimately solve–the problem.

There’s an excellent book by the mathematician George Polya: How to Solve It, which tries to catalogue how successful mathematicians approach unfamiliar problems. When I catch myself banging my head against a problem for more than a few minutes, I try to back up and consider his principles. Sometimes, just taking the time to slow down and reflect can get me out of a rut.

With the language fundamentals in hand, here’s my thinking for the remainder of the Clojure from the ground up book chapters. I’m putting Jepsen on hold to work on this project for the rest of the year; hoping to get the source material complete by… January?

- Debugging and getting help

- Polymorphism

- Error Handling

- Modularization and refactoring

- It’s not at all obvious what an object is

- JVM interop

- The Clojure type system

- Compiler at runtime

- Build your own language

- Performance analysis

- Parsers and protocols

- Storage and persistence

- Networks and messaging

- Concurrency and queues

This post covers Elasticsearch 1.1.0. In the months since its publication, Elasticsearch has added a comprehensive overview of correctness issues and their progress towards fixing some of these bugs.

In the previous post, we discovered the potential for data loss in RabbitMQ clusters. In this oft-requested installation of the Jepsen series, we’ll look at etcd: a new contender in the CP coordination service arena. We’ll also discuss Consul’s findings with Jepsen.

Like Zookeeper, etcd is designed to store small amounts of strongly-consistent state for coordination between services. It exposes a tree of logical nodes; each identified by a string key, containing a string value, and with a version number termed an index–plus, potentially, a set of child nodes. Everything’s exposed as JSON over an HTTP API.

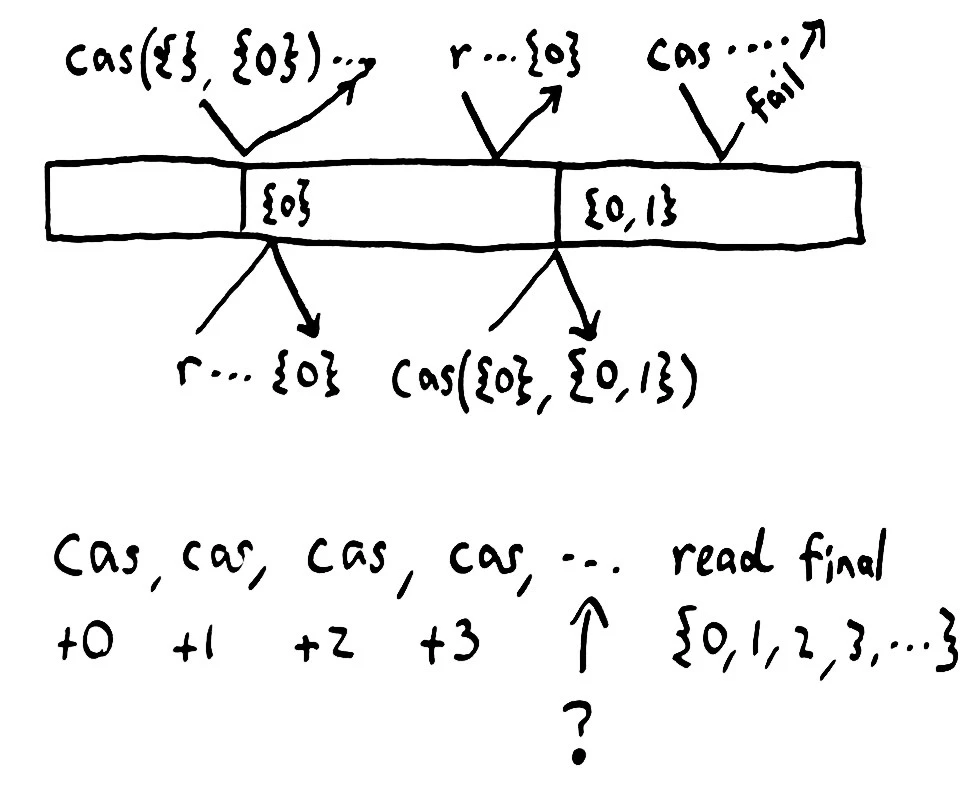

Etcd is often used for service discovery, distributed locking, atomic broadcast, sequence numbers, and pointers to data in eventually consistent stores. Because etcd offers atomic compare-and-set by both value and version index, it’s a powerful primitive in building other distributed systems.

RabbitMQ is a distributed message queue, and is probably the most popular open-source implementation of the AMQP messaging protocol. It supports a wealth of durability, routing, and fanout strategies, and combines excellent documentation with well-designed protocol extensions. I’d like to set all these wonderful properties aside for a few minutes, however, to talk about using your queue as a lock service. After that, we’ll explore RabbitMQ’s use as a distributed fault-tolerant queue.

Earlier versions of Jepsen found glaring inconsistencies, but missed subtle ones. In particular, Jepsen was not well equipped to distinguish linearizable systems from sequentially or causally consistent ones. When people asked me to analyze systems which claimed to be linearizable, Jepsen could rule out obvious classes of behavior, like dropping writes, but couldn’t tell us much more than that. Since users and vendors are starting to rely on Jepsen as a basic check on correctness, it’s important that Jepsen be able to identify true linearization errors.

Update, 2018-08-24: For a more complete, formal discussion of consistency models, see jepsen.io.

Network partitions are going to happen. Switches, NICs, host hardware, operating systems, disks, virtualization layers, and language runtimes, not to mention program semantics themselves, all conspire to delay, drop, duplicate, or reorder our messages. In an uncertain world, we want our software to maintain some sense of intuitive correctness.

Well, obviously we want intuitive correctness. Do The Right Thing(TM)! But what exactly is the right thing? How might we describe it? In this essay, we’ll take a tour of some “strong” consistency models, and see how they fit together.