This fall, I worked with MongoDB to design a new Jepsen test for MongoDB. We discovered design flaws in the v0 replication protocol, plus implementation bugs in the v1 protocol, both of which allowed for the loss of majority-committed updates. While the v0 protocol remains broken, patches for v1 are available in MongoDB 3.2.12 and 3.4.0, and now pass the expanded Jepsen test suite.

You can read the full analysis at jepsen.io.

In Herlihy and Wing’s seminal paper introducing linearizability, they mention an important advantage of this consistency model:

Unlike alternative correctness conditions such as sequential consistency [31] or serializability [40], linearizability is a local property: a system is linearizable if each individual object is linearizable.

Locality is important because it allows concurrent systems to be designed and constructed in a modular fashion; linearizable objects can be implemented, verified, and executed independently. A concurrent system based on a nonlocal correctness property must either rely on a centralized scheduler for all objects, or else satisfy additional constraints placed on objects to ensure that they follow compatible scheduling protocols.

This advantage is not shared by sequential consistency, or its multi-object cousin, serializability. This much, I knew–but Herlihy & Wing go on to mention, almost offhand, that strict serializability is also nonlocal!

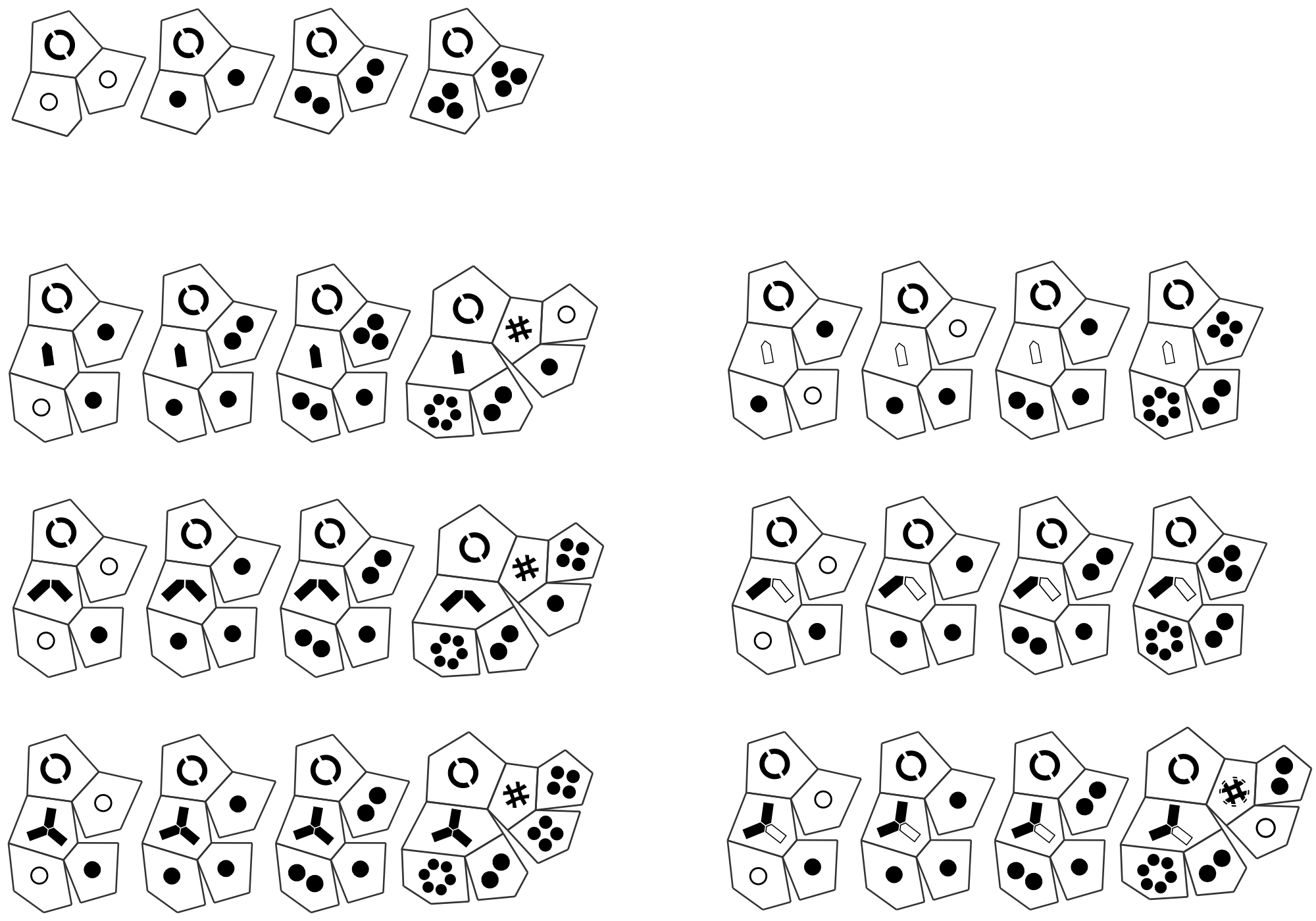

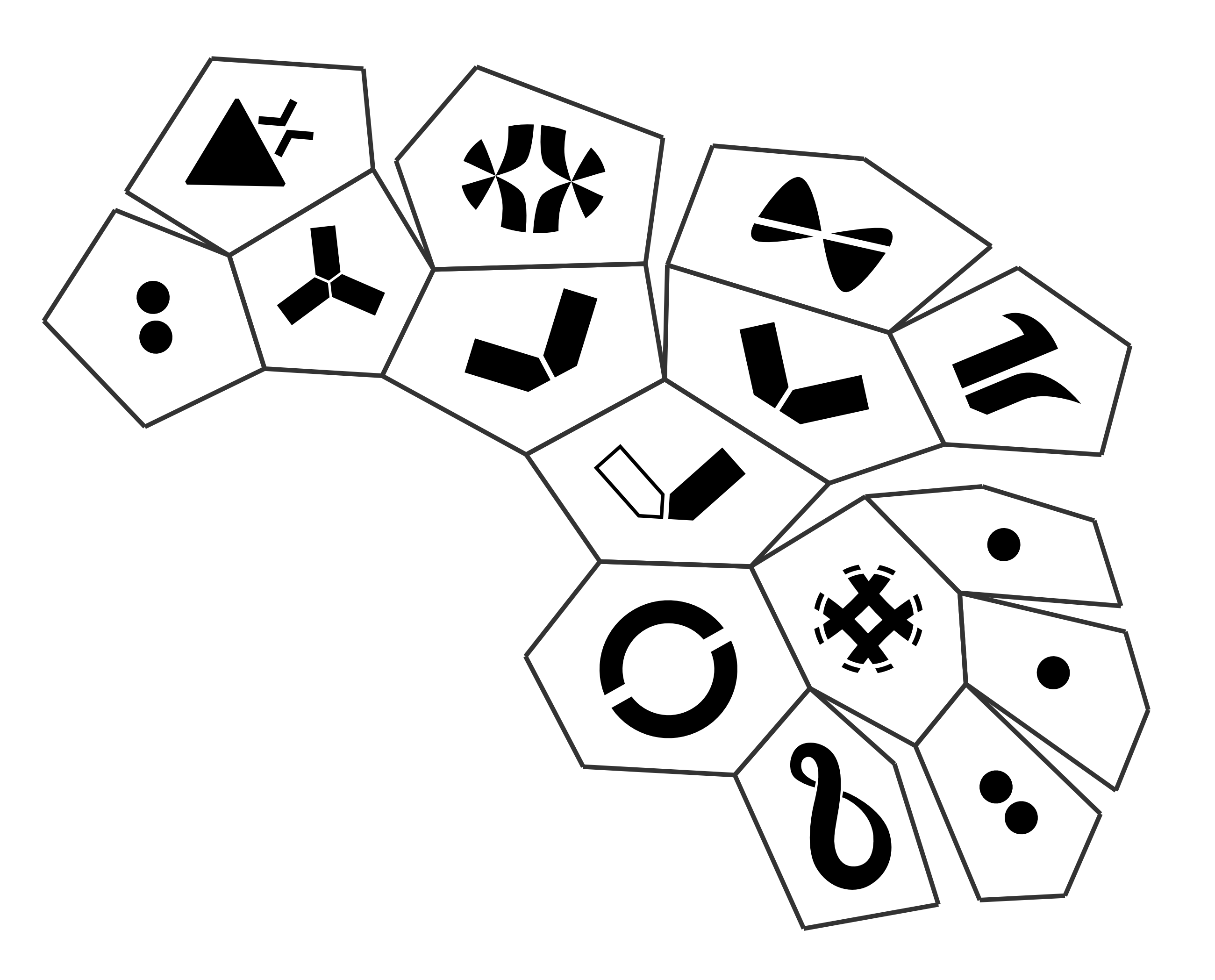

I finished my tattoo last night. If you like puzzles, here’s a primer for the language, and the design itself. You’ll need some basic algebra for the primer, and a little domain knowledge–or a few Google queries–for the tattoo proper.

These are unpolished thoughts. I started playing again for sources and to refine these ideas, but the game crashes so often that I’m giving up. Still think some folks might find this interesting. Spoilers everywhere.

In the opening, Davey notes that the CounterStrike level appears to be a desert town, but Coda has scattered these floating boxes and out-of-place, brightly-colored cubes in the level: a reminder that the game is not exactly what it purports to be. “Calling cards”, he calls them. A reminder that the game was created by a real person. “They are all going to give us access to their creator. I want to see past the games themselves. I want to know who the real person is.”

Jump up a level. We’re not just interested in learning about Coda as a person. We’re interested in understanding Davey as a person, too. What were the rough circumstances in Davey’s life? How did Coda’s work help? If each level tells us something about Coda, the purported author, then The Beginner’s Guide tells us something about Davey. And in another sense, the characters of Davey and Coda tell us something about the real Davey, and the people on his team. For instance, regardless of how we interpret the narrative’s characters, it’s likely safe to say that the real Davey is interested in questions of authorial intent.

In the last Jepsen post, we found that RethinkDB could lose data when a network partition occurred during cluster reconfiguration. In this analysis, we’ll show that although VoltDB 6.3 claims strict serializability, internal optimizations and bugs lead to stale reads, dirty reads, and even lost updates; fixes are now available in version 6.4. This work was funded by VoltDB, and conducted in accordance with the Jepsen ethics policy.

VoltDB is a distributed SQL database intended for high-throughput transactional workloads on datasets which fit entirely in memory. All data is stored in RAM, but backed by periodic disk snapshots and an on-disk recovery log for crash durability. Data is replicated to at least k+1 nodes to tolerate k failures. Tables may be replicated to every node for fast local reads, or sharded for linear storage scalability.

As an SQL database, VoltDB supports the usual ad-hoc SQL statements, with some caveats (e.g. no auto-increment and no foreign key constraints). However, its approach to multi-statement transactions is distinct: instead of BEGIN ... COMMIT, VoltDB transactions are expressed as stored procedures, either in SQL or Java. Stored procedures must be deterministic across nodes (a constraint checked by hashing and comparing their resulting SQL statements), which allows VoltDB to pipeline transaction execution given a consensus on transaction order.
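To make the determinism check concrete, here is a minimal sketch of comparing hashes of the SQL statements each replica produced. This is an illustration only, not VoltDB's implementation: the function names, the choice of SHA-256, and the sample statements are all assumptions.

```python
import hashlib

def statement_digest(statements):
    """Hash the ordered SQL statements one run of a procedure produced."""
    h = hashlib.sha256()
    for sql in statements:
        h.update(sql.encode("utf-8"))
        h.update(b"\x00")  # separator, so ["ab", "c"] differs from ["a", "bc"]
    return h.hexdigest()

def replicas_agree(runs):
    """runs maps a (hypothetical) replica name to the statements it executed."""
    digests = {statement_digest(stmts) for stmts in runs.values()}
    return len(digests) <= 1

deterministic = {
    "n1": ["UPDATE accounts SET balance = balance - 5 WHERE id = 1"],
    "n2": ["UPDATE accounts SET balance = balance - 5 WHERE id = 1"],
}
divergent = {
    # Each replica embedded its own local clock reading in the statement.
    "n1": ["INSERT INTO events VALUES (1, 1700000000)"],
    "n2": ["INSERT INTO events VALUES (1, 1700000001)"],
}

print(replicas_agree(deterministic))  # True
print(replicas_agree(divergent))      # False
```

A procedure that reads a local clock or a random number generator would produce different statements on different replicas, which is exactly what a comparison like this catches.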

In the last Jepsen analysis, we saw that RethinkDB 2.2.3 could encounter spectacular failure modes due to cluster reconfiguration during a partition. In this analysis, we’ll talk about Crate, and find out just how many versions a row’s version identifies.

Crate is a shared-nothing, “infinitely scalable”, eventually-consistent SQL database built on Elasticsearch.

Because Elasticsearch has lost and corrupted data in response to network partitions and other faults, and continues to do so, some might question whether Elasticsearch is appropriate for a primary data store. Crate’s co-founders knew about these hazards, and promised to publish fault-tolerance documentation in October 2014.

In the previous Jepsen analysis of RethinkDB, we tested single-document reads, writes, and conditional writes, under network partitions and process pauses. RethinkDB did not exhibit any nonlinearizable histories in those tests. However, testing with more aggressive failure modes, on both 2.1.5 and 2.2.3, has uncovered a subtle error in Rethink’s cluster membership system. This error can lead to stale reads, dirty reads, lost updates, node crashes, and table unavailability requiring an unsafe emergency repair. Versions 2.2.4 and 2.1.6, released last week, address this issue.

Until now, Jepsen tests have used a stable cluster membership throughout the test. We typically run the system being tested on five nodes, and although the network topology between the nodes may change, processes may crash and restart, and the system may elect new nodes as leaders, we do not introduce or remove nodes from the system while it is running. Thus far, we haven’t had to go that far to uncover concurrency errors.

Since RethinkDB passed its stable-membership partitioning tests, I offered the team a more aggressive failure model: we’d dynamically reconfigure the cluster membership during the test. This is a harder problem than consensus with fixed membership: both old and new nodes must gracefully agree on the membership change, ensure that both sets of nodes will agree on any operations performed during the handover, and finally transition to normal consensus on the new set of nodes. The delicate handoff of operations from old nodes to new provides ample opportunities for mistakes.
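One well-known way to make old and new nodes agree during a handover is joint consensus, as described in the Raft paper: while the change is in flight, an operation counts as committed only if a majority of the old membership and a majority of the new membership both acknowledge it. The sketch below illustrates that rule only. It is not a description of RethinkDB's mechanism, and the node names and function names are hypothetical.

```python
def has_majority(acks, members):
    """True if strictly more than half of `members` appear in `acks`."""
    return len(acks & members) > len(members) // 2

def joint_commit(acks, old_members, new_members):
    """During a membership change, require majorities of BOTH configurations."""
    return has_majority(acks, old_members) and has_majority(acks, new_members)

old = {"n1", "n2", "n3"}
new = {"n3", "n4", "n5"}

# A majority of the old nodes alone is not enough during the handover...
print(joint_commit({"n1", "n2", "n3"}, old, new))  # False
# ...but acknowledgements spanning majorities of both sets are.
print(joint_commit({"n2", "n3", "n4"}, old, new))  # True
```

The first case is the kind of mistake the handover invites: the old nodes believe an operation is safe, while the new nodes have barely heard of it.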

In this Jepsen report, we’ll verify RethinkDB’s support for linearizable operations using majority reads and writes, and explore assorted read and write anomalies when consistency levels are relaxed. This work was funded by RethinkDB, and conducted in accordance with the Jepsen ethics policy.

RethinkDB is an open-source, horizontally scalable document store. Similar to MongoDB, documents are hierarchical, dynamically typed, schemaless objects. Each document is uniquely identified by an id key within a table, which in turn is scoped to a DB. On top of this key-value structure, a composable query language allows users to operate on data within documents, or across multiple documents–performing joins, aggregations, etc. However, only operations on a single document are atomic–queries which access multiple keys may read and write inconsistent data.

RethinkDB shards data across nodes by primary key, maintaining replicas of each key across n nodes for redundancy. For each shard, a single replica is designated a primary, which serializes all updates (and strong reads) to that shard’s documents–allowing linearizable writes, updates, and reads against a single key.

Percona’s CTO Vadim Tkachenko wrote a response to my Galera Snapshot Isolation post last week. I think Tkachenko may have misunderstood some of my results, and I’d like to clear those up now. I’ve ported the MariaDB tests to Percona XtraDB Cluster, and would like to confirm that using exclusive write locks on all reads, as Tkachenko recommends, can recover serializable histories. Finally, we’ll address Percona’s documentation.

I didn’t use the default isolation levels

But there I need to add quite IMPORTANT addition: it may leave data in inconsistent state if you use SPECIAL TYPE of transactions in default isolation levels that Aphyr uses in his test.

Previously, on Jepsen, we saw Chronos fail to run jobs after a network partition. In this post, we’ll see MariaDB Galera Cluster allow transactions to read partially committed state.

Galera Cluster extends MySQL (and MySQL’s fork, MariaDB) to clusters of machines, all of which support reads and writes. It uses a group communication system to broadcast writesets and certify each for use. Unlike most Postgres replication systems, it handles the failure and recovery of all nodes automatically, and unlike MySQL Cluster, it has only one type of node (as opposed to three). The MariaDB Galera packages are particularly easy to install and configure.

Galera Cluster uses the normal InnoDB isolation levels locally–but we’re interested in cluster-wide consistency guarantees. Between nodes, Galera claims to implement Snapshot Isolation–a reasonably strong consistency model.

Chronos is a distributed task scheduler (cf. cron) for the Mesos cluster management system. In this edition of Jepsen, we’ll see how simple network interruptions can permanently disrupt a Chronos+Mesos cluster.

Chronos relies on Mesos, which has two flavors of node: master nodes, and slave nodes. Ordinarily in Jepsen we’d refer to these as “primary” and “secondary” or “leader” and “follower” to avoid connotations of, well, slavery, but the master nodes themselves form a cluster with leaders and followers, and terms like “executor” have other meanings in Mesos, so I’m going to use the Mesos terms here.

Mesos slaves connect to masters and offer resources like CPU, disk, and memory. Masters take those offers and make decisions about resource allocation using frameworks like Chronos. Those decisions are sent to slaves, which actually run tasks on their respective nodes. Masters form a replicated state machine with a persistent log. Both masters and slaves rely on Zookeeper for coordination and discovery. Zookeeper is also a replicated persistent log.

In response to You Do It Too: Forfeiting Partition Tolerance in Distributed Systems, I’d like to remind folks of a few things around CAP.

Partition intolerance does not mean that partitions cannot happen; it means partitions are not supported.

Specifically, partition-intolerant systems must sacrifice invariants when partitions occur. Which invariants? By Gilbert & Lynch, either the system allows nonlinearizable histories, or some requests to non-failing nodes cannot complete. Related proofs tell us that systems which preserve availability during partitions also cannot provide sequential consistency, serializability, repeatable read, cursor stability, or snapshot isolation.
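The choice Gilbert & Lynch describe can be sketched from the point of view of a single node during a partition. This toy model assumes a majority-quorum design, which is only one possible design; the function name, the `prefer` parameter, and the return strings are hypothetical.

```python
def handle_request(reachable, cluster_size, prefer="consistency"):
    """Decide how a node answers, given how many nodes it can reach.

    `reachable` counts the node itself. Majority quorums are an assumption
    of this sketch, not something the theorem requires.
    """
    if reachable > cluster_size // 2:
        return "ok"                 # majority side can still make progress
    if prefer == "consistency":
        return "unavailable"        # refuse rather than risk a bad history
    return "ok-possibly-stale"      # answer anyway, giving up linearizability

# A five-node cluster split 3/2:
print(handle_request(3, 5))                         # ok
print(handle_request(2, 5))                         # unavailable
print(handle_request(2, 5, prefer="availability"))  # ok-possibly-stale
```

The minority side has no third option: it either turns the request away, or answers with state that may already be out of date.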