This is total conjecture; please correct me in the comments, because I don’t understand finance at all. This is from a physics standpoint.

In markets, money flows against an information gradient. Traders with perfect knowledge of a stock’s value in the future can make trades with no risk, yielding the highest expectation values of returns E[R]. Traders with zero knowledge of the stock’s value have the worst expectation value. If the market is conservative (that is to say, no money is added or lost inside the market itself: a stock sells for x dollars and is purchased for x dollars in each transaction), the sum of all expectation values over traders

$$S = \sum_{i=0}^{n} E[R(\mathrm{trader}_i)]$$

In response to Results of the 2012 State of Clojure Survey:

The idea of having a primary language honestly comes off to me as a sign that the developer hasn't spent much time programming yet: the real world has so many languages in it, and many times the practical choice is constrained by that of the platform or existing code to interoperate with.

I’ve been writing code for ~18 years, ~10 professionally. I’ve programmed in (chronological order here) Modula-2, C, Basic, the HTML constellation, Perl, XSLT, Ruby, PHP, Java, Mathematica, Prolog, C++, Python, ML, Erlang, Haskell, Clojure, and Scala. I can state unambiguously that Clojure is my primary language: it is the most powerful, the most fun, and has the fewest tradeoffs.

More from Hacker News. I figure this might be of interest to folks working on parallel systems. I’ll let KirinDave kick us off with:

Go scales quite well across multiple cores iff you decompose the problem in a way that's amenable to Go's strategy. Same with Erlang. No one is making “excuses”. It’s important to understand these problems. Not understanding concurrency, parallelism, their relationship, and Amdahl’s Law is what has Node.js in such trouble right now.
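For readers who haven't run into Amdahl's Law, here is a minimal sketch of the bound it describes. The function name `amdahl_speedup` and the 90% parallel fraction are illustrative assumptions, not anything from the thread; the formula itself is the standard one, speedup = 1 / ((1 - p) + p / n).

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a program can run in parallel.

    parallel_fraction: share of the work (0..1) that can be spread over workers.
    workers: number of cores or processes available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part never shrinks; only the parallel part is divided up.
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical workload: 90% of the runtime parallelizes cleanly.
    for workers in (1, 2, 8, 64):
        print(workers, round(amdahl_speedup(0.9, workers), 2))
```

The point of the exercise: with any serial fraction left over, adding cores gives diminishing returns, so how you decompose the problem matters more than how many cores you throw at it.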

Our last full day in New Zealand, I rented a Ninjette from the folks over at nzbike.com. $70 for a full day, maybe $90 with petrol included. I headed north from Auckland on 16, found mile after mile of well-paved, well-paced roads with good cadence and spectacular scenery. Enjoyed getting completely lost in a generally northeasterly direction, hit the eastern coast, took in the surf, and hugged the coast south. It’s all farms: one-lane bridges over creeks, limestone hillocks, and the occasional jaw-dropping valley opened up in the sun. Hit some dirt, a few dead ends, and generally had a grand time of it.

Everyone, everywhere, was thrilled to say hello, tell me about their farm or flower shop, and give directions. I don’t think I met a single unfriendly soul in the whole darn country. Even petrol station attendants grinned, warned me about storms to the north, and pointed out a better route. Maybe it’s a factor of size, maybe it’s purely cultural, but I wish the rural US were anywhere near this friendly.

Sunset lasted three hours. I don’t know how to describe it, and it doesn’t come through in photos. Been trying for the last eight hours to find an exposure that fits, but nothing feels right. The light is warm, hangs in the air thickly, coats the landscape in dusty gold.

This is a response to a Hacker News thread asking about concurrency vs parallelism.

Concurrency is more than decomposition, and more subtle than “different pieces running simultaneously.” It’s actually about causality.

Two operations are concurrent if they have no causal dependency between them.
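One way to make that definition concrete is with vector clocks. This is a sketch under assumptions: vector clocks are a common technique for tracking causality, not something the thread itself specifies, and the names `happened_before` and `concurrent` are made up for illustration. Each operation carries a map from process name to a counter; one operation causally precedes another if its clock is less than or equal in every entry and strictly less in at least one.

```python
from typing import Dict

# A vector clock maps a process name to the number of events seen from it.
Clock = Dict[str, int]


def happened_before(a: Clock, b: Clock) -> bool:
    """True if the operation stamped `a` causally precedes the one stamped `b`."""
    nodes = set(a) | set(b)
    # Missing entries count as zero: that process has not been heard from.
    no_greater = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    strictly_less = any(a.get(n, 0) < b.get(n, 0) for n in nodes)
    return no_greater and strictly_less


def concurrent(a: Clock, b: Clock) -> bool:
    """True if neither operation causally depends on the other."""
    nodes = set(a) | set(b)
    same = all(a.get(n, 0) == b.get(n, 0) for n in nodes)
    return not same and not happened_before(a, b) and not happened_before(b, a)


if __name__ == "__main__":
    # Two writes on the same process: the second depends on the first.
    print(concurrent({"p1": 1}, {"p1": 2}))
    # Writes on different processes that never heard of each other.
    print(concurrent({"p1": 2}, {"p1": 1, "p2": 1}))
```

Note that nothing here refers to wall-clock time: two operations can be concurrent in this sense even if one finished hours before the other started, as long as no information flowed between them.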

This post by Jason Alexander on public ownership of firearms has been making the rounds on my Twitter feed lately, and I need to interject some facts into the discussion.

Despite these massacres recurring and despite the 100,000 Americans that die every year due to domestic gun violence - these people see no value to even considering some kind of control as to what kinds of weapons are put in civilian hands.

This number is off by a factor of 3-10, depending on what you mean. The total number of gun deaths in the US is roughly 31,000 per annum. The US is the country with the highest incidence of gun deaths per capita, which probably has something to do with a quarter of US citizens and roughly half of households owning guns.

Most applications have configuration: how to open a connection to the database, what file to log to, the locations of key data files, etc.

Configuration is hard to express correctly. It’s dynamic because you don’t know the configuration at compile time; instead it comes from a file, the network, command arguments, etc. Config is almost always implicit, because it affects your functions without being passed in as an explicit parameter. Most languages address this in two ways:

Globals
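A minimal sketch of the globals approach, to show what "implicit" means here. Everything in it is hypothetical: the `CONFIG` dictionary, the `load_config` and `log_destination` functions, and the `log_file` key are invented for illustration, and JSON is just an assumed config format.

```python
import json
from typing import Any, Dict

# Hypothetical module-level global holding whatever was loaded at startup.
CONFIG: Dict[str, Any] = {}


def load_config(text: str) -> None:
    """Parse a JSON config string and replace the global configuration."""
    CONFIG.clear()
    CONFIG.update(json.loads(text))


def log_destination() -> str:
    """Read config implicitly: nothing about it appears in the signature."""
    return CONFIG.get("log_file", "stderr")


if __name__ == "__main__":
    # The values are only known at runtime, e.g. read from a file or the network.
    load_config('{"log_file": "/var/log/app.log"}')
    print(log_destination())
```

`log_destination` takes no arguments, yet its result changes depending on what was loaded earlier. That is the convenience of a global, and also the reason it is hard to reason about or test in isolation.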

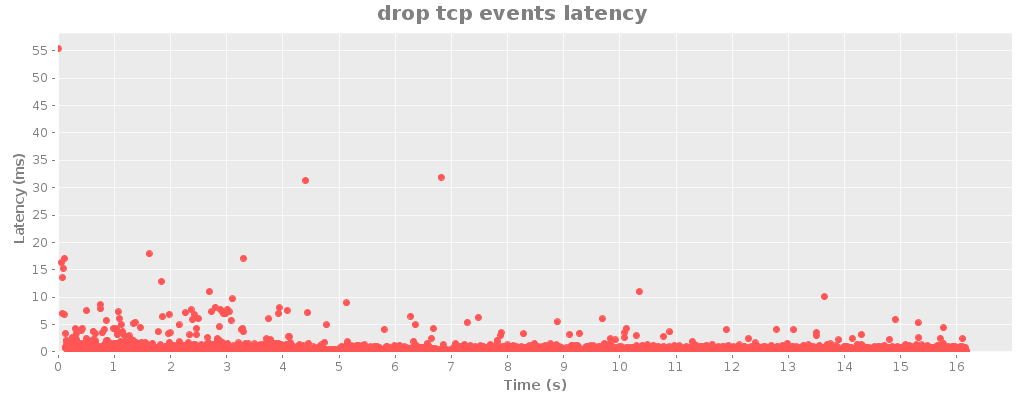

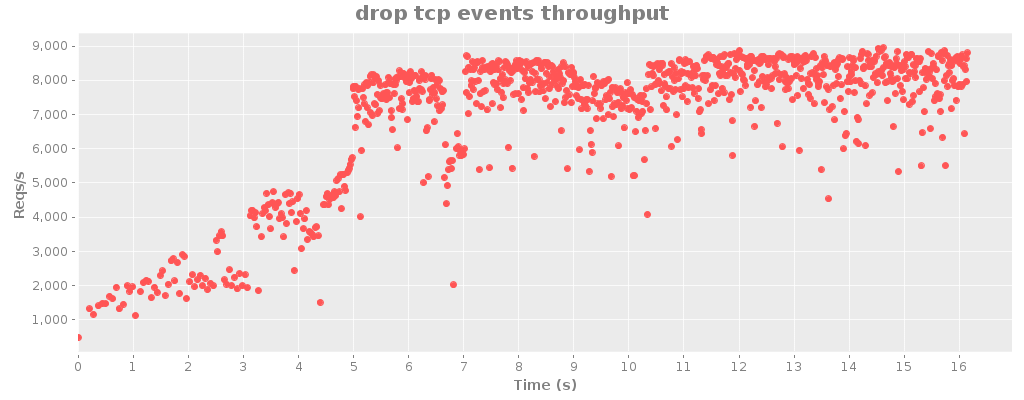

As a quick follow-up, I managed to squeeze an extra 10% or so out of riemann.server by adding a few type hints.

I’ve been focusing on Riemann client libraries and optimizations recently, both at Boundary and on my own time.

Boundary uses the JVM extensively, and takes advantage of Coda Hale’s Metrics. For our applications I’ve written a Riemann Java UDP and TCP client, which also includes a Metrics reporter. The Metrics reporter (I’ll be submitting that to metrics-contrib later) will just send periodic events for each of the metrics in a registry, and optionally some VM statistics as well. It can prefix each service, filter with predicates, and has been reporting for two of our production systems for about a week now.

The Java client has been integrated into Riemann itself, replacing the old Aleph client. It’s about on par with the old Aleph client, owing to its use of standard Socket and friends as opposed to Netty. Mårten Gustafson and Edward Ribeiro have been instrumental in getting the Java client up and running, so my sincere thanks go out to both of them.

The initial stable release of Riemann 0.1.0 is available for download. This is the culmination of the 0.0.3 development path and 2 months of production use at Showyou.

Is it production ready? I think so. The fundamental stream operators are in place. A comprehensive test suite checks out. Riemann has never crashed. Its performance characteristics should be suitable for a broad range of scales and applications.

There is a possible memory leak, on the order of 1% per day in our production setup. I can’t replicate it under a variety of stress tests. It’s not clear to me whether this is legitimate state information (i.e. an increase in tracked data), GC/malloc implementations being greedy, or an actual memory leak. Profiling and understanding this is my top priority for Riemann. If this happens to you, restarting the daemon every few weeks should not be prohibitive; it takes about five seconds to reload. Should you encounter this issue, please drop me a line with your configuration; it may help me identify the cause.

When I designed UState, I had a goal of a thousand state transitions per second. I hit about six hundred on my MacBook Pro, and skirted 1000/s on real hardware. EventMachine is good, but I started to bump up against concurrency limits in MRI’s interpreter lock, my ability to generate and exchange SQL with SQLite, and protobuf parse times. So I set out to write a faster server. I chose Clojure for its expressiveness and powerful model of concurrent state, and more importantly for the JVM, which gets me Netty, a mature virtual machine with a decent thread model, and a wealth of fast libraries for parsing, state, and statistics. That project is called Riemann.

Today, I’m pleased to announce that Riemann crossed the 10,000 events/second mark in production. In fact it’s skirting 11k in my stress tests. (That final drop in throughput is an artifact of the graph system showing partially-complete data.)