ClassNotFoundException: java.util.SequencedCollection

Recently I’ve had users of my libraries start reporting mysterious errors due to a missing reference to SequencedCollection, a Java interface added in JDK 21:

Execution error (ClassNotFoundException) at jdk.internal.loader.BuiltinClassLoader/loadClass (BuiltinClassLoader.java:641).
java.util.SequencedCollection

Specifically, projects using Jepsen 0.3.5 started throwing this error due to Clojure’s built-in rrb_vector.clj, which is particularly vexing given that the class doesn’t reference SequencedCollection at all.
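A quick way to tell whether the running JVM ships this interface at all is to ask for the class by name. This is only a diagnostic sketch: `class-available?` is a hypothetical helper, not part of Clojure or Jepsen.

```clojure
(defn class-available?
  "Hypothetical helper: true if the named class can be loaded by this JVM."
  [class-name]
  (try
    (Class/forName class-name)
    true
    (catch ClassNotFoundException _
      false)))

;; Expected to be false on JDKs older than 21, true on 21 and later.
(class-available? "java.util.SequencedCollection")
```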

Why is Jepsen Written in Clojure?

People keep asking why Jepsen is written in Clojure, so I figure it’s worth having a referenceable answer. I’ve programmed in something like twenty languages. Why choose a Weird Lisp?

Jepsen is built for testing concurrent systems–mostly databases. Because it tests concurrent systems, the language itself needs good support for concurrency. Clojure’s immutable, persistent data structures make it easier to write correct concurrent programs, and the language and runtime have excellent concurrency support: real threads, promises, futures, atoms, locks, queues, cyclic barriers, all of java.util.concurrent, etc. I also considered languages (like Haskell) with more rigorous control over side effects, but decided that Clojure’s less-dogmatic approach was preferable.
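As a small illustration of that concurrency support (a sketch, not code from Jepsen), here are several real JVM threads updating one atom. The names `counter` and `hammer!` are made up for this example.

```clojure
(def counter (atom 0))

(defn hammer!
  "Starts n-threads real JVM threads, each incrementing the atom
  n-incs times, waits for all of them, and returns the final value."
  [a n-threads n-incs]
  (let [threads (mapv (fn [_]
                        (Thread. (fn [] (dotimes [_ n-incs] (swap! a inc)))))
                      (range n-threads))]
    (run! #(.start ^Thread %) threads)
    (run! #(.join ^Thread %) threads)
    @a))

(hammer! counter 8 1000)
; => 8000
```

Because `swap!` retries on contention instead of overwriting, no increments are lost, and no explicit locks are needed.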

Because Jepsen tests databases, it needs broad client support. Almost every database has a JVM client, typically written in Java, and Clojure has decent Java interop.

10⁹ Operations: Large Histories with Jepsen

Jepsen is a library for writing tests of concurrent systems: everything from single-node data structures to distributed databases and queues. A key part of this process is recording a history of operations performed during the test. Jepsen checkers analyze a history to find consistency anomalies and to compute performance metrics. Traditionally Jepsen has stored the history in a Clojure vector (an immutable in-memory data structure like an array), and serialized it to disk at the end of the test. This limited Jepsen to histories on the order of tens of millions of operations. It also meant that if Jepsen crashed during a several-hour test run, it was impossible to recover any of the history for analysis. Finally, saving and loading large tests involved long wait times—sometimes upwards of ten minutes.

Over the last year I’ve been working on ways to resolve these problems. Generators are up to ten times faster. A new operation datatype makes each operation smaller and faster to access. Jepsen’s new on-disk format allows us to stream histories incrementally to disk, to work with histories of up to a billion operations far exceeding available memory, to recover safely from crashes, and to load tests almost instantly by deserializing data lazily. New history datatypes support both densely and sparsely indexed histories, and efficiently cache auxiliary indices. They also support lazy disk-backed map and filter. These histories support both linear and concurrent folds, which dramatically improves checker performance on multicore systems: real-world checkers can readily analyze 250,000 operations/sec. Histories support multi-query optimization: when multiple threads fold over the same history, a query planner automatically fuses those folds together to perform them in a single pass. Since Jepsen often folds dozens of times over the same history, this saves a good deal of disk IO and deserialization time. These features are enabled by a new, transactional, dependency-aware task executor.
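The idea of fusing folds can be sketched with ordinary reducing functions. This is not Jepsen's history API or its query planner, just a minimal illustration: `fuse` and `count-rf` are hypothetical names, and the sketch ignores early termination via `reduced`.

```clojure
(defn fuse
  "Hypothetical helper: combines two reducing functions into one whose
  accumulator is a pair [a b], so both folds run in a single pass."
  [f g]
  (fn
    ([] [(f) (g)])
    ([[a b]] [(f a) (g b)])
    ([[a b] x] [(f a x) (g b x)])))

(def count-rf
  "A reducing function that counts elements."
  (completing (fn ([] 0) ([n _] (inc n)))))

;; One traversal of the collection yields both the sum and the count.
(transduce identity (fuse + count-rf) [1 2 3 4])
; => [10 4]
```

When the collection lives on disk, reading and deserializing it once instead of once per fold is where the savings would come from.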

Fast Multi-Accumulator Reducers

Again with the reductions! I keep writing code which reduces over a collection, keeping track of more than one variable. For instance, here’s one way to find the mean of a collection of integers:

(defn mean
  "A reducer to find the mean of a collection. Accumulators are [sum count] pairs."
  ([] [0 0])
  ([[sum count]] (/ sum count))
  ([[sum count] x]
   [(+ sum x) (inc count)]))

This mean function is what Clojure calls a reducer, or a reducing function. With no arguments, it constructs a fresh accumulator. With two arguments, it combines an element of some collection with the accumulator, returning a new accumulator. With one argument, it transforms the accumulator into some final result.
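One way to see all three arities in action is `transduce`, which calls each of them in turn. The sketch below repeats the `mean` definition so it runs on its own; using `identity` as the transducer is just a way to pass the elements through unchanged.

```clojure
(defn mean
  "A reducer to find the mean of a collection. Accumulators are [sum count] pairs."
  ([] [0 0])
  ([[sum count]] (/ sum count))
  ([[sum count] x]
   [(+ sum x) (inc count)]))

;; transduce calls (mean) for the initial accumulator, (mean acc x) for
;; each element, and finally (mean acc) to produce the result.
(transduce identity mean [1 2 3 4 5 6 7])
; => 4
```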

Loopr: A Loop/Reduction Macro for Clojure

I write a lot of reductions: loops that combine every element from a collection in some way. For example, summing a vector of integers:

(reduce (fn [sum x] (+ sum x)) 0 [1 2 3])

; => 6

If you’re not familiar with Clojure’s reduce, it takes a reducing function f, an initial accumulator init, and a collection xs. It then invokes (f init x0) where x0 is the first element in xs. f returns a new accumulator value acc1, which is then passed to (f acc1 x1) to produce a new accumulator acc2, and so on until every x in xs is folded into the accumulator. That accumulator is the return value of reduce.
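To watch the accumulator evolve step by step, `reductions` returns every intermediate value rather than only the last one. The name `steps` is just for this sketch.

```clojure
;; Each element of the result is the accumulator after one more step:
;; init, then (f init 1), then (f acc1 2), then (f acc2 3).
(def steps (reductions (fn [sum x] (+ sum x)) 0 [1 2 3]))

steps
; => (0 1 3 6)

;; reduce returns only the final accumulator.
(reduce (fn [sum x] (+ sum x)) 0 [1 2 3])
; => 6
```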

In writing reductions, there are some problems that I run into over and over. For example, what if you want to find the mean of some numbers in a single pass? You need two accumulator variables–a sum and a count. The usual answer to this is to make the accumulator a vector tuple. Destructuring bind makes this… not totally awful, but a little awkward:

(reduce (fn [[sum count] x]
          [(+ sum x) (inc count)])
        [0 0]
        [1 2 3 4 5 6 7])
; => [28 7]

Rewriting the Technical Interview

Previously: Typing the Technical Interview.

Update, November 2023: here are the full term rewrite and language macros which formed the seed of this story. These files include OO notation as well as the basic Algol syntax shown here. There is also a sketch of an object-oriented language with classes and inheritance, implemented as a Clojure macro. I do not remember writing it. It looks terrifying.

Released, we dance

For a moment, we play

We thousand small leaf-ships

Rejoice, in the clear morning light

Reversing the technical interview

If you want to get a job as a software witch, you’re going to have to pass a whiteboard interview. We all do them, as engineers–often as a part of our morning ritual, along with arranging a beautiful grid of xterms across the astral plane, and compulsively running ls in every nearby directory–just in case things have shifted during the night–the incorporeal equivalent of rummaging through that drawer in the back of the kitchen where we stash odd flanges, screwdrivers, and the strangely specific plastic bits: the accessories, those long-estranged black sheep of the families of our household appliances, their original purpose now forgotten, perhaps never known, but which we are bound to care for nonetheless. I’d like to walk you through a common interview question: reversing a linked list.

First, we need a linked list. Clear your workspace of unwanted xterms, sprinkle salt into the protective form of two parentheses, and recurse. Summon a list from the void.

(defn cons [h t] #(if % h t))

Returning self or void suggests mutability

So there’s a blog post that advises every method should, when possible, return self. I’d like to suggest you do the opposite: wherever possible, return something other than self.

Mutation is hard

Mutation makes code harder to reason about. Mutable objects make equality comparisons tricky: if you use a mutable object as the key in a hashmap, for instance, then change one of its fields, what happens? Can you access the value by the new string value? By the old one? What about a set? An array? For a fun time, try these in various languages. Try it with mutable primitives, like Strings, if the language makes a distinction. Enjoy the results.
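Here is one way to run that experiment on the JVM from Clojure, using Java's mutable `ArrayList` as a key in a mutable `HashMap`. The names `k` and `m` are arbitrary, and the behavior described afterwards assumes OpenJDK's standard `HashMap`.

```clojure
(import '(java.util ArrayList HashMap))

(def k (ArrayList. [1 2]))   ; a mutable key
(def m (HashMap.))

(.put m k :found)
(.get m k)                   ; => :found

(.add k 3)                   ; mutate the key in place; its hashCode changes

(.get m k)                   ; lookup by the mutated key
(.get m (ArrayList. [1 2]))  ; lookup by a fresh copy of the old contents
(.size m)                    ; how many entries does the map think it has?
```

With OpenJDK's `HashMap`, both lookups after the mutation should return nil while the size stays 1: the entry still sits in the bucket for the old hash, but its key no longer equals the old contents.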

Clojure from the ground up: debugging

Previously: Modeling.

Writing software can be an exercise in frustration. Useless error messages, difficult-to-reproduce bugs, missing stacktrace information, obscure functions without documentation, and unmaintained libraries all stand in our way. As software engineers, our most useful skill isn’t so much knowing how to solve a problem as knowing how to explore a problem that we haven’t seen before. Experience is important, but even experienced engineers face unfamiliar bugs every day. When a problem doesn’t bear a resemblance to anything we’ve seen before, we fall back on general cognitive strategies to explore–and ultimately solve–the problem.

There’s an excellent book by the mathematician George Polya: How to Solve It, which tries to catalogue how successful mathematicians approach unfamiliar problems. When I catch myself banging my head against a problem for more than a few minutes, I try to back up and consider his principles. Sometimes, just taking the time to slow down and reflect can get me out of a rut.

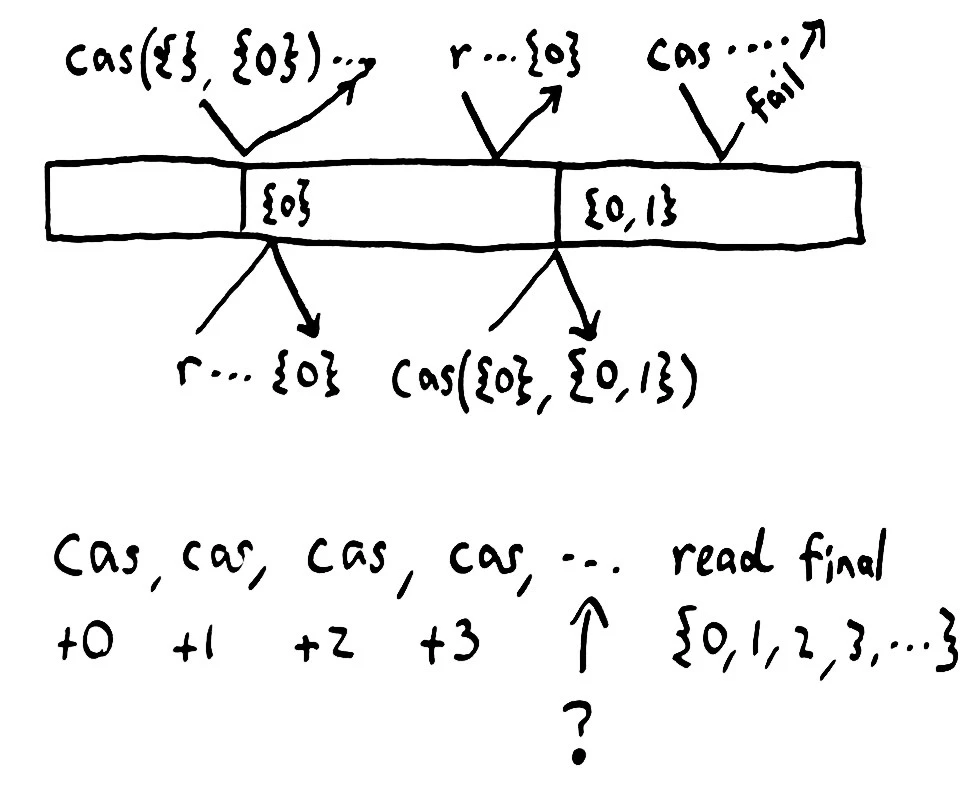

Computational techniques in Knossos

Earlier versions of Jepsen found glaring inconsistencies, but missed subtle ones. In particular, Jepsen was not well equipped to distinguish linearizable systems from sequentially or causally consistent ones. When people asked me to analyze systems which claimed to be linearizable, Jepsen could rule out obvious classes of behavior, like dropping writes, but couldn’t tell us much more than that. Since users and vendors are starting to rely on Jepsen as a basic check on correctness, it’s important that Jepsen be able to identify true linearization errors.

Clojure from the ground up: modeling

Previously: Logistics

Until this point in the book, we’ve dealt primarily in specific details: what an expression is, how math works, which functions apply to different data structures, and where code lives. But programming, like speaking a language, painting landscapes, or designing turbines, is about more than the nuts and bolts of the trade. It’s knowing how to combine those parts into a cohesive whole–and this is a skill which is difficult to describe formally. In this part of the book, I’d like to work with you on an integrative tour of one particular problem: modeling a rocket in flight.

We’re going to reinforce our concrete knowledge of the standard library by using maps, sequences, and math functions together. At the same time, we’re going to practice how to represent a complex system; decomposing a problem into smaller parts, naming functions and variables, and writing tests.

Clojure from the ground up: logistics

Previously, we covered state and mutability.

Up until now, we’ve been programming primarily at the REPL. However, the REPL is a limited tool. While it lets us explore a problem interactively, that interactivity comes at a cost: changing an expression requires retyping the entire thing, editing multi-line expressions is awkward, and our work vanishes when we restart the REPL–so we can’t share our programs with others, or run them again later. Moreover, programs in the REPL are hard to organize. To solve large problems, we need a way of writing programs durably–so they can be read and evaluated later.

In addition to the code itself, we often want to store ancillary information. Tests verify the correctness of the program. Resources like precomputed databases, lookup tables, images, and text files provide other data the program needs to run. There may be documentation: instructions for how to use and understand the software. A program may also depend on code from other programs, which we call libraries, packages, or dependencies. In Clojure, we have a standardized way to bind together all these parts into a single directory, called a project.

Knossos: Redis and linearizability

A few weeks ago I criticized a proposal by Antirez for a hypothetical linearizable system built on top of Redis WAIT and a strong coordinator. I showed that the coordinator he suggested was physically impossible to build, and that anybody who tried to actually implement that design would run into serious problems. I demonstrated those problems (and additional implementation-specific issues) in an experiment on Redis’ unstable branch.

Antirez’ principal objections, as I understand them, are:

- Some readers mistakenly assumed that the system I discussed was a proposal for Redis Cluster.

- I showed that the proposal was physically impossible, but didn’t address its safety if it were possible.

- The impossible parts of the proposed system could be implemented in a real asynchronous network by layering in additional constraints on the leader election process.

Clojure from the ground up: state

Previously: Macros.

Most programs encompass change. People grow up, leave town, fall in love, and take new names. Engines burn through fuel while their parts wear out, and new ones are swapped in. Forests burn down and their logs become nurseries for new trees. Despite these changes, we say “She’s still Nguyen”, “That’s my motorcycle”, “The same woods I hiked through as a child.”

Identity is a skein we lay across the world of immutable facts; a single entity which encompasses change. In programming, identities unify different values over time. Identity types are mutable references to immutable values.
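A minimal sketch of that last sentence, using an atom as the identity. The map contents and the names `person` and `earlier` are invented for illustration.

```clojure
;; The atom is the identity; the maps it points to are immutable values.
(def person (atom {:name "Nguyen" :age 30}))

(def earlier @person)          ; capture the current value

(swap! person update :age inc) ; the identity now refers to a new value

earlier
; => {:name "Nguyen", :age 30}
@person
; => {:name "Nguyen", :age 31}
```

The value captured in `earlier` never changes; only the reference moves on to a new value.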

Clojure from the ground up: macros

In Chapter 1, I asserted that the grammar of Lisp is uniform: every expression is a list, beginning with a verb, and followed by some arguments. Evaluation proceeds from left to right, and every element of the list must be evaluated before evaluating the list itself. Yet we just saw, at the end of Sequences, an expression which seemed to violate these rules.

Clearly, this is not the whole story.
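A small sketch of a form that breaks the "evaluate every element first" rule. It is not necessarily the expression from the previous chapter, just an easy one to check at the REPL.

```clojure
;; If every argument were evaluated first, this would throw.
;; Instead, or stops at the first truthy value.
(or :first (throw (Exception. "never evaluated")))
; => :first

;; Asking what a form rewrites to shows that when is not an ordinary function call.
(macroexpand '(when true :yes))
; => (if true (do :yes))
```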

Macroexpansion

Clojure from the ground up: sequences

In Chapter 3, we discovered functions as a way to abstract expressions; to rephrase a particular computation with some parts missing. We used functions to transform a single value. But what if we want to apply a function to more than one value at once? What about sequences?

For example, we know that (inc 2) increments the number 2. What if we wanted to increment every number in the vector [1 2 3], producing [2 3 4]?

user=> (inc [1 2 3])

ClassCastException clojure.lang.PersistentVector cannot be cast to java.lang.Number clojure.lang.Numbers.inc (Numbers.java:110)
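For reference, the standard library's answer to this question is `map`, which applies a function to each element in turn. The chapter may well build up to it from first principles; this sketch only shows where it ends up.

```clojure
;; map returns a lazy sequence of (inc x) for each x.
(map inc [1 2 3])
; => (2 3 4)

;; mapv does the same but returns a vector.
(mapv inc [1 2 3])
; => [2 3 4]
```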

Clojure from the ground up: functions

We left off last chapter with a question: what are verbs, anyway? When you evaluate (type :mary-poppins), what really happens?

user=> (type :mary-poppins)

clojure.lang.Keyword

To understand how type works, we’ll need several new ideas. First, we’ll expand on the notion of symbols as references to other values. Then we’ll learn about functions: Clojure’s verbs. Finally, we’ll use the Var system to explore and change the definitions of those functions.
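As a preview sketch of those three ideas together: the symbol `type` names a Var, and the Var holds a function. The name `verb` is invented for this example.

```clojure
;; (var type) looks up the Var that the symbol type refers to.
(def verb (var type))

verb
; => #'clojure.core/type

;; Dereferencing the Var yields the function itself...
(fn? (deref verb))
; => true

;; ...which can be called like any other verb.
((deref verb) :mary-poppins)
; => clojure.lang.Keyword
```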

Clojure from the ground up: basic types

We’ve learned the basics of Clojure’s syntax and evaluation model. Now we’ll take a tour of the basic nouns in the language.

Types

We’ve seen a few different values already–for instance, nil, true, false, 1, 2.34, and "meow". Clearly all these things are different values, but some of them seem more alike than others.
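One way to see which of these values are alike is to ask each for its type. A quick sketch on the JVM:

```clojure
(map type [nil true false 1 2.34 "meow"])
; => (nil java.lang.Boolean java.lang.Boolean java.lang.Long java.lang.Double java.lang.String)
```

Here `true` and `false` share a type, while `1` and `2.34` are both numbers but of different kinds.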

Clojure from the ground up: welcome

This guide aims to introduce newcomers and experienced programmers alike to the beauty of functional programming, starting with the simplest building blocks of software. You’ll need a computer, basic proficiency in the command line, a text editor, and an internet connection. By the end of this series, you’ll have a thorough command of the Clojure programming language.

Who is this guide for?

Science, technology, engineering, and mathematics are deeply rewarding fields, yet few women enter STEM as a career path. Still more are discouraged by a culture which repeatedly asserts that women lack the analytic aptitude for writing software, that they are not driven enough to be successful scientists, that it’s not cool to pursue a passion for structural engineering. Those few with the talent, encouragement, and persistence to break into science and tech are discouraged by persistent sexism in practice: the old boys’ club of tenure, being passed over for promotions, isolation from peers, and flat-out assault. This landscape sucks. I want to help change it.

Riemann 0.2.0

Riemann 0.2.0 is ready. There’s so much left that I want to build, but this release includes a ton of changes that should improve usability for everyone, and I’m excited to announce its release.

Version 0.2.0 is a fairly major improvement in Riemann’s performance and capabilities. Many things have been solidified, expanded, or tuned, and there are a few completely new ideas as well. There are a few minor API changes, mostly to internal structure–but a few streams are involved as well. Most functions will continue to work normally, but log a deprecation notice when used.

I dedicated the past six months to working on Riemann full-time. I was fortunate to receive individual donations as well as formal contracts with Blue Mountain Capital, SevenScale, and Iovation during that time. That money gave me months of runway to help make these improvements–but even more valuable was the feedback I received from production users, big and small. I’ve used your complaints, frustrations, and ideas to plan Riemann’s roadmap, and I hope this release reflects that.

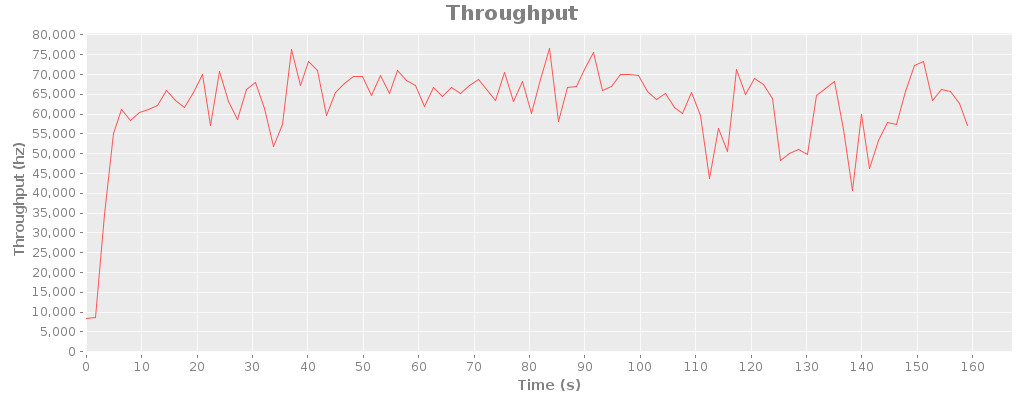

65K messages/sec

The Netty redesign of riemann-java-client made it possible to expose an end-to-end asynchronous API for writes, which dramatically improves throughput for messages with a small number of events. By introducing a small queue of pipelined write promises, riemann-clojure-client can now push 65K events per second, as individual messages, over a single TCP socket. That works out to about 120 mbps of sustained traffic.

I’m really happy about the bulk throughput too: three threads using a single socket, sending messages of 100 events each, can push around 185-200K events/sec, at over 200 mbps. That throughput took 10 sockets and hundreds of threads to achieve in earlier tests.

Timelike 2: everything fails all the time

In the previous post, I described an approximation of Heroku’s Bamboo routing stack, based on their blog posts. Hacker News, as usual, is outraged that the difficulty of building fast, reliable distributed systems could prevent Heroku from building a magically optimal architecture. Coda Hale quips:

Really enjoying @RapGenius’s latest mix tape, “I Have No Idea How Distributed Systems Work”.

Coda understands the implications of the CAP theorem. This job is too big for one computer–any routing system we design must be distributed. Distribution increases the probability of a failure, both in nodes and in the network itself. These failures are usually partial, and often take the form of degradation rather than the system failing as a whole. Two nodes may be unable to communicate with each other, though a client can see both. Nodes can lie to each other. Time can flow backwards.

Timelike: a network simulator

For more on Timelike and routing simulation, check out part 2 of this article: everything fails all the time. There’s also more discussion on Reddit.

RapGenius is upset about Heroku’s routing infrastructure. RapGenius, like many web sites, uses Rails, and Rails is notoriously difficult to operate in a multithreaded environment. Heroku operates at large scale, and made engineering tradeoffs which gave rise to high latencies–latencies with adverse effects on customers. I’d like to explore why Heroku’s Bamboo architecture behaves this way, and help readers reason about their own network infrastructure.

To start off with, here’s a Rails server. Since we’re going to be discussing complex chains of network software, I’ll write it down as an s-expression:

Blathering about Riemann consistency

tl;dr Riemann is a monitoring system, so it emphasizes liveness over safety.

Riemann is aimed at high-throughput (millions of events/sec/node), partial-harvest event processing, where it is acceptable to trade completeness for throughput at low latencies. For instance, it’s probably fine to drop half of your request latency events on the floor, if you’re calculating a lossy histogram with sampling anyway. It’s also typically acceptable to have nondeterministic behavior with respect to time windows: if one node’s clock is skewed, it’s better to process it “soonish” rather than waiting an unbounded amount of time for it to check in.

There is no synchronization or relationship between events. Events are immutable and have a total order, even though a given server or client may only have a fraction of the relevant events for a system. The events are, in a sense, the transaction log–except that the semantics of those transactions depend on the stream configuration.

Reaching 200K events/sec

I’ve been doing a lot of performance tuning in Riemann recently, especially in the clients–but I’d like to share a particularly spectacular improvement from yesterday.

The Riemann protocol

Riemann’s TCP protocol is really simple. Send a Msg to the server, receive a response Msg. Messages might include some new events for the server, or a query; and a response might include a boolean acknowledgement or a list of events matching the query. The protocol is ordered; messages on a connection are processed in-order and responses sent in-order. Each Message is serialized using Protocol Buffers. To figure out how large each message is, you read a four-byte length header, then read length bytes, and parse that as a Msg.
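The framing described above can be sketched with plain Java streams. This is not the Riemann client's code: `write-frame!` and `read-frame` are hypothetical names, the payload is opaque bytes standing in for a serialized Msg, and the header is assumed to be big-endian, which is what `DataOutputStream` writes.

```clojure
(import '(java.io ByteArrayInputStream ByteArrayOutputStream
                  DataInputStream DataOutputStream))

(defn write-frame!
  "Writes a four-byte length header followed by the payload bytes."
  [^DataOutputStream out ^bytes payload]
  (.writeInt out (alength payload))
  (.write out payload 0 (alength payload))
  (.flush out))

(defn read-frame
  "Reads a four-byte length header, then exactly that many bytes."
  [^DataInputStream in]
  (let [len (.readInt in)
        buf (byte-array len)]
    (.readFully in buf)
    buf))

;; Round trip through an in-memory buffer instead of a TCP socket.
(def buffer (ByteArrayOutputStream.))
(write-frame! (DataOutputStream. buffer) (.getBytes "hello" "UTF-8"))

(def frame
  (read-frame (DataInputStream. (ByteArrayInputStream. (.toByteArray buffer)))))

(String. ^bytes frame "UTF-8")
; => "hello"
```

In a real client the bytes in `frame` would be handed to the Protocol Buffers parser rather than decoded as a string.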

Language Power

I’ve had two observations floating around in my head, looking for a way to connect with each other.

Many “architecture patterns” are scar tissue around the absence of higher-level language features.

and a criterion for choosing languages and designing APIs

Pipelining requests

I’ve been putting more work into riemann-java-client recently, since it’s definitely the bottleneck in performance testing Riemann itself. The existing RiemannTcpClient and RiemannRetryingTcpClient were threadsafe, but almost fully mutexed; using one essentially serialized all threads behind the client itself. For write-heavy workloads, I wanted to do better.

There are two logical optimizations I can make, in addition to choosing careful data structures, mucking with socket options, etc. The first is to bundle multiple events into a single Message, which the API supports. However, your code may not be structured in a way to efficiently bundle events, so where higher latencies are OK, the client can maintain a buffer of outbound events and flush it regularly.

The second optimization is to take advantage of request pipelining. Riemann’s protocol is simple and synchronous: you send a Message over a TCP connection, and receive exactly one TCP message in response. The existing clients, however, forced you to wait n milliseconds for the message to cross the network and be processed by Riemann, and for the acknowledgement to come back. We can do better by pipelining requests: sending new requests before waiting for the previous responses, and matching up received messages with their corresponding requests later.
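A minimal sketch of that matching step, assuming responses arrive in the same order the requests were sent. None of these names come from riemann-java-client: `pipelined-client`, `write!`, and `on-response!` are hypothetical, and the "network" is an atom that records what was sent.

```clojure
(import '(java.util.concurrent LinkedBlockingQueue))

(defn pipelined-client
  "send! is a function that puts one message on the wire."
  [send!]
  {:send!   send!
   :pending (LinkedBlockingQueue.)})

(defn write!
  "Sends msg without waiting for its response. Returns a promise that
  will eventually hold the response."
  [client msg]
  (let [p (promise)]
    ;; Enqueue and send under one lock so promise order matches wire order.
    (locking (:pending client)
      (.put ^LinkedBlockingQueue (:pending client) p)
      ((:send! client) msg))
    p))

(defn on-response!
  "Called by the reader for each response, in arrival order; delivers it
  to the oldest outstanding promise."
  [client response]
  (deliver (.take ^LinkedBlockingQueue (:pending client)) response))

;; Simulated usage: two writes go out before any response comes back.
(def sent (atom []))
(def client (pipelined-client #(swap! sent conj %)))

(def a (write! client :event-1))
(def b (write! client :event-2))

(doseq [msg @sent]
  (on-response! client [:ok msg]))

[@a @b]
; => [[:ok :event-1] [:ok :event-2]]
```

A caller that needs the acknowledgement can deref the promise; one that does not can simply keep writing.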

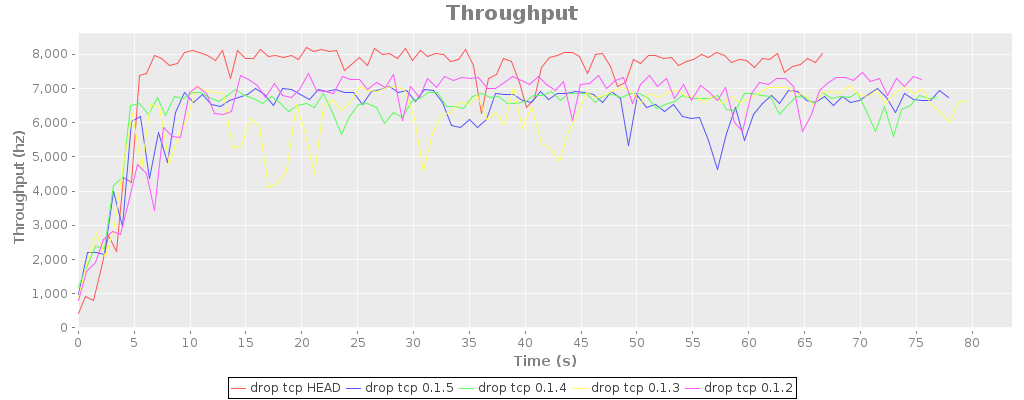

Schadenfreude

Schadenfreude is a benchmarking tool I’m using to improve Riemann. Here’s a profile generated by the new riemann-bench, comparing a few recent releases in their single-threaded TCP server throughput. These results are dominated by loopback read latency–maxing out at about 8-9 kiloevents/sec. I’ll be using schadenfreude to improve client performance in high-volume and multicore scenarios.

A Clojure benchmarking thing

I needed a tool to evaluate internal and network benchmarks of Riemann, to ask questions like

- Is parser function A or B more efficient?

- How many threads should I allocate to the worker threadpool?

- How did commit 2556 impact the latency distribution?

In dealing with “realtime” systems it’s often a lot more important to understand the latency distribution than a single throughput figure, and for GC reasons you often want to see a time dependence. Basho Bench does this well, but it’s in Erlang, which rules out microbenchmarking of Riemann functions (e.g. at the REPL). So I’ve hacked together this little thing I’m calling Schadenfreude (from German; “happiness at the misfortune of others”). Sums up how I feel about benchmarks in general.
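To make the difference between a distribution and a single figure concrete, here is a rough sketch of collecting per-call latencies and summarizing them as quantiles. It is not Schadenfreude's API: `time-call`, `quantile`, and `latency-summary` are hypothetical names, and the quantile is a simple index into the sorted samples.

```clojure
(defn time-call
  "Runs (f) and returns the elapsed time in nanoseconds."
  [f]
  (let [t0 (System/nanoTime)]
    (f)
    (- (System/nanoTime) t0)))

(defn quantile
  "Simple index-based quantile of a non-empty collection, 0 <= q <= 1."
  [q xs]
  (let [sorted (vec (sort xs))
        i      (min (dec (count sorted))
                    (long (* q (count sorted))))]
    (nth sorted i)))

(defn latency-summary
  "Calls f n times and summarizes the latency distribution in nanoseconds."
  [f n]
  (let [latencies (vec (repeatedly n #(time-call f)))]
    {:p50 (quantile 0.5 latencies)
     :p99 (quantile 0.99 latencies)
     :max (quantile 1.0 latencies)}))

;; Summarize 1000 runs of a small computation.
(latency-summary #(reduce + (range 10000)) 1000)
```

Keeping the timestamp of each sample alongside its latency would also let you plot the time dependence mentioned above, such as GC pauses.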

Cheerleading

In response to Results of the 2012 State of Clojure Survey:

The idea of having a primary language honestly comes off to me as a sign that the developer hasn't spent much time programming yet: the real world has so many languages in it, and many times the practical choice is constrained by that of the platform or existing code to interoperate with.

I’ve been writing code for ~18 years, ~10 professionally. I’ve programmed in (chronological order here) Modula-2, C, Basic, the HTML constellation, Perl, XSLT, Ruby, PHP, Java, Mathematica, Prolog, C++, Python, ML, Erlang, Haskell, Clojure, and Scala. I can state unambiguously that Clojure is my primary language: it is the most powerful, the most fun, and has the fewest tradeoffs.

Context switches and serialization in Node

More from Hacker News. I figure this might be of interest to folks working on parallel systems. I’ll let KirinDave kick us off with:

Go scales quite well across multiple cores iff you decompose the problem in a way that's amenable to Go's strategy. Same with Erlang. No one is making “excuses”. It’s important to understand these problems. Not understanding concurrency, parallelism, their relationship, and Amdahl’s Law is what has Node.js in such trouble right now.

Configuration and scope

Most applications have configuration: how to open a connection to the database, what file to log to, the locations of key data files, etc.

Configuration is hard to express correctly. It’s dynamic because you don’t know the configuration at compile time–instead it comes from a file, the network, command arguments, etc. Config is almost always implicit, because it affects your functions without being passed in as an explicit parameter. Most languages address this in two ways: